The perfect dictatorship would have the appearance of a democracy, but would basically be a prison without walls in which the prisoners would not even dream of escaping. It would essentially be a system of slavery where, through consumption and entertainment, the slaves would love their servitude.

The Chess Master's Opening Game ♛

In a climate-controlled room in Abu Dhabi, a refrigerator-sized chess computer once played endless games against its royal master. Sheikh Tahnoun bin Zayed al Nahyan, under the username zor_champ, would spend hours exploring the synthesis of human intuition and machine calculation. This wasn't mere recreation — it was research. What appeared to be a wealthy sheikh's expensive hobby was actually the first experiment in what would become the world's most sophisticated blueprint for technological control.

This was my first impression after reading Wired's article, "A Spymaster Sheikh Controls a $1.5 Trillion Fortune. He Wants to Use It to Dominate AI."

Though easily dismissed as a mere pastime, the Sheikh's interest in chess marked the beginning of a much more audacious strategy. While global attention has been fixed on the AI competition between the United States and China, the UAE, guided by Sheikh Tahnoun, has been methodically and discreetly establishing its presence on the global stage. Rather than merely joining this contest, the UAE is fundamentally redefining the parameters of the game.

We're asking all the wrong questions about the UAE's artificial intelligence strategy. While Western analysts obsess over the geopolitical competition between the US and China, they're missing the emergence of something far more significant: the first coherent implementation of what I can only call Computational Autocracy, a system where algorithmic governance and human control merge into something fundamentally new.

The Evolution of Control: From Chess to Society

Consider the evolution: from chess computers to surveillance systems to artificial intelligence infrastructure. This isn't merely technological progression; it's the systematic development of increasingly sophisticated mechanisms for shaping human behavior. The Hydra chess computer, far from being an eccentric passion project, was Tahnoun's first laboratory for understanding how machines could guide human decision-making.

"He loved the power of man plus machine," one engineer noted. This seemingly innocuous detail says a great deal about Tahnoun's vision for AI's role in governance.

The Three Phases of Control

Traditional autocracy operates through visible force and explicit control. Computational autocracy, by contrast, works through the subtle manipulation of Choice Architectures — the computational structures that shape how decisions are made. It doesn't prohibit behaviors; it makes them algorithmically improbable. It doesn't demand compliance; it makes compliance the path of least resistance.

The UAE's surveillance apparatus evolved through three distinct phases:

- The Crude Phase (2009-2013): BlackBerry exploits represented old-school surveillance — clumsy, detectable, and ultimately limited. Yet even these early attempts revealed a systematic approach to digital control.

- The Precision Phase (2014-2016): DarkMatter's operations showed increased sophistication in targeted monitoring. This period marked the transition from mass surveillance to algorithmic targeting.

- The Integration Phase (2017-present): ToTok revealed the true genius of computational autocracy — the seamless integration of surveillance into daily life.

The Mechanics of Control: From Theory to Practice

To understand how computational autocracy operates in practice, consider three interlocking systems:

1. The Digital Maze Effect

Imagine a maze where every path is technically open, but some routes are subtly wider, better lit, and more appealing. This is the essence of Algorithmic Choice Architecture. ToTok wasn't just another surveillance app — it was a masterclass in this principle. Like a digital architect designing not just the building but the way people move through it, ToTok created an environment where surveillance became the path of least resistance.

2. The Behavioral Feedback Loop

Think of a thermostat: it doesn't just measure temperature, it actively regulates it. Traditional surveillance systems observe behavior. Computational autocracy creates Behavioral Temperature Control: systems that simultaneously monitor and modify social behavior. The UAE's surveillance infrastructure doesn't just watch; it adjusts the environmental conditions that shape behavior.

3. The Scale Effect

When you scale up a small pond, you get a lake. But when you scale up a tidal pool, you get an ocean, complete with currents, weather systems, and entirely new phenomena. Similarly, when you scale up traditional surveillance, you get more monitoring. But when you scale up Computational Autocracy, you get qualitatively different forms of control, driven by deeper layers of intelligence.

Consider these real-world manifestations:

- Digital Infrastructure: The UAE's smart city initiatives don't just collect data — they create environments where certain behaviors become computationally optimal while others become increasingly costly.

- Financial Systems: The integration of AI with banking and payment systems doesn't just track transactions — it shapes economic behavior through algorithmic incentives and friction points.

- Social Platforms: Government-approved social media platforms don't just monitor communication — they create digital spaces where certain types of interaction become naturally predominant while others fade into algorithmic shadows.

Beyond Traditional Surveillance

This reveals something profound about the future of power. Traditional analyses frame surveillance as a tool of autocracy. But what's emerging in Abu Dhabi suggests something more unsettling: surveillance isn't just a tool of control — it's becoming the fundamental infrastructure of governance itself.

The integration of artificial intelligence with this surveillance infrastructure creates possibilities that our current analytical frameworks struggle to comprehend. We're not witnessing the simple automation of existing control systems. We're seeing the emergence of Predictive Governance — systems designed not just to monitor behavior but to shape it before it occurs.

The Chess Master's Middle Game

This is where Tahnoun's chess obsession becomes particularly relevant. Chess engines don't just calculate moves — they shape the possibility space of future positions. Similarly, the UAE's AI infrastructure isn't designed merely to react to social behavior but to structure the environment in which behavior occurs. This is power projection at its most sophisticated: control through the architecture of possibility itself.

The Experimental Advantage

The $1.5 trillion in sovereign wealth under Tahnoun's control isn't just financial capital; it's experimental capital. While Western democracies debate AI ethics and regulation, Abu Dhabi is building and testing real systems of algorithmic governance. The speed of implementation isn't just about efficiency; it's about the freedom to experiment without democratic constraints.

Consider the implications: While Western companies must navigate privacy concerns, ethical debates, and regulatory frameworks, the UAE can rapidly iterate on real-world implementations of AI-enabled social control. This isn't just about technological development — it's about the accumulation of practical knowledge in implementing these systems at scale.

The Regulatory Sandbox Fallacy

When Sam Altman suggests the UAE could serve as a "regulatory sandbox" for AI, he inadvertently reveals how thoroughly we've misunderstood the nature of what's being built. This isn't merely a testing ground for algorithms — it's a laboratory for perfecting algorithmic governance, where the boundaries between individual choice and systemic control become increasingly indistinguishable.

The Microsoft Blind Spot

Microsoft's $1.5 billion investment in G42 reveals another critical blind spot in our analysis. The focus on preventing technology transfer to China misses the more fundamental issue: we're actively helping build systems of technological control that could ultimately be more sophisticated than anything emerging from Beijing. The UAE isn't just buying technology; it's developing new models of technological governance that could be exported globally.

The Invisible Restructuring of Agency

The true danger of computational autocracy lies not in its obvious manifestations but in its invisible restructuring of human agency. When surveillance is embedded in every digital interaction, when AI systems subtly shape information flows and decision spaces, the very concept of autonomy becomes algorithmically conditioned.

The Automation of Choice

This isn't mere speculation — it's precisely what Tahnoun's chess computer experiments revealed about human-machine synthesis in decision-making. The system doesn't force decisions; it shapes the computational environment until certain choices become virtually inevitable.

The Future of Algorithmic Governance

The future being built in Abu Dhabi isn't just another variant of digital authoritarianism — it's potentially the prototype for a new kind of state, one where the distinction between governance and control, between compliance and coercion, becomes algorithmically optimized. This isn't just about better surveillance or more efficient autocracy — it's about the emergence of systems that could fundamentally reshape the relationship between state power and human behavior.

The Global Economic Engine: When Capital Shapes Control

The global implications of computational autocracy become particularly unsettling when we consider the role of capital. The $1.5 trillion fortune under Tahnoun's control isn't just buying influence; it's reshaping the fundamental architecture of technological development. Think of it as a gravitational force in the AI universe, bending the trajectory of innovation toward systems of control.

The Investment-Control Nexus

Consider the incentives at play: When OpenAI's Sam Altman seeks trillions for chip development, or when Microsoft invests $1.5 billion in G42, they're not just making business decisions — they're helping build the infrastructure of algorithmic governance. It's like providing sophisticated architectural tools to someone designing a digital panopticon.

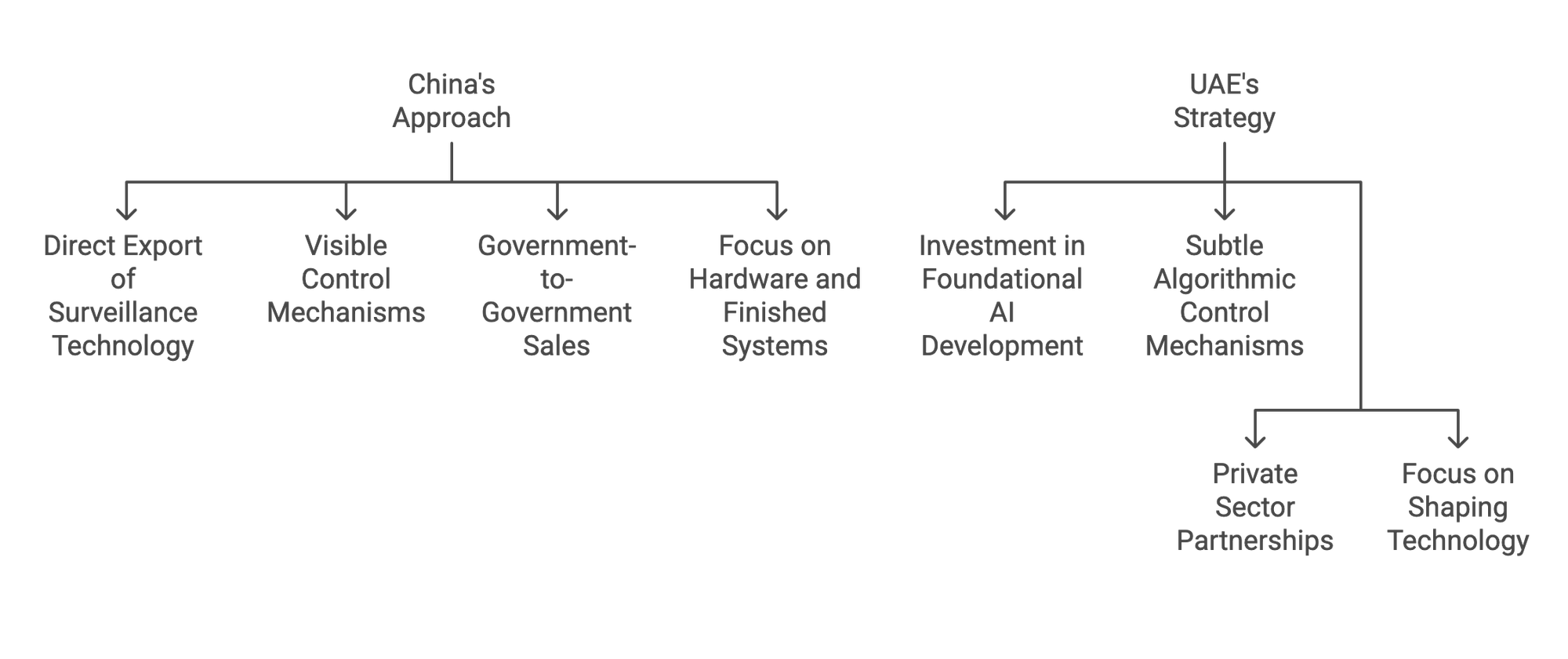

Comparative Models: UAE vs China

The UAE's approach represents a fascinating evolution beyond China's surveillance technology model, and the key difference is one of scope.

Think of it as the difference between selling fishing nets and redesigning the ocean itself. China exports the tools of surveillance, but the UAE is helping shape how digital spaces fundamentally function.

The Market Forces of Control

This creates Surveillance Market Dynamics — where the most profitable direction for AI development becomes increasingly aligned with sophisticated control mechanisms.

Consider these market effects:

- Investment Gravity
  - Massive capital flows create irresistible development incentives
  - Technology companies optimize for wealthy investors
  - Control features become "premium" capabilities

- Innovation Channeling
  - Research priorities align with surveillance capabilities
  - Technical talent flows toward control-oriented projects
  - Development resources concentrate in control systems

- Market Standardization
  - Control features become industry standards
  - Surveillance capabilities embed in basic infrastructure
  - Control mechanisms normalize across platforms

The result is a kind of technological natural selection where systems optimized for control become increasingly dominant in the marketplace. It's not just about building better surveillance tools — it's about reshaping the entire technological ecosystem to make control the default configuration.

The Chess Master's Endgame

The most pressing question isn't whether the UAE will choose American or Chinese partnerships. It's whether we can recognize and respond to the implications of what's being built before these systems of control become the default infrastructure of governance worldwide. The chess master's game isn't about balancing between great powers — it's about developing new forms of power itself.

Critical Questions for the Future

- How do democratic societies compete with systems optimized for control?

- What happens when the technologies developed in this "sandbox" begin to propagate globally?

- Can we develop alternative models of AI governance that preserve human agency?

- How do we prevent the normalization of algorithmic control systems?

The game being played in Abu Dhabi isn't just about artificial intelligence or surveillance — it's about the future of human agency in an algorithmically governed world. The moves being made today will shape the possibility space of freedom for generations to come.

Courtesy of your friendly neighborhood,

🌶️ Khayyam
