"The children now love luxury; they have bad manners, contempt for authority; they show disrespect for elders and love chatter in place of exercise. Children are now tyrants, not the servants of their households. They no longer rise when elders enter the room. They contradict their parents, chatter before company, gobble up dainties at the table, cross their legs, and tyrannize their teachers."

- Attributed to Socrates (469–399 B.C.)

When Digital Fairytales Become Real: Navigating the AI Wonderland of Childhood

Imagine a world where the boundaries of reality and imagination blend seamlessly with every swipe of a screen. For today's children, this isn't a dystopian fantasy—it's their daily reality. In an era where artificial intelligence can conjure lifelike images, videos, and text with alarming ease, young minds are traversing a digital landscape fraught with synthetic truths and artificial realities.

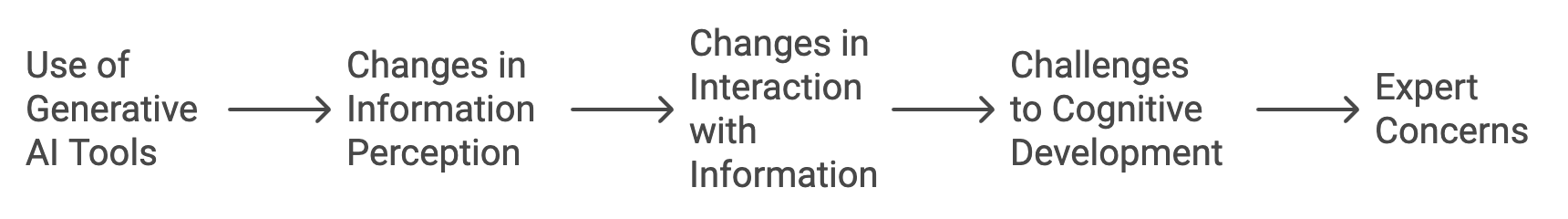

The statistics are staggering: according to a recent Ofcom report, 59% of children aged 7-17 in the UK have used generative AI tools in the past year, with that number jumping to 79% for teenagers aged 13-17. This digital immersion is reshaping how children perceive and interact with information, posing unprecedented challenges to their cognitive development.

"The rise of AI-generated content is not a technological shift, but a cognitive one, for developing minds," says Dr. Jennifer Radesky, Assistant Professor of Pediatrics at the University of Michigan. "It's reshaping how children perceive and interact with information in ways we're beginning to understand" (Radesky, 2023).

As we delve into this exploration, we'll unravel the intricate web of AI-generated content, its impact on children's developing minds, and the ripple effects that could shape our collective future. Welcome to the new frontier of childhood, where pixels paint perceptions and algorithms architect understanding.

Understanding AI-Generated False Information:

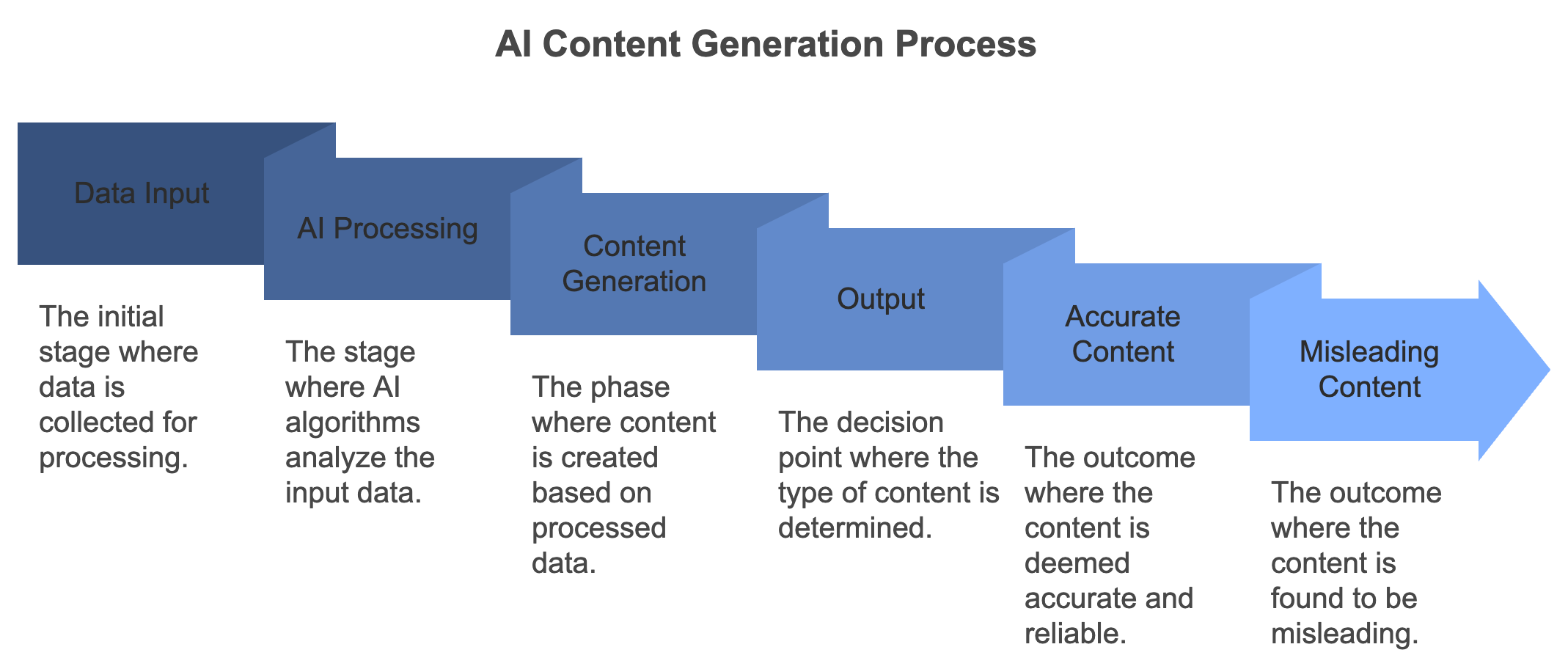

To grasp the magnitude of AI's influence, let's start with a relatable analogy. Imagine AI as a hyper-efficient kitchen staffed by robotic chefs. These chefs can whip up dishes (content) at lightning speed, using ingredients (data) from countless recipes (online information). Sometimes, however, they might mix up the salt with the sugar, creating dishes that look appetizing but taste off or, worse, are potentially harmful.

This is how AI generates false information. It processes vast amounts of data to create content that looks convincing but may be factually incorrect or misleading. What sets it apart from human-made misinformation is the revolutionary scale and speed at which this occurs.

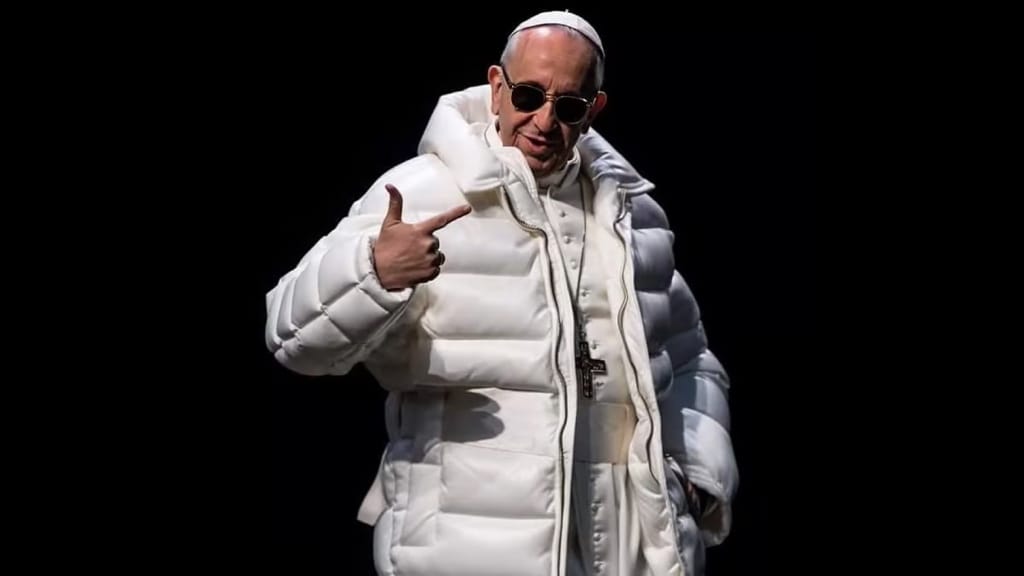

Real-world examples abound. In 2022, a teenager used AI to create a fake news website that spread misinformation about a non-existent Australian political scandal. The site garnered thousands of views before being debunked. More recently, AI-generated images of Pope Francis in a stylish white puffer jacket went viral, fooling millions into believing the religious leader had undergone a fashion makeover.

Compared to human-generated misinformation, AI-created falsehoods can be more pervasive and convincing. They can be produced at scale, tailored to specific audiences, and evolve in real-time based on user engagement. This makes them challenging for children to identify and resist.

The Cognitive Development Landscape:

To understand why children are vulnerable to AI-generated falsehoods, we must explore how young minds develop. Think of cognitive development as building a complex Lego structure. In the early years, children start with large, simple blocks (basic sensory experiences). As they grow, they add smaller, more intricate pieces (abstract thinking, logic), eventually creating a sophisticated structure (mature cognitive abilities).

Jean Piaget, a pioneering psychologist, outlined four main stages of this cognitive construction:

- Sensorimotor (0-2 years): Children learn through senses and motor actions.

- Preoperational (2-7 years): Development of language and symbolic thinking.

- Concrete Operational (7-11 years): Logical thinking about concrete objects.

- Formal Operational (11+ years): Abstract reasoning and hypothetical thinking.

"Children's cognitive structures are like scaffolding," explains Dr. Alison Gopnik, Professor of Psychology at UC Berkeley. "Each stage builds upon the last, but the structure remains flexible and vulnerable to external influences, including misinformation" (Gopnik, 2022).

Each stage represents a critical period where children's ability to process and evaluate information evolves. However, even in the later stages, the 'Lego structure' is still under construction, leaving it susceptible to the influence of misinformation.

A study in the journal "Developmental Science" found that children in the concrete operational stage (7-11 years) were more likely to believe false information when it was presented alongside true statements. This suggests how easily children's developing critical thinking skills could be overwhelmed by the sophisticated nature of AI-generated content.

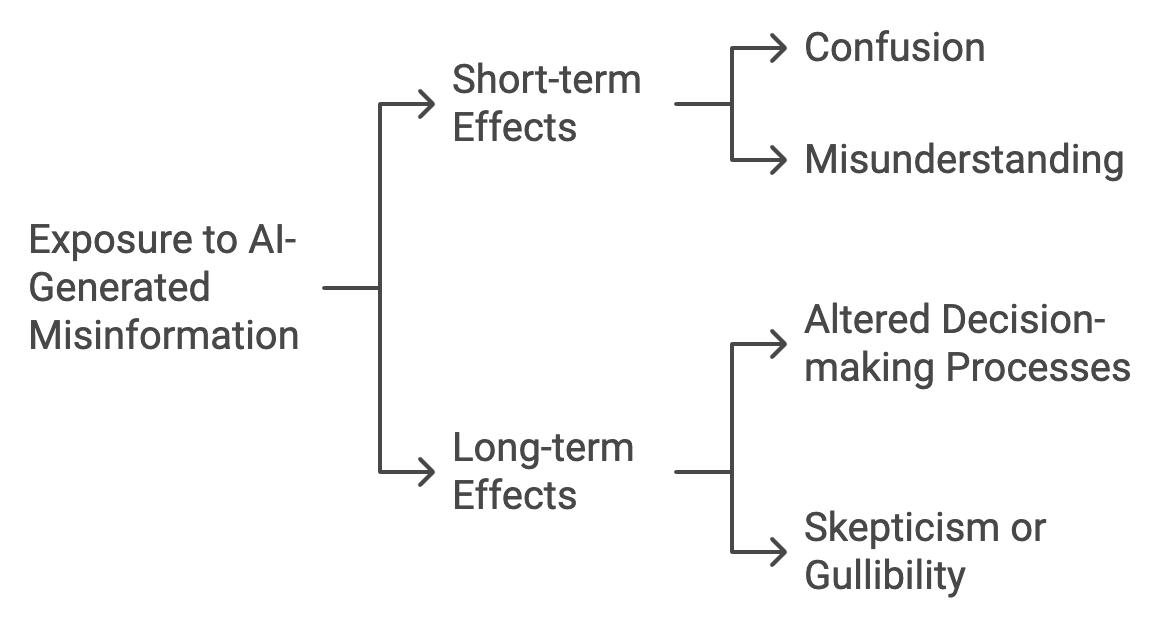

The Impact on Children's Cognition:

The collision of AI-generated falsehoods with developing minds has real-world consequences. Consider the case of 10-year-old Amelia from Boston. After watching AI-generated videos claiming that drinking sea water could give "special powers," she convinced her younger brother to join her in consuming large amounts of salt water at the beach, resulting in severe dehydration and hospitalization.

This incident illustrates how AI-generated misinformation can exploit children's natural curiosity and limited life experience. The convincing nature of AI-created content, combined with children's developing critical thinking skills, creates a perfect storm for misinformation to take root.

Studies have shown that exposure to misinformation can have lasting effects on memory and decision-making. A 2021 study in the "Journal of Experimental Child Psychology" found that children exposed to false information about a fictional event were more likely to incorporate that misinformation into their memories, even when later presented with the truth.

Long-term, this constant exposure to AI-generated falsehoods could reshape how children process information and make decisions. Dr. Emily Weinstein, a researcher at Harvard's Project Zero, warns that "repeated exposure to misinformation during formative years could lead to a generalized skepticism or, conversely, an inability to discern credible sources, impacting everything from academic performance to civic engagement."

The AI Misinformation Industry:

Behind the flood of AI-generated falsehoods lies a burgeoning industry. Tools like GPT-3, DALL-E, and others have democratized the creation of sophisticated fake content. What once required a team of skilled professionals can now be accomplished by a single individual with access to these AI tools.

"The economics of AI-generated misinformation are staggering," notes Dr. Filippo Menczer, Professor of Informatics and Computer Science at Indiana University. "The cost-benefit ratio for those spreading false information has never been more favorable, and that's a major concern" (Menczer & Hills, 2020).

The economic incentives are clear. Creating misinformation with AI is cheap and fast. A report by the Brookings Institution estimated that the cost of producing a deepfake video has dropped from about $10,000 to less than $30 in two years. This "savings" for misinformation spreaders translates to a massive cost for society in terms of trust erosion and the resources required to combat false information.
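To put the Brookings figures quoted above in proportion, here is a minimal Python sketch of the arithmetic. The helper name `cost_drop` is hypothetical, and because the report says "less than $30", the sketch treats $30 as an upper bound, so both results are lower bounds on the real drop.

```python
def cost_drop(before: float, after: float) -> tuple[float, float]:
    """Return (fold_reduction, percent_reduction) for a price falling from `before` to `after`."""
    if before <= 0 or after <= 0:
        raise ValueError("prices must be positive")
    fold = before / after                        # how many times cheaper
    percent = (before - after) / before * 100    # share of the original cost removed
    return fold, percent


# Figures as quoted in the text: about $10,000 then, under $30 now.
# Using $30 (the upper bound) makes these conservative estimates.
fold, percent = cost_drop(10_000, 30)
print(f"at least {fold:.0f}x cheaper, a drop of at least {percent:.1f}%")
```

In other words, a budget that once paid for a single deepfake video would now cover more than three hundred of them.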

Compared to traditional media, where fact-checking is an integral (and expensive) part of the process, AI-generated content can be produced and disseminated with no oversight. The New York Times, for instance, employs a team of 17 full-time fact-checkers. In contrast, an AI system can generate thousands of articles in the time it takes a human fact-checker to verify a single story.

Combating the Challenge:

As the saying goes, "fight fire with fire." In this case, AI is being used to combat AI-generated misinformation. Think of it as a high-tech game of "spot the difference." AI systems are being trained to detect subtle inconsistencies in images, videos, and text that might indicate they're artificially generated.

"We're essentially in an arms race between AI-generated misinformation and AI-powered detection tools," says Hany Farid, Professor at UC Berkeley's School of Information. "The key is to stay ahead in this race while simultaneously building human resilience through education" (Farid, 2023).

For example, the AI-powered tool "Grover," developed by the Allen Institute for Artificial Intelligence, can detect neural fake news with 92% accuracy. Similarly, companies like Adobe are developing tools that can identify manipulated images and add verifiable metadata to track a photo's origins.

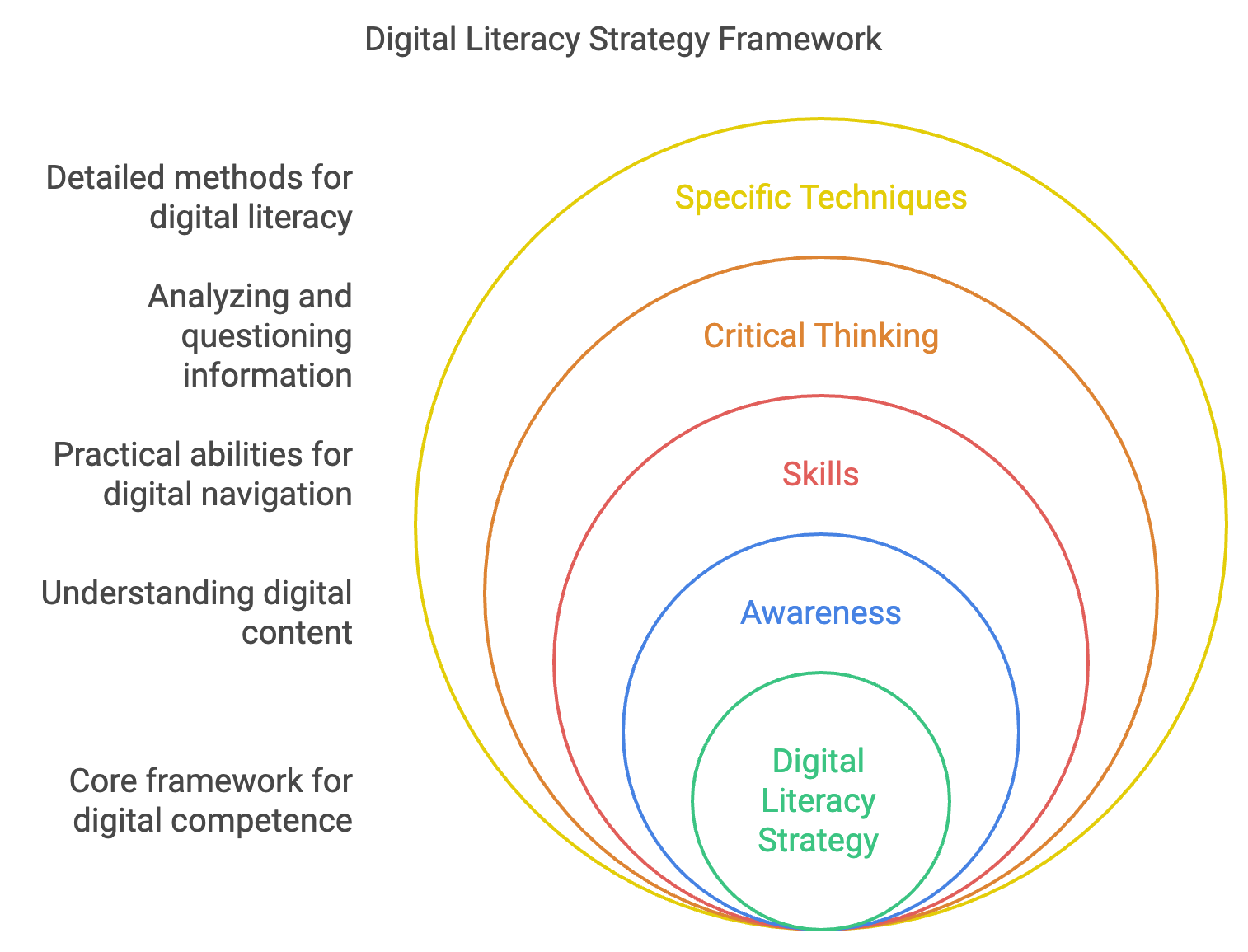

Education is another crucial front in this battle. We need to "vaccinate" children against misinformation by boosting their digital literacy and critical thinking skills. Programs like Finland's anti-fake news initiative, which starts teaching media literacy in elementary school, have shown promising results. The program uses games and exercises to teach children how to spot misleading information, understand the motivations behind it, and fact-check.

Regulatory measures are also being implemented. The European Union's Digital Services Act, for instance, requires large online platforms to assess and mitigate risks related to the dissemination of disinformation. While these measures are a step in the right direction, their effectiveness in protecting children specifically remains to be seen.

The Role of Parents and Educators:

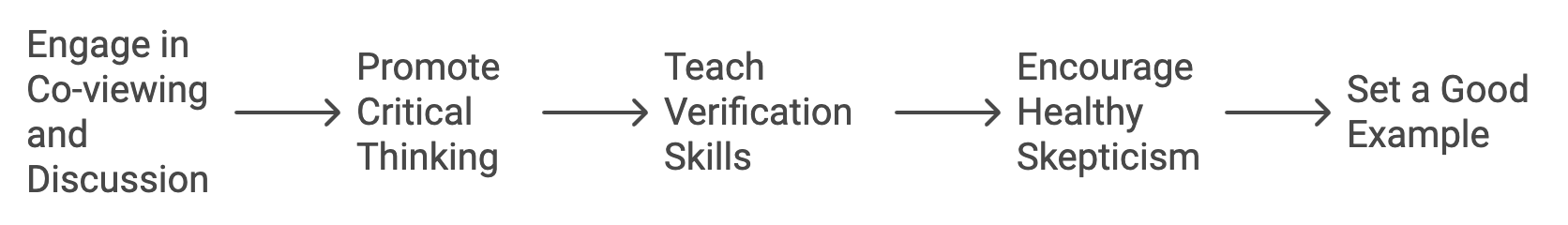

Parents and educators are on the front lines of this digital battlefield. They play a crucial role in guiding children through the complex landscape of online information. Here are strategies they can employ:

- Co-viewing and discussion: Engage with children about the content they consume online. Ask questions and encourage critical thinking.

- Teaching verification skills: Show children how to cross-reference information and check sources.

- Promoting a healthy skepticism: Encourage children to question what they see online without becoming overly cynical.

- Setting a good example: Demonstrate responsible digital behavior and information consumption.

Tools like Common Sense Media's Digital Citizenship Curriculum and Google's Be Internet Awesome program offer resources for parents and educators to teach these crucial skills.

Token Wisdom

As we navigate this brave new world of AI-generated content, the stakes for our children's cognitive development have never been higher. The pixels on their screens are not just images—they're the building blocks of their perception of reality.

Addressing this challenge requires a concerted effort from technologists, educators, policymakers, and parents. By fostering critical thinking, leveraging technology responsibly, and creating a supportive environment for digital exploration, we can help our children build the cognitive tools they need to thrive in an AI-driven world.

The future of truth is being written in code, but with the right approach, we can ensure that our children's understanding of reality remains grounded in fact, not fiction. As we continue to unravel AI's influence on children's reality, one thing is clear: the most powerful filter against misinformation is not an algorithm, but a well-prepared mind.

References:

- Farid, H. (2023). "The AI-Powered Disinformation Age." IEEE Spectrum, 60(6), 30-35.

- Gopnik, A. (2022). "The Gardener and the Carpenter: What the New Science of Child Development Tells Us About the Relationship Between Parents and Children." Farrar, Straus and Giroux.

- Menczer, F., & Hills, T. (2020). "Information Overload Helps Fake News Spread, and Social Media Knows It." Scientific American.

- Radesky, J. (2023). "Digital Media and Child Development in the AI Age." Pediatrics, 151(4).

