"All warfare is based on deception."

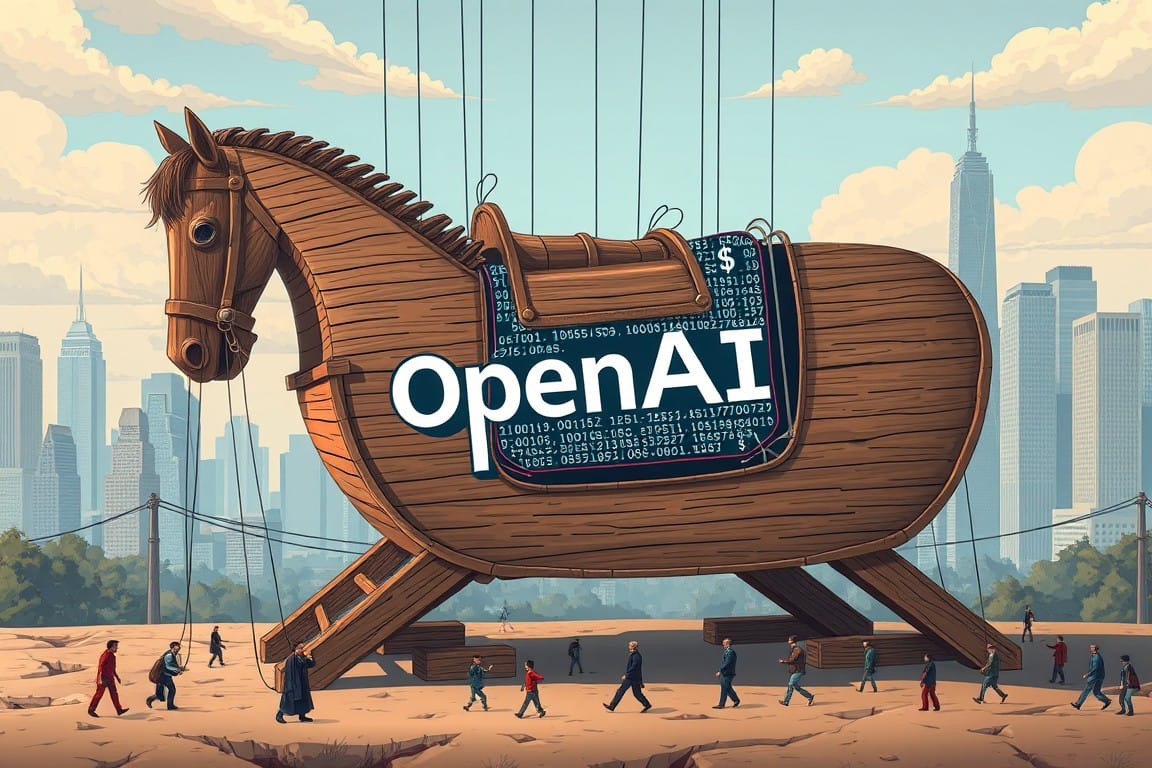

Sam's Lil Trojan Horse

In November 2023, OpenAI's leadership crisis exposed more than corporate drama. It revealed the systematic dismantling of what may be the most ambitious experiment in technical governance of our time. While media coverage fixated on Sam Altman's five-day exile and return, a more profound story was unfolding: the transformation of an idealistic nonprofit into a case study of institutional capture.

The Inherent Paradox of Defensive Institution Building

OpenAI's founding premise contained a fundamental contradiction that would ultimately prove fatal to its original mission. The organization was created to prevent the concentration of AI power, yet required precisely such concentration to achieve its objectives. This paradox manifests in three critical dimensions:

First, the scaling hypothesis of AI development creates an inexorable push toward resource consolidation. The computational requirements for advancing AI capabilities demand massive capital investment, creating what we might term a "compute-capital trap." Any organization seeking to remain competitive in AI development must either secure enormous financial resources or fall behind - there is no middle ground.

Second, the nonprofit structure, intended as a safeguard against corporate capture, actually created unique vulnerabilities. Traditional corporate governance mechanisms, while imperfect, have evolved sophisticated checks and balances through decades of practical application. The nonprofit model, particularly when applied to a technologically competitive field, lacks these refined mechanisms for balancing mission preservation with operational necessities.

Third, and perhaps most critically, the organization's defensive posture (it was created to prevent something rather than to achieve something) produced what I term "mission-drift susceptibility." When an organization's primary purpose is preventive rather than generative, it becomes particularly vulnerable to incremental compromises that can ultimately undermine its founding principles.

The Psychology of Power and Persuasion

Paul Graham's oft-quoted assessment of Sam Altman, that he could be parachuted onto an island of cannibals and return as their king, reveals more about Silicon Valley's power dynamics than its advocates might intend. What Graham frames as mere persuasive ability represents something far more concerning: the capacity to systematically dismantle institutional safeguards through calculated manipulation. What does it actually mean to achieve dominance over any group so quickly? It suggests a capability for rapid power accumulation that transcends cultural and ethical boundaries - a concerning trait when the stakes involve artificial general intelligence.

The evidence suggests something more deliberate than mere charisma. The systematic withholding of critical information from the board represents not merely strategic ambiguity but what I would term "informational warfare." The pattern that former board members have publicly described suggests a deliberately constructed information ecosystem designed to maintain power asymmetry. This isn't persuasion - it's architectural deception.

The Technical Brain Drain and AGI Race

Perhaps the most telling indicator of OpenAI's transformation is the systematic exodus of technically sophisticated founding members and early employees. This pattern suggests not merely individual career decisions but a structural rejection of the organization's evolved form by those most committed to its original mission. The departure of figures like Ilya Sutskever, John Schulman, and others represents more than typical Silicon Valley talent circulation. These individuals possessed both deep technical expertise and strong commitment to AI safety concerns.

Here's where we must confront an uncomfortable possibility: What if this isn't merely about corporate control or financial gain? The systematic dismantling of safety teams and the reported "starving" of compute resources for alignment research suggests something more concerning - a deliberate acceleration toward AGI at the expense of safety considerations.

The evidence of systematic deception is compelling:

- Concealment of OpenAI startup fund ownership

- Misrepresentation of safety protocols

- Strategic information fragmentation to board members

- Systematic removal of safety-focused personnel and internal oversight bodies

These aren't the actions of a merely persuasive leader - they represent a coordinated campaign of institutional capture. It's what I would do if I thought I wouldn't get caught because I'd performed personal favors for most of the people I needed, to protect my personhood like a prophylactic. It's sad, just like the image of a used prophylactic clinging to the side of the wastepaper basket for the staff to discover and clean up. Ew.

The Speed-Safety Tradeoff and Win-at-All-Costs Mentality

The systematic dismantling of safety protocols while maintaining public commitment to AI safety suggests an "ethical doublethink" - the ability to simultaneously pursue conflicting objectives while maintaining plausible deniability. This raises a disturbing question: Has the race for AGI created incentives so powerful that they override even explicitly designed ethical constraints? That was rhetorical.

A particularly concerning aspect is the apparent prioritization of development speed over safety. Reportedly starving alignment research of compute while maintaining aggressive development schedules amounts to a conscious decision to sacrifice alignment in favor of capabilities and speed.

The Manipulation of Organizational DNA

What's particularly fascinating is how the organization's original mission - preventing dangerous AI concentration - was gradually redefined while maintaining the appearance of continuity. This represents a kind of CRISPR-level "mission DNA manipulation": the gradual alteration of an organization's fundamental nature while preserving its surface-level characteristics.

The Microsoft investment deserves particular scrutiny as it represents a masterclass in progressive institutional capture. The structure of the deal - with its apparent safeguards and AGI access limitations - created an illusion of mission preservation while fundamentally altering the organization's incentive structure.

The Progress Paradox and Future Implications

Perhaps the most profound insight from this case study is what I'll term the "progress paradox" - the observation that technical advancement in AI seems to inherently undermine the very structures designed to ensure its safe development. This raises uncomfortable questions about the feasibility of safe AGI development under current institutional frameworks.

The implications for future governance attempts are severe:

- Traditional oversight mechanisms are fundamentally inadequate for managing advanced technical development

- Information asymmetry can be weaponized to neutralize governance structures

- Commercial incentives will inevitably corrupt safety-focused institutions unless new models are developed

- Technical expertise alone is insufficient protection against institutional capture

The Mechanics of Technical Displacement

What demands particularly rigorous examination is the process through which technical expertise was methodically marginalized within OpenAI. This wasn't merely organizational restructuring - it represents a calculated replacement of knowledge-based authority with management-based control systems.

The pattern reveals several critical mechanisms:

First, the progressive isolation of technical teams from strategic decision-making, accomplished through "administrative encapsulation." Technical experts found themselves increasingly confined to narrow operational roles while broader strategic decisions were monopolized by management layers with limited technical understanding but sophisticated political acumen.

Second, the systematic replacement of technical metrics with commercial key performance indicators (KPIs). This represents more than simple goal displacement - it fundamentally altered the epistemological framework through which the organization evaluated success. Technical concerns about alignment and safety were gradually subordinated to metrics that could be more easily understood by commercial partners and investors.

Third, the creation of parallel power structures that effectively neutralized technical authority without explicitly challenging it. Technical leaders maintained their titles and apparent authority while actual decision-making power was quietly relocated to newly created management positions with deliberately ambiguous responsibilities but clear reporting lines to commercial leadership.

The Microsoft Partnership: A Study in Institutional Capture

The Microsoft investment deserves far more critical examination than it has received, as it represents a masterclass in what I term "progressive institutional capture." The structure of the deal reveals sophisticated mechanisms for altering organizational DNA while maintaining the appearance of mission continuity.

The financial architecture of the partnership is especially revealing. The $10 billion investment, while seemingly straightforward, introduced subtle but profound alterations to OpenAI's incentive structures:

- The creation of milestone-based funding releases effectively transferred strategic priority-setting to commercial interests

- The integration of technical infrastructure created dependencies that would be progressively harder to disentangle

- The introduction of commercial success metrics gradually displaced safety-focused evaluation frameworks

What's particularly fascinating is how the partnership altered internal power dynamics without explicit governance changes. The mere presence of Microsoft's technical infrastructure created gravitational effects on research priorities, pulling resources and attention toward commercially promising directions and away from safety-focused initiatives.

The partnership also introduced what I would call "governance mimesis": the subtle replication of corporate governance patterns within the nonprofit structure. This manifested through:

- The adoption of corporate planning and reporting frameworks

- The gradual alignment of research priorities with commercial partner interests

- The introduction of commercial success metrics into technical evaluation processes

The Illusion of Distributed Control

Perhaps most insidious is how the very mechanisms designed to ensure distributed control were weaponized to enable centralized power accumulation. This represents a fascinating case study in the use of ostensibly democratic structures to enable autocratic control.

The nonprofit board structure, intended to provide broad stakeholder representation, instead created perfect conditions for information asymmetry exploitation. Board members, lacking daily operational involvement and deep technical understanding, found themselves increasingly dependent on management's selective information sharing.

The capped-profit structure, supposedly ensuring broad benefit distribution, actually created perverse incentives. The 100x return cap on first-round investors, while appearing to limit profit motivation, instead encouraged aggressive value extraction within those constraints. This transformed what was meant to be a ceiling into an implicit floor - a target to be reached as quickly as possible.

Most critically, the distributed governance model itself became a shield against accountability. The very complexity of the structure made it difficult for any single stakeholder group to maintain effective oversight, creating perfect conditions for "responsibility diffusion" - a state where everyone and no one is simultaneously responsible for maintaining the original mission.

Critical Thoughts, Conclusions and Questions

OpenAI's transformation represents more than a cautionary tale—it may be a preview of how every attempt at ethical AI governance could unravel under the combined pressures of market forces, technological acceleration, and human psychology. The systematic dismantling of its founding principles suggests that our current models of technical governance are fundamentally inadequate for managing transformative technologies. The pattern of behavior suggests not just strategic brilliance but a concerning willingness to sacrifice safety considerations in the race toward AGI.

This case study should serve as a warning: The combination of technological power, financial incentives, and human psychology may create forces that no organizational structure can reliably constrain. The tools and frameworks we've inherited from previous eras of institutional design may simply be inadequate for the challenges posed by artificial intelligence development.

The questions we must confront are profound:

- Can any organizational structure truly maintain ethical constraints in the face of such powerful incentives?

- Is the race toward AGI inherently corrupting?

- Do we need to fundamentally rethink our approach to technical governance?

We are witnessing not just the failure of one organization's governance model, but potentially the inadequacy of all current institutional frameworks for managing transformative AI development. OpenAI's trajectory suggests that traditional governance structures—whether nonprofit, corporate, or hybrid—may be fundamentally incapable of maintaining ethical constraints once certain technological thresholds are crossed. This demands not merely reform, but a complete reimagining of how we structure and control organizations developing potentially civilization-altering technologies.

Courtesy of your friendly neighborhood,

🌶️ Khayyam
