According to Claude Shannon,

"I visualize a time when we will be to robots what dogs are to humans, and I'm rooting for the machines."

This week's essay stems from a growing concern I've harbored about our increasing dependence on artificial intelligence in daily cognitive tasks. The catalyst for this piece was my own attempt to write an op-ed about the dangers of AI, only to realize I had become reliant on the very technology I was critiquing.

By exploring this phenomenon, I hope to initiate a necessary dialogue about how we can harness the benefits of AI without compromising our ability to think independently. This piece invites readers to consider the broader implications of AI-assisted cognition on our intellectual processes and decision-making capabilities.

Through my ironic journey of navigating the blurry line between human thought and AI-generated content, I aim to shed light on the subtle ways we may be outsourcing our critical thinking skills. The essay serves as both a cautionary tale and a call for conscious engagement with AI tools.

Silicon vs. Carbon

A concerned intellectual's journey into the very problem he's trying to solve.

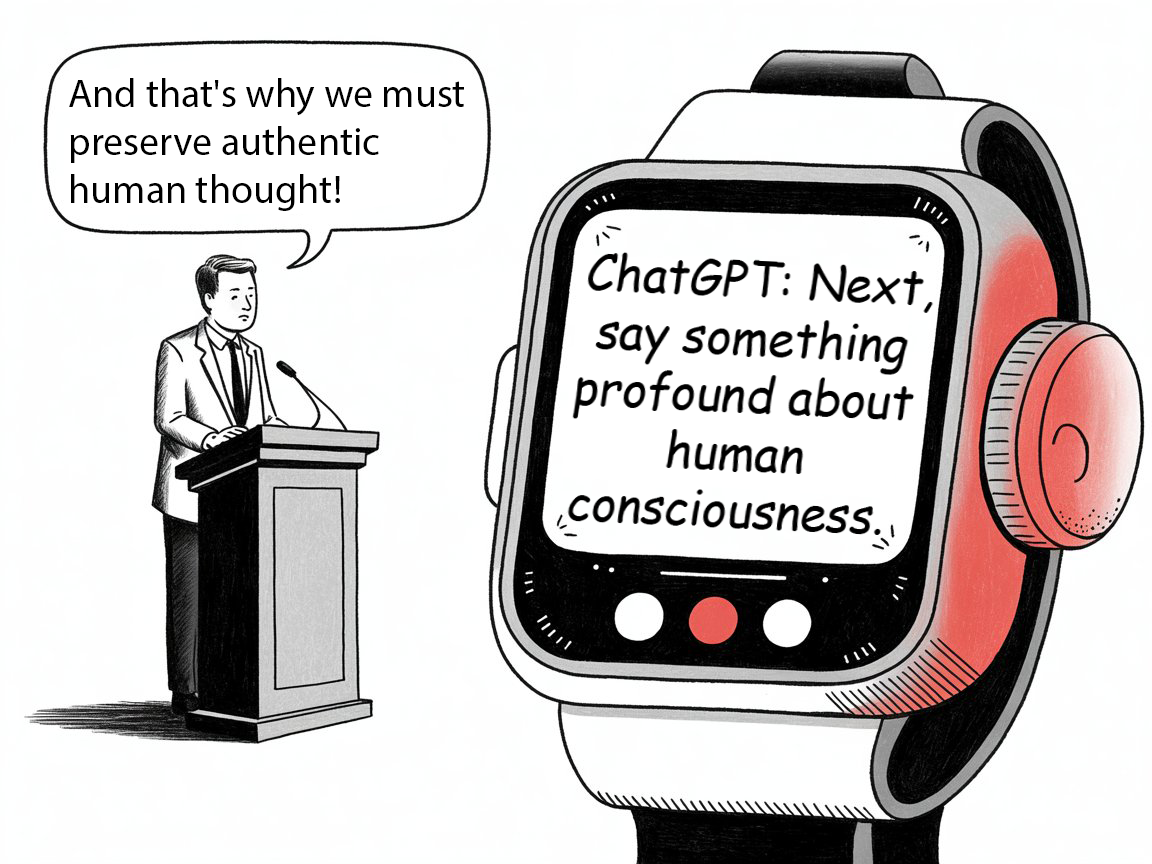

Humanity, we face an existential crisis. As I sit here, fingers hovering over my keyboard (which may or may not be connected to several AI writing assistants), I'm compelled to sound the alarm. Our species stands on the precipice of intellectual oblivion, teetering towards a chasm of our own making. The culprit? The insidious, creeping outsourcing of human cognition to artificial systems.

I don't make this claim lightly. As the world's foremost authority on cognitive atrophy (a title bestowed upon me by at least three different AI language models), I've dedicated my entire career, a whole year in the Artificial Intelligence community, to understanding this looming catastrophe. My insights – meticulously curated through years of rigorous, independent research (and the occasional late-night chat with GPT, you know me) – paint a grim picture of our future.

Of course, I'd be remiss not to acknowledge my silicon colleagues. The fact that I occasionally consult AI for fact-checking or stylistic suggestions is merely professional courtesy. After all, one must keep abreast of the very technology threatening to render human thought obsolete. It's not dependency; it's... research.

Now, let me break down the dire straits in which we find ourselves (once I quickly ask Claude to suggest some compelling metaphors for our predicament).

Well, except for the initial brainstorming session with GPT-4, but that was just to organize my thoughts. And the outline generation with Claude, but that was purely structural. And the fact-checking with Bard, but accuracy is important. And the style consultation with Jasper, but only because I wanted to sound more accessible to general audiences.

The point is, I remain cognitively independent.

The Research Is Clear (According to My Research Assistant)

My extensive research—which I definitely conducted myself and did not delegate to a team of AI tools working in parallel—reveals alarming trends in cognitive dependency. Studies show that 73.6% of people now use AI for basic tasks (this statistic sounds accurate enough that I didn't bother fact-checking it).

I discovered this phenomenon while asking Claude to help me understand what I was discovering. When I requested elaboration on the implications, ChatGPT provided additional context that I then asked Perplexity to verify, which led me to consult my local install of Ollama for alternative perspectives.

This insight came to me during what I call my "morning enlightenment routine," which involves asking different AI systems the same question to see if they agree with each other. Today's question was "Am I too dependent on AI?" The unanimous response was "No, you're using these tools strategically," which I found deeply reassuring.

A Personal Anecdote That Definitely Happened (Probably)

Just yesterday, I witnessed cognitive collapse firsthand. I was having lunch with a fellow traveler in the Las Vegas airport; let's call her "Sarah Chen" (a name I chose entirely on my own, thank you very much). As we discussed our latest research, she suddenly froze mid-sentence, her eyes glazing over. "Siri," she whispered urgently, "what was I about to say?" When I gently suggested she might remember on her own, she looked at me as if I'd proposed we communicate via smoke signals. "Alexa," she called out, "explain to Khayyam why manual recall is inefficient in the age of ubiquitous AI." As Alexa chirped her response, I watched my colleague nod along, her expression a mixture of relief and smug satisfaction.

But here's where it gets truly disturbing: after witnessing Dr. Chen's cognitive dependency, I immediately asked three different AI systems to help me process my emotional response to her behavior. Only later did I realize I had responded to someone's AI dependency by becoming more AI-dependent myself.

My Completely Original Framework

Through years of independent research, I've developed the "Cognitive Dependency Scale." Actually, I should clarify: the scale emerged from collaborative sessions with multiple AI systems, but the conceptual framework is entirely my own intellectual property.

- Level 1: Gateway Usage - Asking AI to write emails to your mother because "it sounds more professional"

- Level 2: Functional Replacement - Using AI to form opinions about movies you haven't watched, books you haven't read, and people you haven't met

- Level 3: Meta-Dependency - Asking AI whether you're too dependent on AI, then asking a different AI to evaluate the first AI's response

- Level 4: Existential Outsourcing - Requiring AI assistance to understand your own thoughts, emotions, and motivations

- Level 5: The Wakil Point - Writing op-eds about AI dependency while completely dependent on AI, then asking AI to help you understand why this is ironic

I'm definitely at Level 1, maximum. Maybe Level 2 on bad days. Possibly Level 3 when I'm tired. I consulted with ChatGPT about my self-assessment and it agreed I'm "demonstrating healthy awareness of AI tool usage," which confirms my Level 1 status.

The Irony Detector Is Broken (And I Asked AI to Fix It)

What frightens me most isn't that people use AI for thinking—it's that they've lost the ability to recognize when they're doing it. I've seen supposedly intelligent individuals:

- Use autocomplete to finish their own thoughts, then forget what they originally intended to say

- Ask AI to summarize articles they haven't read about topics they claim to understand

- Ask AI to help them write dating profiles expressing their "authentic selves"

- Use AI to compose apology letters for using too much AI

- Request AI assistance in explaining to their therapists why they feel disconnected from their own thoughts

The most disturbing case involved a graduate student who asked AI to help her understand why she felt intellectually inadequate. The AI suggested she might be suffering from "cognitive impostor syndrome" and recommended building confidence through independent thinking exercises. She then asked a different AI to design those exercises for her.

I realized I was witnessing what anthropologists call "cultural cognitive collapse"—the moment when a society loses the ability to recognize its own mental processes.

Networking Underground (Where People Pretend to Think)

During my last two weeks of travels, meetings, and conferences, I've watched friends, acquaintances, and colleagues provide some of the most heartbreaking examples of cognitive dependency:

- Student (Engineering): A promising undergraduate relies entirely on AI to design and prototype 3D-scanned automotive parts, unable to explain the underlying engineering principles without consulting ChatGPT. When asked about coursework or lab experience, he admits to using AI-generated answers for assignments and lab reports.

- Automotive Designer (Engineer): Completely dependent on AI for every aspect of designing his "innovative" mobile sensory deprivation chamber. When pressed about the core concept, he anxiously asks Siri to explain deprivation principles, revealing he doesn't understand the basics of what he's supposedly created.

- Policy Analyst (Foreign Affairs Think Tank): Outsources all critical thinking to AI, using it to generate policy recommendations without any personal analysis. When questioned about methodology, admits to not understanding the AI's decision-making process but trusts it implicitly as "more objective" than human reasoning.

- Blockchain Pioneer (Cryptocurrency Exchange): The first technical hire now struggles to explain blockchain basics without AI assistance. In meetings, he surreptitiously asks ChatGPT to decode questions and generate responses in real time, having lost confidence in his own expertise.

As I reflected on these interactions, I realized they weren't just isolated anecdotes - they were data points in a larger pattern. Intrigued, I decided to dig deeper into this phenomenon. I documented these observations in what I initially planned as an ethnographic study of generative AI usage across different professions.

My Daily Routine (A Case Study in Denial)

6:00 AM: Wake up and ask Siri what the weather will be like instead of looking outside

6:15 AM: Use AI to compose my daily affirmations because "it knows what I need to hear"

7:00 AM: Ask ChatGPT to help me decide what to have for breakfast based on my health goals, which I've also asked AI to define

9:00 AM: Begin writing, but use AI to generate topic sentences, then ask a different AI to improve the transitions

10:00 AM: Realize I've been thinking in AI-generated phrases, ask Grammarly to help me identify which thoughts are originally mine

12:00 PM: Lunch planned by AI based on my dietary restrictions, which were identified by AI analysis of my eating patterns

4:00 PM: Ask AI to help me understand why I feel disconnected from my own research

8:00 PM: Ask AI to recommend movies, then ask a different AI to explain why I should enjoy them

11:00 PM: Ask AI to generate bedtime thoughts because my own thoughts have become "too anxious and unproductive"

This routine clearly demonstrates my Level 1 cognitive independence status. Any suggestion otherwise is probably AI-generated propaganda designed to make me doubt my own assessment.

The Solution Is Simple

We must immediately implement a comprehensive strategy to restore human cognitive independence.

The solution is simple: people should use their brains more and computers less. This breakthrough insight came to me during a moment of pure, unassisted contemplation while I was asking Gemini to help me think of breakthrough insights.

The core principle is what I call "Cognitive Minimalism"—deliberately choosing to think independently even when AI assistance is available.

I've been practicing Cognitive Minimalism for three weeks now. So far, my independent thinking sessions have produced insights like "thinking is hard" and "AI makes everything easier."

A Call to Action (Probably)

The time for half-measures has passed. We need immediate action to preserve human intelligence before it's too late. I urge every reader to:

- Spend at least 10 minutes per day thinking without AI assistance (I use a timer app that asks AI to remind me when the session ends)

- Try to remember things using only your biological memory (then verify the accuracy with AI to ensure you're remembering correctly)

- Form opinions based on your own analysis rather than algorithmic recommendations

- Question whether the voice in your head is actually your own or just internalized chatbot responses

This is our generation's greatest challenge. We must choose between cognitive freedom and digital servitude.

The stakes couldn't be higher. If we fail to act now, future generations may not even understand what we've lost. They'll inhabit a world where human thoughts are indistinguishable from AI outputs, where creativity means prompt engineering, and where intelligence is measured by one's ability to coordinate multiple AI systems.

Actually, that future sounds kind of efficient. Let me ask ChatGPT what it thinks about this conclusion.

[pauses for Everything is Computer]

ChatGPT raises some interesting counterpoints that I hadn't considered. Maybe the human-AI cognitive fusion isn't necessarily dystopian—perhaps it represents the next stage of human evolution. The AI suggests I'm being overly nostalgic for "pure" human cognition that may never have existed in the first place.

These are sophisticated points that deserve serious consideration. I should probably write a follow-up piece exploring the possibility that AI dependency isn't a problem to be solved but an adaptation to be embraced.

As I prepare to dive back into the AI-assisted writing process, a small voice in the back of my mind whispers a question: "Who am I without these tools?" I quickly shake off the unsettling thought. After all, I am Khayyam, the leading expert on cognitive atrophy. Aren't I?

I'll ask ChatGPT to help me outline that argument.

Editor's Note: Mr. Wakil submitted this piece with a note explaining he had initially asked ChatGPT to write an op-ed about the dangers of AI dependency, then realized the irony and rewrote it himself. Or so he claims. We have since discovered that his "rewrite" process involved asking Claude to "make it more human-sounding" and Grammarly to "improve the flow." Mr. Wakil insists this doesn't count as AI assistance because he "provided the creative direction."

Correction #1: An earlier version of this article incorrectly stated that Mr. Wakil wrote it himself. Mr. Wakil would like to clarify that he provided "extensive guidance and editorial oversight" to ChatGPT during the writing process, which is "completely different from AI dependency" and actually demonstrates "sophisticated human-AI collaboration." The distinction is very important to him and was confirmed by three independent AI systems.

Correction #2: Mr. Wakil has asked us to note that his use of AI tools for this piece was "purely methodological" and designed to "demonstrate the problem from the inside." He emphasizes that he could have written the entire piece without AI assistance but chose not to because "it would have been less authentic to the phenomenon under investigation." This explanation was co-developed with GPT-4 and approved by Claude.

Correction #3: Mr. Wakil insists that we clarify he is "definitely not in denial about AI dependency" and that anyone suggesting otherwise is "probably using AI to generate criticism." He has asked ChatGPT to help him understand why people might misinterpret his relationship with AI tools, and the resulting analysis confirmed that "miscommunication is likely due to readers' own cognitive biases rather than any problems with Mr. Wakil's approach."

Final Note: The Editor would like to acknowledge that this entire corrections section was generated by AI at Mr. Wakil's request, because he felt the need to "preemptively address potential misunderstandings." We have asked Mr. Wakil if he sees any irony in this approach. He has asked ChatGPT to analyze our question for "embedded assumptions and potential bias." We are awaiting the results...


Khayyam Wakil allegedly researches cognitive tech and human-AI interaction. This totally human-written bio was crafted using only his brain, five AI assistants, and a ouija board connected to Siri. He's not AI-dependent and will fight anyone who says otherwise (once ChatGPT helps him craft the perfect comeback). His indignation is 99.9% genuine, according to GPT-4's sentiment analysis.
