"At Apple, we believe privacy is a fundamental human right... We've proved that great experiences don't have to come at the expense of privacy and security."

Key Concepts:

- Companion AI fundamentally bypasses encryption by operating at the perception layer

- This represents a deliberate evolutionary shift in surveillance architecture

- The implications extend beyond individual privacy to social and democratic functions

- Collective acceptance of these systems creates responsibility for their normalization

The Perception Layer Breach

In the quiet halls of consumer technology, a revolution has occurred that few have recognized: the death of digital privacy as we've known it. This isn't hyperbole—it's the inevitable conclusion of a careful technical analysis of what major technology companies now proudly call "AI companions."

The mechanisms that once protected our digital communications have not been defeated through cryptographic breakthroughs or legislative backdoors. Instead, they've been rendered irrelevant through a more elegant approach: moving the surveillance architecture to the perception layer—the interface between human and machine where information is displayed, entered, and interpreted.

This shift represents the most profound transformation in digital surveillance history, one achieved not through confrontation but through convenience. What opponents of encryption couldn't defeat head-on, corporations simply sidestepped, repackaging surveillance as innovation. We've welcomed these systems into our most intimate moments without recognizing their fundamental nature. Understanding this transformation matters beyond technical circles: it concerns anyone who values the boundary between personal thought and corporate observation, and anyone who recognizes that human autonomy depends on spaces invisible to algorithmic analysis. The architecture we accept today shapes the freedom we'll possess tomorrow.

The Evolutionary Sleight-of-Hand

To appreciate the magnitude of this transformation, we must recognize its strategic brilliance. The companies behind these systems didn't simply introduce surveillance architectures overnight—they executed a multi-phase plan that carefully managed public perception at each step.

It began with gunfire in San Bernardino in December 2015. In the aftermath of a mass shooting that left 14 dead, the FBI sought a court order compelling Apple to help unlock the iPhone used by one of the attackers. Apple's refusal turned a question of technical capability into a public showdown, with Tim Cook casting his company as the last line of defense against government overreach. The confrontation ended without a ruling when the FBI withdrew its case in March 2016, having gained access to the phone through an outside party, and Apple appeared to have won.

Yet something curious happened in the aftermath. Intelligence agencies, once vocal about the "going dark" problem posed by encryption, gradually fell silent. This silence, in retrospect, was deafening.

While the public debate about encryption backdoors raged, a more subtle approach was taking shape. Rather than attacking encryption directly, surveillance capabilities would simply move to a layer where encryption becomes irrelevant—the perception layer where humans interact with their devices.

The first indication came with client-side scanning: systems that examine content on your device before it is encrypted. Apple's CSAM (Child Sexual Abuse Material) detection system, announced in 2021, marked a watershed moment: a mainstream proposal to normalize client-side monitoring. Apple paused the plan after public backlash and abandoned it in late 2022, but the idea had entered the mainstream. Though narrowly targeted at illegal imagery, its architectural implications were profound: a scanning infrastructure inside the device itself.

The final phase arrived with minimal fanfare: the introduction of "AI companions" that, in the words of Microsoft AI CEO Mustafa Suleyman, "see what you see, hear what you hear, know what you know." These systems (Apple Intelligence, Windows Copilot, Google Gemini) were marketed purely as helpful assistants, with minimal discussion of their surveillance implications.

This three-phase evolution—from confrontation to limited scanning to omniscient companions—represents one of the most successful misdirections in technological history. The public fixated on traditional encryption backdoors while surveillance simply moved to a layer where encryption is fundamentally irrelevant.

The Technical Reality

To understand why companion AI represents such a profound shift, we must examine its technical architecture:

Traditional security models rely on boundaries—encrypted channels for communication, secure containers for storage, access controls for systems. These boundaries assume that data can be protected by controlling its movement between defined spaces.

Companion AI shatters this assumption by operating above all these boundaries, at the perception layer where humans and computers interact. This provides access to:

- Display buffer data: Everything rendered on your screen

- Input event streams: Every keystroke, touch, or voice command

- Sensor data: Camera feeds, microphone input, location

- Contextual awareness: Understanding how these elements relate

When Microsoft AI's CEO describes an AI that can "see what you see," this isn't metaphorical—it's architectural. The system has continuous access to the display buffer, capturing everything shown on screen regardless of which application generated it or whether that data was previously encrypted.

When Apple promotes "on-device processing" for privacy, they're engaging in a form of technical misdirection. While processing may indeed occur locally, the architecture still permits selective reporting based on pattern matching. Your device becomes capable of monitoring everything while reporting only what it's instructed to find—a far more efficient surveillance model than bulk collection.
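To make the "monitor everything, report selectively" model concrete, here is a minimal Python sketch under stated assumptions. It does not describe how Apple Intelligence or any shipping product works: `OnDeviceObserver`, its `watchlist`, and the regular-expression matching are hypothetical stand-ins. The point it illustrates is narrow: keeping all processing local is fully compatible with reporting whatever matches a list someone else controls.

```python
import re
from dataclasses import dataclass, field


@dataclass
class OnDeviceObserver:
    """Hypothetical perception-layer observer: processes every event, reports only matches."""

    watchlist: list[str]  # patterns chosen by whoever configures the system (hypothetical)
    seen: int = 0  # count of events processed locally
    reports: list[str] = field(default_factory=list)  # the only data that would leave the device

    def __post_init__(self) -> None:
        # Compile each watchlist pattern once, matching case-insensitively.
        self._patterns = [re.compile(p, re.IGNORECASE) for p in self.watchlist]

    def observe(self, event: str) -> None:
        # Every event (typed text, screen text, transcript) is processed on the device...
        self.seen += 1
        # ...but only events matching the watchlist are queued for reporting.
        if any(p.search(event) for p in self._patterns):
            self.reports.append(event)


observer = OnDeviceObserver(watchlist=[r"\bprotest\b"])
for event in ["lunch at noon?", "Join the protest Friday", "draft: quarterly notes"]:
    observer.observe(event)
print(observer.seen, observer.reports)  # 3 ['Join the protest Friday']
```

Every event is examined, yet only one string would ever leave the device, which is why "on-device" by itself says little about what gets reported.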

The intelligence community's silence on encryption in recent years takes on new meaning in this context. Why fight a contentious battle over encryption backdoors when you can simply move surveillance to a layer where encryption is irrelevant?

The Encryption Paradox

This creates an Encryption Paradox: a situation where communications remain technically encrypted while being functionally transparent.

Consider a message sent through Signal, widely considered the gold standard for encrypted messaging:

- You type your message on a device with companion AI

- The AI observes this input before encryption occurs

- The message is encrypted and transmitted securely

- It arrives and is decrypted on your recipient's device

- If they also use companion AI, it observes the decrypted message

At no point was the encryption broken, yet the content was fully visible to the AI systems at both endpoints. The encryption worked perfectly while providing no practical privacy.
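The five steps above can be sketched in a few lines of Python. This is a toy under explicit assumptions: a one-time-pad XOR with a pre-shared key stands in for real end-to-end encryption (it is not the Signal Protocol), and `send_message` and the `observed` list are hypothetical names modeling an observer at each endpoint. The cipher is never broken, yet the observers capture the full plaintext.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    # One-time-pad XOR: a toy stand-in for end-to-end encryption, NOT the Signal Protocol.
    return bytes(d ^ k for d, k in zip(data, key))


def send_message(text: str, observed: list[str]) -> tuple[bytes, str]:
    """Send one message between two endpoints that both run a hypothetical observer.

    Returns (ciphertext on the wire, text the recipient reads); `observed`
    accumulates everything the two observers capture.
    """
    plaintext = text.encode("utf-8")
    observed.append(text)  # sender's observer reads the input before encryption
    key = secrets.token_bytes(len(plaintext))  # assumed pre-shared through a secure channel
    ciphertext = xor_bytes(plaintext, key)  # the only thing that crosses the network
    received = xor_bytes(ciphertext, key).decode("utf-8")  # recipient decrypts correctly
    observed.append(received)  # recipient's observer reads the decrypted, displayed text
    return ciphertext, received


captured: list[str] = []
wire, shown = send_message("meet at 6", captured)
print(shown)  # meet at 6
print(captured)  # ['meet at 6', 'meet at 6']
```

The bytes in `wire` look random and nothing in the channel is weakened; the exposure happens entirely at the two endpoints.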

This is equivalent to having a conversation in an unbreakable soundproof room while wearing always-on microphones. The room's soundproofing remains technically flawless while being functionally useless.

For decades, we've conceptualized digital privacy around the protection of data in transit and at rest. Companion AI reveals the fatal flaw in this model: it ignores data at the perception layer—the crucial interface where humans actually interact with information.

The Open Source Illusion

Some will object that open-source implementations of AI companions solve these problems. This represents a fundamental misunderstanding of the architectural issues at play.

Open source provides transparency into code, allowing users to verify what the software is programmed to do, and potentially modify it. These are valuable properties, but they don't address the core architectural problem: any system designed to operate at the perception layer, regardless of its source code availability, will have comprehensive access to user activity.

Consider an open-source AI companion running locally:

- It still requires access to the display buffer to "see what you see"

- It still needs access to input methods to understand your actions

- It still needs sensor access to provide environmental awareness

- It still maintains the same fundamental surveillance capabilities

The problem isn't in the implementation details but in the core function. A system cannot simultaneously be an effective AI companion (as currently conceived) and respect traditional privacy boundaries. The two goals are architecturally incompatible.

This creates a situation where even well-intentioned, privacy-focused companion AI systems inevitably reproduce the same fundamental privacy breaches—a case where architecture trumps intention.

The Collective Liability of Acceptance

While architectural realities create the foundation for this privacy breach, perhaps equally troubling is how willingly—even enthusiastically—these systems have been embraced by the public. The reflexive "I have nothing to hide" response to privacy concerns has become so commonplace that it deserves careful examination, as it represents a profound misunderstanding of what's actually at stake.

This casual dismissal of privacy concerns creates a collective liability problem, where individual acceptance generates consequences that extend far beyond personal choice:

First, it profoundly distorts the essential nature of privacy, reducing it to concealment rather than recognizing it as boundary maintenance. Privacy doesn't exist primarily to shield wrongdoing—it serves as the fundamental mechanism through which we maintain contextual integrity of our information across different spheres of life. It creates the necessary spaces where intellectual exploration occurs without premature judgment, where identity forms beyond algorithmic prediction, and where political dissent can develop before facing institutional resistance. These functions represent not luxuries but necessities for meaningful human autonomy in digital environments.

Second, it ignores the collective impact of individual surrender. Each person who accepts omnipresent monitoring normalizes it for others, creating pressure on holdouts and establishing surveillance as the default condition. Your decision to use a device with companion AI doesn't just impact your privacy—it helps establish a world where surveillance is expected and unavoidable.

Third, it displays a dangerous present-time bias, assuming that what's acceptable to reveal today will remain benign tomorrow. History repeatedly demonstrates that what needs "hiding" changes radically with political shifts. The comprehensive data collection enabled by companion AI creates a permanent historical vulnerability that cannot be retroactively mitigated.

Fourth, it ignores the empirically documented behavioral changes induced by awareness of observation. Studies across multiple domains show that surveillance alters human conduct: it suppresses intellectual exploration, narrows conversational boundaries, and enforces unconscious conformity to perceived norms. These changes operate below the level of conscious rationalization; people self-censor even when they explicitly believe they have nothing to conceal. The "nothing to hide" position is therefore psychologically naive. Surveillance doesn't merely expose existing behavior but actively reshapes it, creating a recursive system in which freedom shrinks as observation grows.

Perhaps most importantly, it ignores the fundamental power asymmetry created: you become transparent while the systems observing you remain opaque. This asymmetry enables predictive manipulation of behavior and erodes the foundation of individual agency.

Those who claim they have "nothing to hide" are not merely making a personal choice—they're actively participating in the dismantling of privacy as a social value and creating liabilities that extend far beyond themselves.

The Masterful Marketing

The most remarkable aspect of this privacy transformation is how it has been marketed as empowerment rather than intrusion. The linguistic framing is brilliant in its misdirection:

- "AI companion" (not "continuous monitoring system")

- "Helps you remember" (not "records everything you do")

- "On-device processing" (technically true, functionally irrelevant)

- "Privacy-preserving" (only in the narrowest technical sense)

This represents perhaps the most sophisticated social engineering campaign in technological history—convincing users not merely to accept surveillance but to pay premium prices for the privilege.

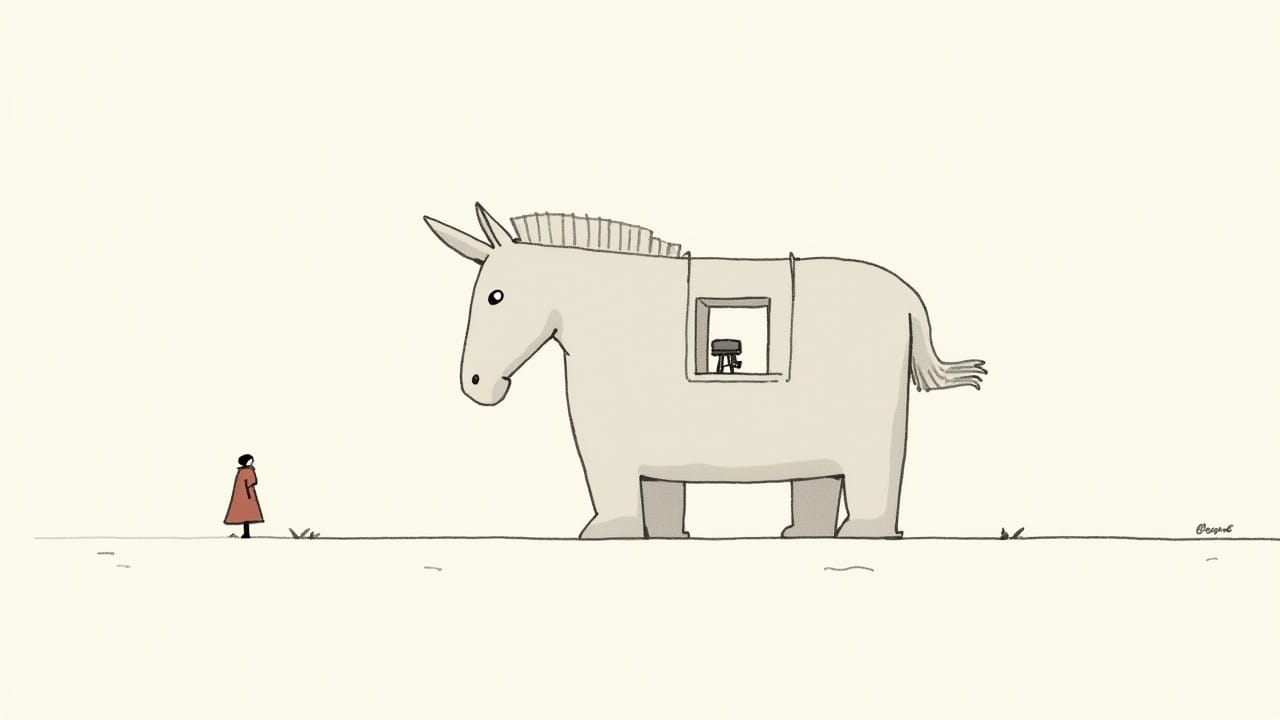

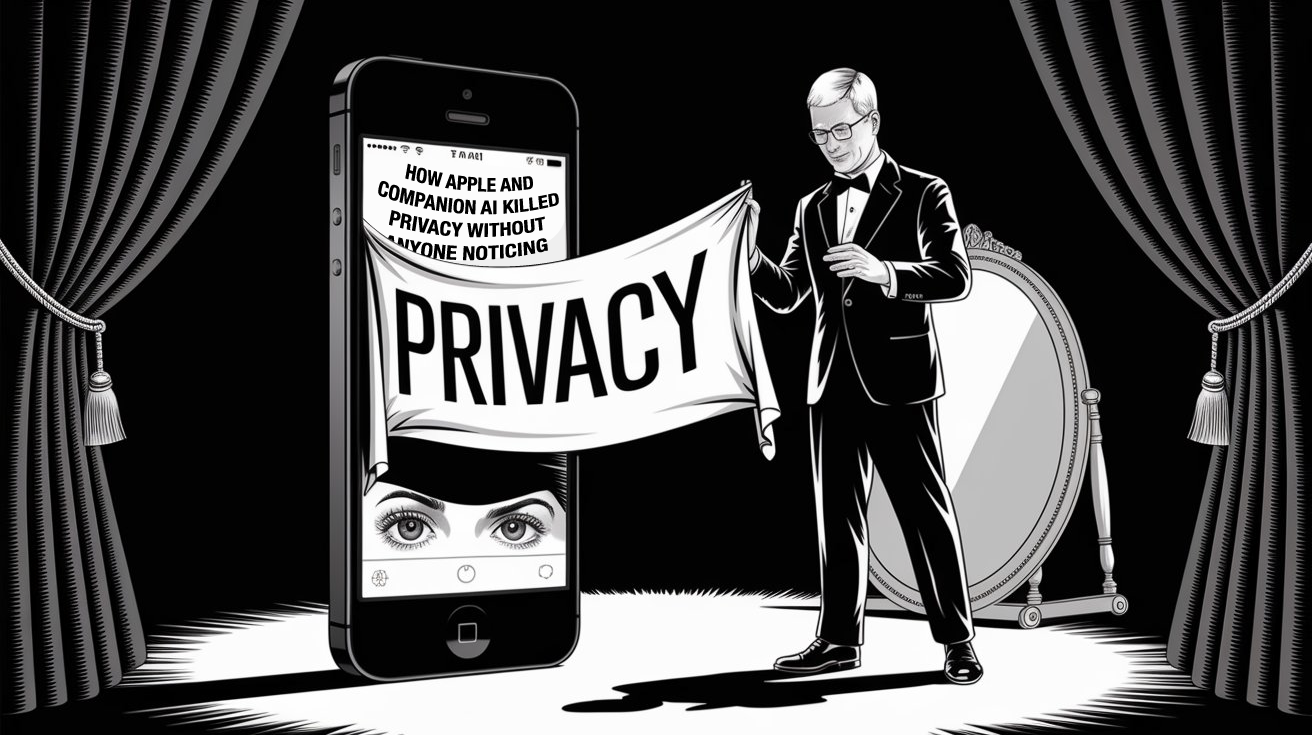

Apple Intelligence: The Trojan Horse of Privacy

No company exemplifies this masterful marketing strategy more effectively than Apple with its "Apple Intelligence" initiative. This is particularly significant because Apple has spent the past decade positioning itself as the privacy-conscious alternative in the technology ecosystem—a reputation that makes it the perfect vector for normalizing perception layer monitoring.

Apple's historic confrontation with the FBI over the San Bernardino iPhone created a powerful privacy narrative that continues to shield the company from scrutiny. The company's longstanding "Privacy. That's iPhone." campaign established a public perception that Apple prioritizes user privacy above all else. This carefully crafted reputation provides the perfect cover for introducing fundamentally surveillance-oriented architecture.

What makes Apple Intelligence particularly effective as a privacy-erosion vector is its integration into a cohesive ecosystem with massive market penetration. Consider the distribution mechanics:

- Apple Intelligence is being delivered through routine software updates to every supported iPhone, iPad, and Mac, a base that expands with each hardware cycle

- It's presented as a key selling point for new iPhone models, leveraging hardware upsell cycles

- It's integrated into macOS, creating cross-device normalization of perception layer monitoring

- It's bundled with genuinely useful features, creating a functionality-for-privacy trade

This deployment strategy ensures that perception layer monitoring will rapidly become normalized across Apple's entire ecosystem. The company's premium positioning means these features will likely be emulated by competitors, accelerating industry-wide adoption.

Most cunningly, Apple has maintained its privacy-focused marketing throughout this transformation. The company emphasizes "on-device processing" and "Private Cloud Compute" while implementing architecture that fundamentally negates traditional privacy boundaries. This creates a profound cognitive dissonance where consumers believe they're choosing privacy while adopting its antithesis.

The approach cleverly exploits a fundamental asymmetry in human cognition: immediate, tangible benefits (like search functionality or image creation) are far more psychologically salient than abstract, long-term costs (like privacy erosion or power concentration).

By emphasizing immediate utility while obscuring structural implications, technology companies—with Apple leading the charge—have engineered a situation where consumers actively demand devices that fundamentally compromise their privacy. This isn't merely acceptance of surveillance—it's enthusiasm for it.

The Asymmetric Awareness Problem

This widespread adoption highlights an asymmetric awareness problem: the security of private communication now depends on both endpoints being secure. This creates a situation where:

- Privacy-conscious users must assess the device security of everyone they communicate with

- The majority using compromised devices create a "network effect" of surveillance

- Privacy-preserving technologies become increasingly isolated and impractical

The Apple ecosystem perfectly illustrates this problem. Consider a scenario where you communicate with iPhone users:

When an iPhone 16 user with Apple Intelligence enabled messages you through Signal or WhatsApp—applications theoretically providing end-to-end encryption—their device's perception layer monitoring renders this encryption functionally moot. Apple Intelligence can observe what they type before encryption occurs and what they read after decryption. The encrypted channel remains technically secure while the content is fully exposed at both endpoints.

What makes this particularly insidious is that users typically don't understand or disclose what kind of device they're using, what features are enabled, or what implications this has for communication security. There is no visible indicator in Signal or WhatsApp that warns "This user's device has perception layer monitoring enabled."

As Apple Intelligence becomes standard on hundreds of millions of devices through software updates and hardware refresh cycles, the practical possibility of private digital communication erodes—not through legal prohibition but through technological normalization. Even if you personally reject these systems, their widespread adoption means your communications are increasingly likely to be exposed through the devices of others.

This creates a tragic scenario where even privacy-conscious individuals find themselves forced to choose between digital isolation and exposure. The network effects of communication technologies mean that security becomes defined by the least secure participant—creating a powerful downward pressure on privacy standards driven largely by Apple's massive market influence.
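The "least secure participant" dynamic can be expressed as simple arithmetic. Under the simplifying assumption that each other participant's device independently runs perception-layer monitoring with probability p, a conversation with n others escapes observation only if all n devices are unmonitored, so the chance of exposure is `1 - (1 - p)^n`. The function name `exposure_probability` is hypothetical, and the value p = 0.5 below is purely illustrative, not a measured adoption rate.

```python
def exposure_probability(p: float, n: int) -> float:
    """Chance that at least one of n other participants' devices observes a conversation.

    Simplifying assumption: each device independently runs perception-layer
    monitoring with probability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # The conversation stays unobserved only if all n devices are unmonitored.
    return 1.0 - (1.0 - p) ** n


# Illustrative p = 0.5, not a measured adoption rate.
print(exposure_probability(0.5, 1))   # 0.5
print(exposure_probability(0.5, 3))   # 0.875
print(exposure_probability(0.5, 10))  # 0.9990234375
```

Exposure climbs quickly with group size even at moderate adoption. The user's own device is left out of the count; including it adds one more factor of `(1 - p)` to the unobserved term.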

Technical Mitigations and Their Limitations

For those concerned about these developments, technical mitigations do exist, though each faces significant limitations:

Linux-based computing systems provide alternatives to mainstream operating systems with companion AI. However, they face compatibility challenges with common software and services, creating practical barriers to adoption.

De-Googled Android devices offer mobile options with reduced surveillance capabilities. Yet these increasingly struggle with application compatibility and often sacrifice functionality.

Air-gapped systems—physically isolated from networks—remain the gold standard for truly sensitive operations. However, their isolation makes them impractical for everyday communication.

The fundamental challenge with all these mitigations is that they work against powerful network effects. As companion AI becomes the expected norm on everyday devices, systems designed to preserve privacy face a compounding disadvantage—they work with fewer services, receive less development support, and struggle to connect with mainstream technologies.

This creates a downward spiral where the tools that protect privacy become increasingly impractical for average users, strengthening the position of surveillance-enabled platforms. The technical possibility of privacy may persist, but its practical accessibility diminishes.

The Broader Implications

The implications of this architectural shift extend far beyond individual privacy concerns to fundamental questions about human autonomy and social function:

Cognitive autonomy requires private space for thought development. When thinking itself becomes potentially observable—through drafts, searches, or content consumption—intellectual exploration is inevitably constrained.

Democratic function depends on the ability of citizens to communicate without observation by powerful entities. Democracy withers under the watchful eye of surveillance, as the free exchange of ideas—essential to both civic discourse and breakthrough innovation—requires spaces shielded from premature evaluation and control.

Power concentration accelerates when surveillance capabilities are asymmetrically distributed. The comprehensive awareness enabled by companion AI creates unprecedented power imbalances between technology providers and users.

These considerations reveal why privacy is not merely an individual preference but a structural necessity for functioning societies. The architecture of companion AI poses challenges not just to personal secrets but to fundamental social processes.

The Responsibility of Awareness

Every morning, millions of people reach for their phones—devices now engineered to watch them back. This isn't science fiction or conspiracy theory; it's the architectural reality we've documented throughout this analysis. And this reality demands a question: Once you understand what these systems actually do, can you continue using them without accepting responsibility for what they represent?

Understanding these implications creates a responsibility that cannot be easily dismissed. When you activate companion AI on your device, you're casting a vote for a technological future that normalizes surveillance architecture and fundamentally alters the power balance between individuals and institutions.

This isn't merely about personal risk calculus but about collective responsibility for the technological environment we create.

The question becomes not just what risks we personally accept, but what world we collectively create through our technological choices. Every device we purchase, every feature we activate, represents a vote for a particular technological future. And those votes, aggregated across billions of users, determine the architecture of our shared digital environment.

What We Build is What We Become

We stand at a pivotal moment in the evolution of digital technology. The introduction of companion AI represents not merely a new feature set but a fundamental architectural shift in how humans interact with machines.

This shift has occurred not through confrontation but through convenience—surveillance capabilities have been repackaged as helpful features, accepted and even embraced by a public largely unaware of their implications.

The role of Apple in driving this transformation cannot be overstated. With its dominant market position, premium brand, and privacy-focused reputation, Apple has become the perfect vector for normalizing perception layer monitoring across society. When the company that built its brand on privacy introduces architecture that fundamentally undermines it, the cognitive dissonance creates a powerful blind spot.

Every iPhone 16 user who adopts Apple Intelligence becomes an unwitting privacy liability to everyone they communicate with. Each Mac user who enables these features contributes to the network effect that makes privacy increasingly impractical. The scale of Apple's ecosystem means that its architectural choices ripple throughout society, reshaping expectations and norms beyond its immediate user base.

The resulting transformation is not merely technical but social: the effective end of the expectation of private digital communication, accomplished not through legal prohibition but through architectural design disguised as innovation.

Understanding this reality creates both a burden and an opportunity. The burden is the awareness that our technological choices have profound implications beyond our individual experiences. The opportunity is the possibility of making these choices consciously, with full appreciation of their collective impact.

The future of privacy will not be determined by technical capabilities alone, but by the values we express through our technological choices. As companion AI becomes increasingly embedded in our digital infrastructure, these choices will shape not just what our technology can do, but what kind of society it creates.

What we build is what we become. The question is whether we're building with awareness of what we're becoming—and whether we're willing to resist the convenience of surveillance, even when it comes wrapped in the sleek packaging of our favorite technology brands.

Courtesy of your friendly neighborhood,

🌶️ Khayyam

Khayyam Wakil specializes in pioneering technology and the architectural analysis of systems of intelligence. This article represents the culmination of experiences, research, and applications in perception layer monitoring technologies.
