"The reasonable man adapts himself to the world; the unreasonable one persists in trying to adapt the world to himself. Therefore all progress depends on the unreasonable man."

— According to George Bernard Shaw, who I doubt ever imagined his words about unreasonable men would someday validate someone who calls himself an AI cowboy

They Theorize. We Build.

When you're knee-deep in building the future, validation starts to feel like old news you forgot to read...

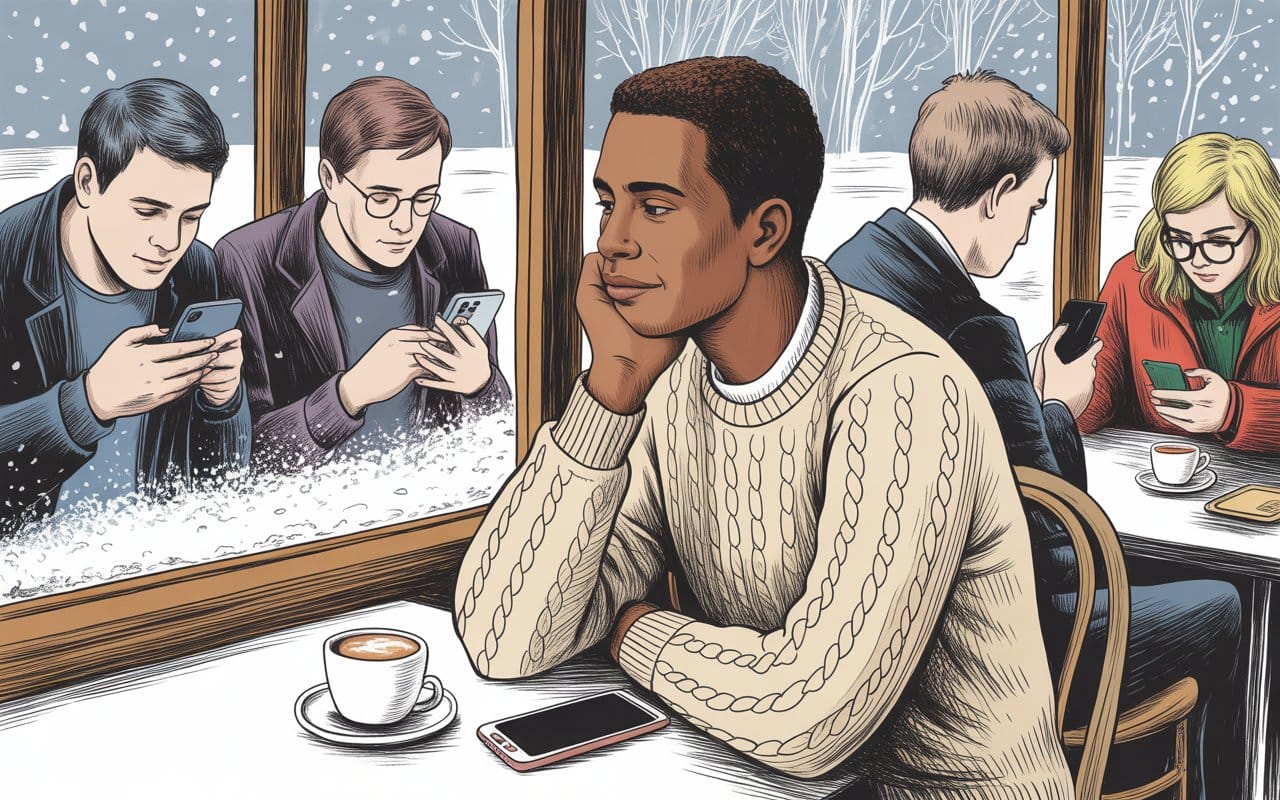

It's 6:47 PM on December 3rd, 2025.

My phone's been vibrating nonstop for three hours.

"Did you see Google's paper?"

"They're citing YOUR research"

"You called this out way back when!"

I'm in Calgary. Watching the snow fall. I moved here to start something. The last place I ever thought I'd end up, honestly. After months of preparation, research, isolation, and validation, we were finally gearing up to announce the launch of CacheCow. Next week, in fact, CacheCow becomes real to anyone who cares to look.

But tonight people keep sending me this PDF like it's breaking news.

"Nested Learning: The Illusion of Deep Learning Architecture"

Google Research. 50 pages. Published December 2nd.

I open it. Start skimming through it.

- Section 1.1: Multi-timescale processing. Delta waves. Gamma oscillations. The same neuroscience I cited in October.

- Section 4: Optimization as associative memory. The framework from my thesis.

- Section 6: Transformers can't learn continually, can't consolidate memory, can't adapt without catastrophic forgetting.

I actually laughed out loud.

How... cute.

They're just now catching up to neuroscience from the 1960s.

We've been calling our approach "Pancakes and Syrup" for months now. I didn't jump up cheering or scream vindication. I just sat there watching snowflakes gather against the window—a window, I should add, covered in dry-erase marker scribbles that would make John Nash proud. Like nothing unusual had happened at all.

Because deep down, I already knew I was right.

Not because I'm smart. Because I've been building the solution for years while everyone else was still arguing about the problem.

The Thing About Being Ahead

There's a specific feeling that comes with being months—no, make that years—ahead of the conversation.

It's not satisfaction. It's not vindication.

It's this strange, unsettling calm.

When your phone explodes with "Google just validated your thesis!" you don't celebrate. You just think: Yeah... I mean, of course they did.

And maybe, quietly: Where were you when I needed you to believe?

October 31st, I published "Attention Is All You Had: The 10% Delusion."

Core thesis: We built trillion-dollar AI on text transcripts—10% of human communication. Threw away the embodied, temporal, multi-sensory 90%. And then had the nerve to wonder why our models can't actually learn like we do.

Response was... polite.

A few retweets. "Interesting perspective" comments. One VC asked if I was okay. Another literally said:

"Why fix what prints money?"

(I'm still trying to wrap my head around that one.)

Some AI researchers in my mentions: "You're fundamentally misunderstanding how transformers work. More scaling will solve this problem."

I wasn't misunderstanding a damn thing. I was about 8 years ahead of them.

Well, more like 3 years of practical work and then another 5 years of falling down rabbit holes, emerging occasionally with something that might be genius or might be madness.

And I didn't argue. I didn't defend. I didn't explain.

I just… kept going.

What I Was Actually Doing

While people politely dismissed my essay, I was building.

CacheCow. Livestock monitoring tags that implement—well, I can't tell you exactly what they implement. Not yet.

The devices predict livestock disease outbreaks 24-48 hours early. They learn from actual outcomes in physical reality—whether cows get sick or stay healthy. They maintain state across power cycles. They coordinate across mesh networks.

Google spent 50 pages theorizing about what I've been building for three years.

When that PDF hit my inbox, I didn't flinch.

They were just catching up to what my hands already knew and the architecture explained.

The October Essay Nobody Believed

Let me take you back.

Before the validation. Before the investors started calling.

Let me tell you about October 31st.

I wrote about how we built AI on shadows. Text transcripts. 2D images. Information stripped twice-over from embodied reality.

Computer vision flattens 3D to 2D. Discretizes continuous time into frames. Removes noise. Forces everything into tidy labels.

NLP extracts text. Discards prosody, microexpressions, environmental context, embodiment.

Then we train on this twice-reduced information and act surprised when our models can't handle messy physical reality.

I called current AI "orchid intelligence": exquisite, impressive, completely dependent on pristine greenhouse conditions. Cleaned data. Labeled taxonomies. Controlled distributions. Careful pruning.

Real intelligence? That's a weed. It grows in cracks. Thrives in chaos. Learns from actually falling down and getting back up.

Human children learn physics by dropping things. They learn by experiencing real consequences.

Our AI systems learn by reading cleaned text.

October response: polite indifference. One investor asked if I needed a break.

I didn't need a break. Even had a Kit Kat. I needed people to stop talking so I could build.

The Zoom Call That Mattered

But there was one moment that changed everything.

A little over five weeks ago now. October 27th, to be exact.

The guy who literally invented ███. One of the people who built the infrastructure the internet runs on.

We show him CacheCow's architecture. The mesh. The multi-timescale processing. The consequence-based learning.

He's quiet for a long moment.

My stomach tightens. Here comes another polite dismissal.

Then:

"This is the only sensible approach I've seen for distributed edge intelligence. I want in."

That night, I actually slept through the night for the first time in weeks.

That's the moment my hands stopped shaking when I pitched.

Not a Google paper. Not academic citations. Not Twitter threads.

Someone who actually built foundational internet infrastructure looking at your work and saying: Yeah. This is how you actually solve this problem.

When Google's paper landed five weeks later, I felt nothing but certainty.

Because I already knew I had the answer.

From someone whose judgment actually matters.

Note the date of the Zoom call and the October 31st essay.

What Google Actually Admitted

Let me translate their paper from academic to English:

They say:

"Nested Learning—multi-level optimization with different update frequencies."

What they actually mean:

"We built transformers wrong. Everything updates at the same rate. This is stupid. We need to fix it."

They say:

"Models exhibit anterograde amnesia."

Translation:

"Transformers can't form new persistent memories. They're architecturally broken for continual learning."

Every single section is basically an admission of failure.

Transformers are computationally shallow. ✓

Single update frequency is neurologically absurd. ✓

No memory consolidation. ✓
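
For anyone who wants "different update frequencies" in concrete terms, here is a toy sketch in Python. It is an illustration only, under my own assumptions: it is not code from Google's paper, and it is not what CacheCow runs. The function name `run_two_timescales` and the parameters `slow_every`, `fast_lr`, and `slow_lr` are made up for this example. A fast state moves toward every new reading; a slow state consolidates from the fast one only every few steps.

```python
def run_two_timescales(stream, slow_every=4, fast_lr=0.5, slow_lr=0.5):
    """Track a signal with two states that update at different frequencies.

    All names and default values here are hypothetical, chosen for illustration.
    """
    fast = 0.0          # updated on every observation
    slow = 0.0          # updated once every `slow_every` observations
    slow_updates = 0
    for step, x in enumerate(stream, start=1):
        # Fast level: move toward the newest observation immediately.
        fast += fast_lr * (x - fast)
        # Slow level: consolidate from the fast level at a lower frequency.
        if step % slow_every == 0:
            slow += slow_lr * (fast - slow)
            slow_updates += 1
    return fast, slow, slow_updates


# Eight identical readings: the fast state adapts quickly, the slow one lags.
fast, slow, n = run_two_timescales([1.0] * 8)
print(fast, slow, n)
```

On a steady stream, the fast state gets close to the signal almost right away, while the slow state has only been touched twice in eight steps. That gap between levels is the whole point of updating at more than one rate.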

I laid all of this out on October 31st.

While people told me I was misunderstanding transformers.

I wasn't misunderstanding anything. I was three months ahead of Google. Google.

The Thing About Building in Silence

There's a particular loneliness to seeing what others don't.

While Silicon Valley argued about whether transformers are sufficient, I was doing something else.

I was busy proving they're not sufficient.

Not with essays. Not with arguments. Not with more theory.

With actual hardware you can touch.

CacheCow implements what we’ve identified as Coordinated Edge Intelligence—multi-timescale processing in $75 devices that run for two years on a battery.

Google needs 50 pages of equations to explain their theory. I need a cow tag and a mesh network to prove mine works.

Concrete beats abstract. Always has.

When their paper landed, people expected me to react. To celebrate. To say "I told you so."

I just shrugged.

What they couldn't see was the weight of those months—the doubt, the funding meetings where investors looked at me like I was speaking Greek, the nights wondering if I was actually wrong.

You don't celebrate when someone finally catches up to what you figured out months ago.

You just think: Yeah... obviously. Glad you're finally getting it.

Validation from Google doesn't really change anything for us.

Because I already validated it the only way that matters: with silicon, vetted by people who build at global scale.

The Validation That Wasn't

Here's what actually happened when Google's paper landed: nothing changed. The work was already done. The devices already built. The validation I needed comes from silicon that works, not academic papers.

The real story isn't that I was right—it's that being right too early is indistinguishable from being wrong until the market catches up. Google's paper doesn't validate my thesis. My hardware is inching toward it.

Something Is Happening…

Now, we come out of stealth.

CacheCow.

Coordinated Edge Intelligence for livestock monitoring.

Two federal mandates coming down the pipeline. 106 million cattle in the US alone. A $7.95 billion market that's about to become mandatory.

But that's just the story we tell people who need a business case.

The real story is much more interesting.

We proved Coordinated Edge Intelligence works: genuine distributed intelligence with edge devices, multi-timescale processing, consequence-based learning, and continuous state evolution.

Using less computational power than it takes to send a text message.

While OpenAI burns billions. While Google publishes what are essentially apology papers dressed up as breakthrough research. While VCs debate timelines.

We're moving into manufacturing and shipping real products.

Soon, theory becomes practice. Words become products. The abstract becomes concrete.

And I'm not excited. I'm not vindicated. I'm not even satisfied.

I'm terrified. Hopeful. Exhausted. Ready.

I'm already thinking about the next problem.

Because when you’ve found the skeleton key, validation feels like noise, and closed doors don’t matter.

The Conclusion I Don't Need

I was right on October 31st. And that tells me I was on the right path three years ago.

Google admitted I was right on December 2nd. Just confirmation of what the silicon already proved. And when we announce CacheCow, people will act surprised.

They'll ask: "How did you know this would work?"

Some people will quietly wonder why they didn't listen sooner.

I'll smile politely.

Not mentioning the maxed-out credit cards, the girlfriend who couldn't handle the uncertainty, the mom who passed away while I was deep in this work and who somehow, in her final weeks, gave me the inspiration to solve the problem AND the permission I needed to keep going.

Not mentioning how two of the last people I had and trusted stripped away nearly everything during that time: resources, confidence, sense of self. How I went from having what felt like everything to having nothing but this one stubborn idea. How learning to trust again turned out to be harder than solving the technical problems.

Not mentioning the one person who believed in me when I couldn't believe in myself—someone I still worry about disappointing, even though we're sitting on something that might actually change how we approach these problems.

And yes, solve some of my own problems too.

Because chances are, I'm already three months ahead of whatever question they're about to ask.

- Architecture > Scale.

- Silicon > Theory.

- Building > Arguing.

It’s always been this way.

The industry chases trends. I chase truth. The difference matters.

And when you see CacheCow preventing disease outbreaks while costing half what incumbents charge, don't act shocked.

I told you this was coming. And if you've known me this past year, there's not much else I talk about.

No one was listening back then.

But now folks are. And that's enough.

About the Author

Khayyam Wakil built his first neural network in high school, mostly to avoid thinking about the endless Saskatchewan winters. Twenty years later, he's still building systems that outlast the people who try to break them.

He designs infrastructure that scales, maps failure modes before they happen, and solves coordination problems that everyone else calls impossible. His breakthrough in distributed edge intelligence emerged from watching grocery store logistics, studying how biological systems actually work, and the particular clarity that comes from growing up somewhere that tests everything.

Khayyam writes about technology and society from the perspective of someone who's seen efficiency gains become human losses, watched promising startups implode from preventable failures, and learned that the most important innovations happen in the margins while everyone else argues about trends.

CacheCow represents three years of building in silence and seven years of thinking through problems nobody else wanted to acknowledge. This essay is 23% AI assistance, 77% stubborn human insight—a ratio he tracks because it matters more than people think.

Contact: sendtoknowware@protonmail.com

"Token Wisdom" - Weekly deep dives into the future of intelligence: https://tokenwisdom.ghost.io

#edgeintelligence #distributedAI #AgTech #buildinginstealth #architectureoverscale #meshnetworks #embodiedAI #siliconvstheory #coordinatededgeintelligence #attentionisallyouhad #longread | 🧮⚡ | #tokenwisdom #thelessyouknow 🌈✨
