As machine learning models become more complex, it becomes harder to understand how they arrive at their predictions, and harder still to identify the source of an error when they go wrong. At the same time, recent advances in machine learning, particularly deep learning, have produced models that perform better on complex tasks. This combination has given rise to the need for explainable AI: the process of translating what a machine learning model has learned into something humans can understand. This article explores the importance of explainable AI and what it means to explain a machine learning model.

The Importance of Explainable AI

At the core of the importance of explainable AI is the issue of trust. If we cannot trust a system, it becomes challenging to use it effectively. For machine learning models, trust rests on knowing that the model makes correct decisions under correct assumptions. Explainability also helps us understand what went wrong when the model fails, giving us more control over the model's performance. We can then choose either to improve the model based on that knowledge or to stop using it for specific tasks.

Not only that, but when we deploy machine learning models at a large scale, they are used by millions of people, making it even more critical that the predictions being made are in line with our expectations. When we don't understand how these models work on the inside, it becomes challenging to trust them. Explainability helps us gain more control over the model and ensures that it operates as intended.

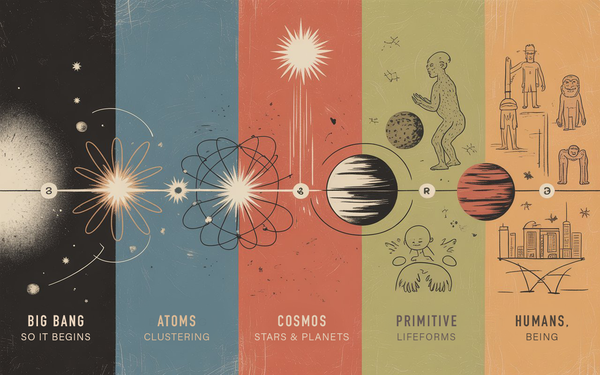

Basic Science and Explainability

Explainability also plays a crucial role in basic science, helping us gain new insights into the tasks themselves. For instance, if we can explain how models like AlphaGo perform so much better at the game Go than humans, we might be able to develop new strategies that humans could execute. Similarly, if we can explain a model trained for cancer diagnostics, we might gain more insight into how cancer grows and spreads within our bodies, leading to new treatments. Therefore, explainable AI can provide us with new perspectives on tasks and help us make strides in research and development.

What It Means to Explain a Machine Learning Model

While we have established the importance of explainable AI, we must also understand what it means to explain a machine learning model. At its core, explainability refers to the ability to understand and interpret how a machine learning model works. It is an essential factor in building trust in the model, ensuring that it works as intended, and avoiding the consequences of its failure. However, achieving explainability can be challenging, particularly in deep learning models that have many layers and parameters.

Moreover, there are different types of explainability, and which one is most appropriate for a particular task depends on the model and its intended use. Some models may require global explanations, which refer to an overall understanding of the model, while others may require local explanations, which focus on specific instances. Furthermore, different methods can be used to achieve explainability, such as feature importance, attention mechanisms, and saliency maps.
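The difference between global and local explanations can be illustrated with a minimal sketch. It assumes a hypothetical linear scoring model whose feature names, weights, and bias are invented for illustration, and it assumes the features are on comparable scales so that weights can be compared directly. For such a model, the weights act as a global explanation, while the per-feature products of weight and value act as a local explanation for one instance.

```python
# Hypothetical linear model: the feature names, weights, and bias are invented.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = 0.1

def predict(x):
    """Score one instance: bias plus the weighted sum of its features."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)

def global_explanation():
    """Rank features by absolute weight: how the model behaves overall."""
    return sorted(WEIGHTS, key=lambda name: abs(WEIGHTS[name]), reverse=True)

def local_explanation(x):
    """Per-feature contribution to the score of one specific instance."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}

# One made-up instance (values assumed to be pre-scaled).
applicant = {"income": 2.0, "debt": 0.5, "years_employed": 4.0}
contributions = local_explanation(applicant)

print("Global ranking:", global_explanation())
print("Local contributions:", contributions)
print("Score:", predict(applicant))
```

In this sketch the global ranking puts debt first, yet for this particular applicant the largest contribution comes from years of employment. The two kinds of explanation answer different questions and can disagree.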

Challenges in Achieving Explainable AI

Despite the numerous benefits of explainable AI, there are still significant challenges in achieving it. One of the primary obstacles is the complexity of modern machine learning models. The models used today are so large and complex that it can be challenging to understand how they arrived at their predictions. This issue is exacerbated by the fact that many machine learning models use deep learning techniques, which can make them even more opaque.

Explaining an ML model in terms people can understand is a challenge in its own right. Complex models such as neural networks and random forests are often more accurate but harder to interpret; simpler models such as decision trees and linear models are easier to interpret but often less accurate. Researchers must strike a balance between model performance and explainability.

To address these challenges, researchers have been developing methods for explainable AI that can balance interpretability and model performance. One approach is to use surrogate models that approximate the behavior of a complex model and provide a simplified explanation of how the model makes decisions. Another approach is to use visualizations and feature importance measures to help users understand how the model is weighting different inputs.
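The surrogate idea can be sketched in a few lines. Everything here is an assumption made for illustration: `black_box` is a stand-in for an opaque model, the two inputs are treated as scaled income and debt, and the surrogate is a one-split decision stump fitted by exhaustive search. The key point is that the surrogate is trained on the black box's outputs rather than on ground-truth labels, and its agreement with the black box (often called fidelity) indicates how far the simplified explanation can be trusted.

```python
import random

def black_box(x):
    """Stand-in for an opaque model: returns 1 (approve) or 0 (reject)."""
    income, debt = x
    return 1 if (income * income - 0.1 * debt) > 0.25 else 0

def fit_stump(samples, labels):
    """Fit a one-split decision stump (the surrogate) by exhaustive search."""
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            for above in (0, 1):
                # The stump predicts `above` when the feature exceeds the
                # threshold, and the opposite class otherwise.
                correct = sum(
                    (above if s[feature] > threshold else 1 - above) == y
                    for s, y in zip(samples, labels)
                )
                if best is None or correct > best[0]:
                    best = (correct, feature, threshold, above)
    correct, feature, threshold, above = best
    return feature, threshold, above, correct / len(samples)

rng = random.Random(0)
samples = [(rng.random(), rng.random()) for _ in range(200)]
# Labels come from the black box itself, not from ground truth.
labels = [black_box(s) for s in samples]

feature, threshold, above, fidelity = fit_stump(samples, labels)
print(f"if feature[{feature}] > {threshold:.2f} predict {above}, else {1 - above}")
print(f"fidelity to the black box: {fidelity:.2f}")
```

A single rule is easy to read but only approximates the original model. A deeper tree would usually raise fidelity at the cost of a longer explanation, which is the same trade-off between interpretability and performance described above.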

In addition to these technical challenges, there are also ethical and social implications of explainable AI. One issue is the potential for bias in the data used to train the model, which can lead to biased decisions that are difficult to detect without transparency and accountability in the model. Another issue is the potential for misuse of explainable AI to justify decisions that are discriminatory or unethical.

Current Approaches to Achieving Explainable AI

Researchers have developed several approaches to achieving explainable AI. One approach is to use simpler models that are easier to understand. For example, decision trees are a type of machine learning model that is highly interpretable. Another approach is to supplement a model with tools that support better explanations, such as feature importance scores or partial dependence plots.
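One common way to compute a feature importance score is permutation importance: shuffle one feature column and measure how much the model's error grows. The sketch below assumes a hypothetical model and synthetic data, both invented for illustration. It is one possible scoring method, not the only one.

```python
import random

def model(row):
    """Hypothetical fitted model: leans on feature 0, barely on feature 1, ignores feature 2."""
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, feature, rng):
    """Increase in error after shuffling one feature column,
    which breaks that feature's link to the target."""
    baseline = mean_squared_error(rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + (v,) + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_squared_error(shuffled, targets) - baseline

rng = random.Random(0)
rows = [(rng.random(), rng.random(), rng.random()) for _ in range(500)]
# Synthetic targets that the hypothetical model reproduces exactly.
targets = [3.0 * r[0] + 0.5 * r[1] for r in rows]

importances = [permutation_importance(rows, targets, f, rng) for f in range(3)]
for f, score in enumerate(importances):
    print(f"feature {f}: importance {score:.4f}")
```

Shuffling the feature the model relies on most produces the largest increase in error, while shuffling a feature the model ignores changes nothing. In practice the shuffle is usually repeated several times and averaged to reduce noise.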

Another approach is to develop methods for interpreting the outputs of more complex models. For example, researchers have developed methods for visualizing the decision boundaries of deep neural networks, which can provide insights into how the model is making its predictions. Researchers have also developed methods for using natural language processing to generate explanations for the predictions made by a model.

Researchers have also developed methods for generating "counterfactual explanations." These explanations show how the model's predictions would have changed if certain inputs were different. For example, if a model predicted that a loan applicant would default on a loan, a counterfactual explanation might show how the prediction would have changed if the applicant had a higher income or a longer credit history.
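The loan example can be sketched as a simple search. The model below is hypothetical: its coefficients, the 0.5 decision threshold, and the assumption that income is measured in thousands are all invented for illustration. The search raises income in fixed steps, holding credit history constant, until the predicted outcome flips.

```python
import math

def default_probability(income, credit_years):
    """Hypothetical loan model: the coefficients are invented for illustration."""
    z = 2.0 - 0.06 * income - 0.3 * credit_years
    return 1.0 / (1.0 + math.exp(-z))

def predicts_default(income, credit_years):
    """Classify as 'default' when the predicted probability is at least 0.5."""
    return default_probability(income, credit_years) >= 0.5

def income_counterfactual(income, credit_years, step=1.0, max_income=500.0):
    """Smallest income, searched in increments of `step`, at which the
    prediction flips to 'no default' with credit history held fixed."""
    candidate = income
    while candidate <= max_income:
        if not predicts_default(candidate, credit_years):
            return candidate
        candidate += step
    return None  # no counterfactual found within the search range

# A made-up applicant; income is assumed to be in thousands.
applicant = {"income": 20.0, "credit_years": 2.0}
needed = income_counterfactual(**applicant)

print("Predicted to default:", predicts_default(**applicant))
print("Income at which the prediction flips:", needed)
```

Searching over a single feature keeps the sketch short. Practical counterfactual methods typically search over several features at once and prefer the change that is smallest or most feasible for the person affected.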

The Importance of Explainability in Real-World Applications

Explainable AI is crucial for many real-world applications. For example, in the healthcare industry, machine learning models are used to diagnose diseases and develop treatment plans. In these applications, it is essential to understand how the model is making its predictions so that doctors can make informed decisions about patient care.

In the financial industry, machine learning models are used to make investment decisions and predict market trends. These models are often used to make decisions that can have a significant impact on people's financial well-being. Therefore, it is essential to understand how the models are making their predictions so that people can make informed decisions about their investments.

In the criminal justice system, machine learning models are used to make decisions about bail, parole, and sentencing. These decisions can have a significant impact on people's lives, and it is essential to ensure that they are made fairly and transparently. Therefore, it is crucial to understand how the models are making their predictions so that the decisions can be reviewed and challenged if necessary.

Token Wisdom

Explainable AI is an essential area of research that has the potential to significantly improve our ability to understand and trust machine learning models. By providing meaningful explanations of how models arrive at their predictions, we can gain new insights into the tasks being performed and make more informed decisions. However, achieving explainable AI is not without its challenges. Researchers must find ways to balance accuracy and explainability.

Interpretability and explainable AI are important areas of research that have the potential to increase trust in and understanding of machine learning models, while also uncovering new insights and improving model performance. As researchers continue to develop new methods for achieving explainability, it will be important to balance technical and ethical considerations to ensure that these models are both interpretable and trustworthy.
