"Progress is impossible without change, and those who cannot change their minds cannot change anything."

— said George Bernard Shaw, who never had to contemplate a world where progress meant teaching machines to replace not just our labor but our judgment, our creativity, and our purpose. His typewriter never learned to write like him, improving with each word he composed, ready to make him obsolete.

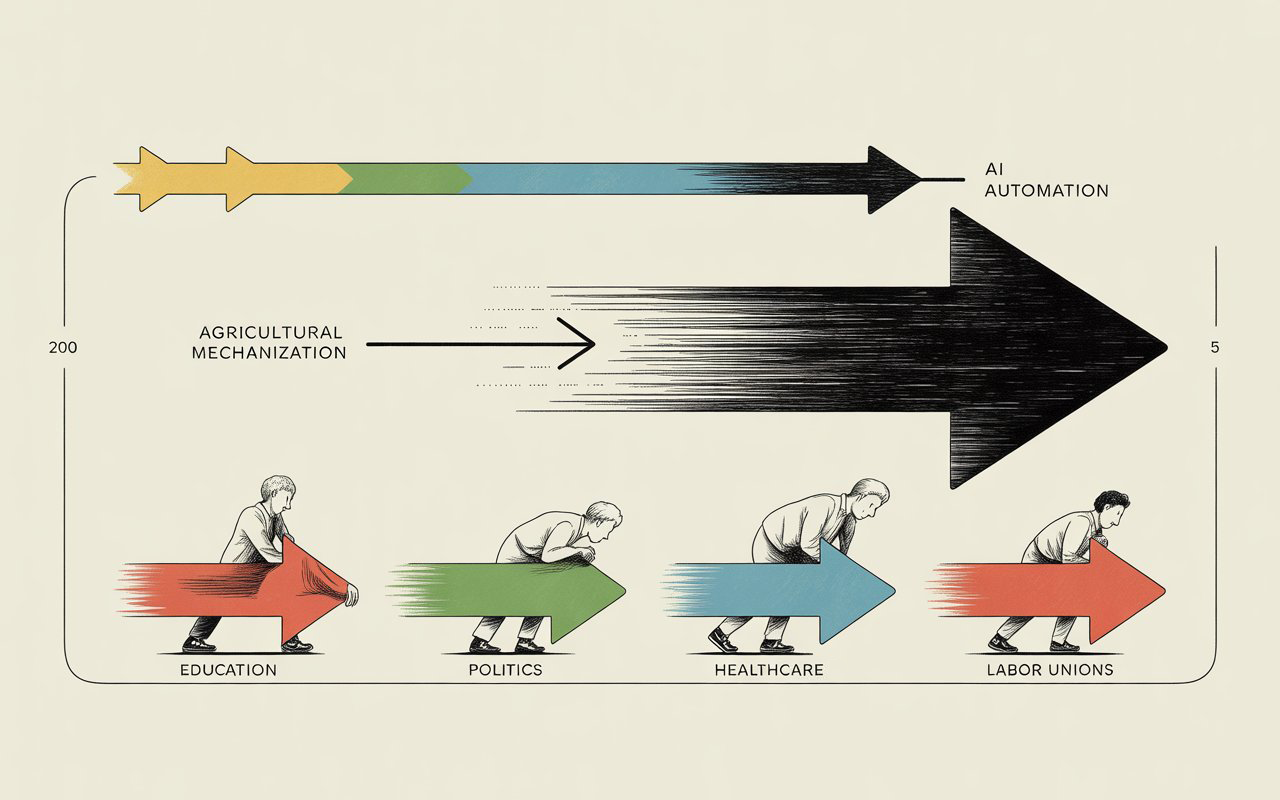

What took 200 years for farmers will take 5 for us. We're next.

Progress moves at the speed of Moore's Law now. Unfortunately, so does displacement.

I'm a systems architect. Pattern recognition is what I do. I see around corners. I map feedback loops. I identify failure modes before they cascade.

And I'm telling you: we're screwed. The mechanism is already in motion.

Not because I have some special insight into AI that you don't. But because I've seen this exact pattern before. Different technology. Same mechanism. Same outcome.

Agriculture got mechanized over 200 years. Farms went from employing dozens to employing four. Wealth concentrated in equipment manufacturers. Rural communities hollowed out. Expertise went extinct.

We called it progress.

Now run the same loop on knowledge work—only compressed.

It's happening to the educated professional class, and the timeline isn't centuries. It's single digits.

I'm not a farmer. Neither was my grandfather. But I grew up in Saskatchewan. I dated farmers' daughters. I heard the stories about communities that used to work, knowledge that used to matter, people who did everything right and still lost everything.

I used to think those stories were about them—the unlucky ones who worked with their hands instead of their minds.

Turns out they were about us. We just haven’t wanted to admit it.

The Pattern

Here's what I see when I map the system:

- Input: New technology increases productivity per worker

- Mechanism: Competitive pressure forces adoption

- Output 1: Fewer workers needed (efficiency!)

- Output 2: Workers become dependent on technology they don't own (uh oh)

- Output 3: Wealth concentrates with technology owners (oh f@%)

- Output 4: Expertise goes extinct through non-practice (irreversible)

- Feedback Loop: Each efficiency gain increases competitive pressure for next gain

- Terminal State: Maximum productivity, minimum ownership, all value flows to platforms

This isn't speculative. It's a repeated architecture we’ve seen in different guises.

Agricultural mechanization followed this exact sequence. We're doing it again, except faster and to a different class of workers.

The farmers couldn't see the pattern because they were inside it. They just knew they had to buy the tractor or go bankrupt.

We have the abstraction layer. We can see the whole system.

We're running it anyway.

The clearer we see it, the faster we can accelerate it.

The Evidence

I used AI to help structure this essay. Not because I can't write—I've been doing this for years. But because I'm on deadline and it's faster, and if I don't use every advantage available, I'm competing against people who will.

That's the trap.

Individual optimization. Collective catastrophe.

Every single one of us is making the locally rational choice (use the AI, stay competitive) that produces the globally catastrophic outcome (train your replacement, lose your livelihood).

Game theory calls this a prisoner's dilemma. Systems thinking calls it a tragedy of the commons.

I call it what it is: We're all teaching the robots that will replace us because not doing it means getting replaced first.

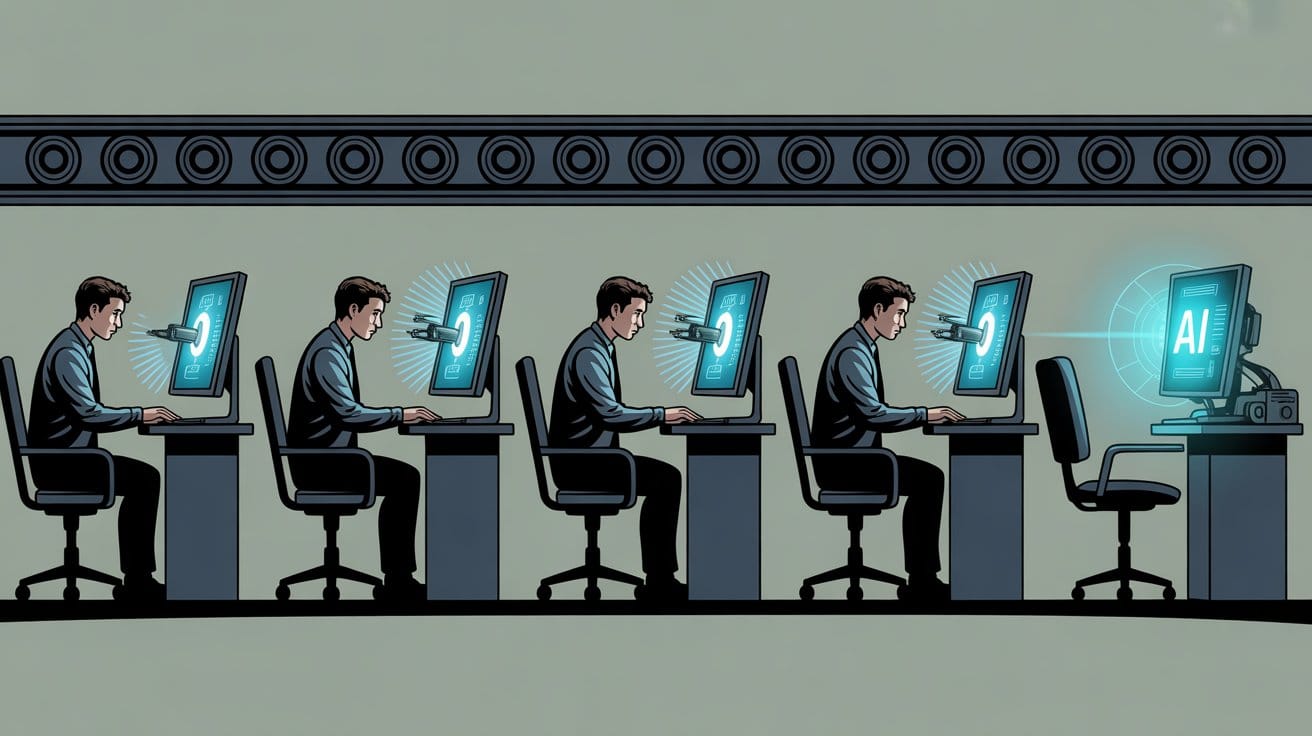

Stage 1: Enhancement (we are here). You use AI for email drafts, research summaries, code boilerplate. You tell yourself you're "augmenting" your capabilities. You're generating training data.

Stage 2: Dependence (1-2 years out). Your productivity doubles with AI. Your boss expects more output. Your pay doesn't increase. You stop practicing manual tasks because they're slower. But that practice was how you stayed sharp.

Stage 3: Skill Atrophy (2-3 years out). The AI is now better at those tasks than you are. Not because it's smarter. Because you stopped practicing. Your intuition has dulled. Your judgment has weakened.

Stage 4: Reclassification (3-4 years out). Your job becomes "reviewing AI output." That's not a skilled position. That's quality control. Lower pay grade. Less expertise required. You're now an AI operator.

Stage 5: Obsolescence (5 years out). Even review gets automated. The AI has processed millions of examples, including yours. You taught it how you think. Now it thinks like you, but faster and for free.

Enhancement feels like control. Dependence feels like efficiency. Atrophy feels like convenience. Reclassification feels like a title change. Obsolescence feels like silence.

This isn't a prediction. This is a systems diagram. I can draw you the feedback loops. I can map the timeline.

And I can't stop it. Neither can you.

The Saskatchewan Stories

Systems look clean on paper. Lives don't.

The farming families I knew had stories.

About grandfathers who employed 30, 40, 50 people during harvest. About communities built around threshing crews. About neighbors helping neighbors because you couldn't run a farm alone.

Then the combines came. Then GPS guidance. Then subscription-based seed genetics.

One guy told me his family's farm employed 47 people on 180 acres in 1952. Today, that same land—expanded to 900 acres—employs 4 people carrying $2.3 million in equipment debt.

Equipment they don't own. They lease it. From John Deere. Who can remotely disable the tractor if you miss a payment. Who charges thousands of dollars a year for the software license to operate a $250,000 machine you supposedly bought.

Try to repair it yourself? You void the warranty and break the contract.

His dad's margin last year? 2.3%. On $200,000 in revenue. He cleared $4,600. For a year of work. On land his family has owned for 90 years.

He's not a farmer. He's a John Deere operator: he technically owns the land, but he functionally rents the equipment he needs to work it.

That's the endpoint of mechanization. You get more productive. You produce more output. You capture less value.

All the efficiency gains flow to whoever owns the platform. Change the nouns—tractors to models, seed genetics to datasets—and the math doesn't change. The same pattern is running on knowledge work right now—except it's moving ten times faster.

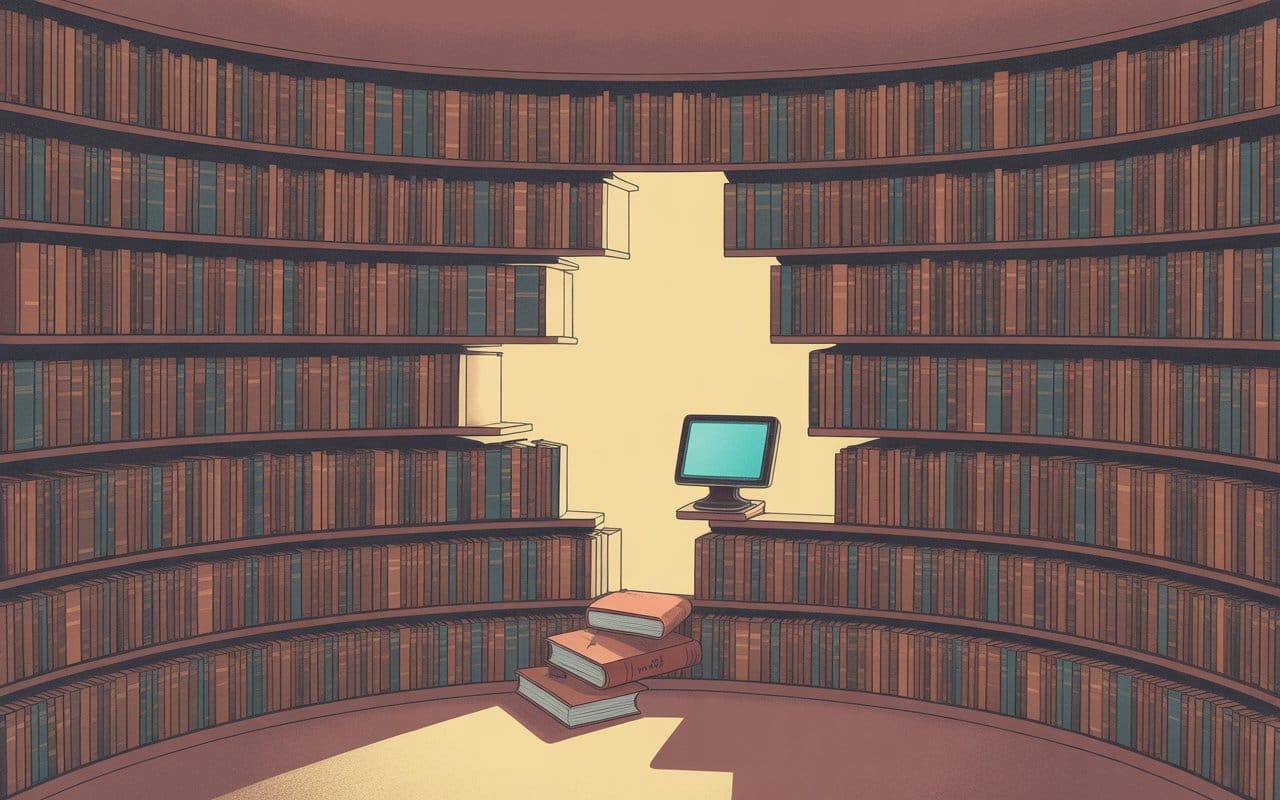

The Knowledge Extinction

Here's the part that freaks me out as someone who thinks in systems:

We're creating a cognitive monoculture.

Historian Dan Bussey has documented that more than 16,000 unique apple varieties were grown in the United States and Canada in the 1800s. Today, about 15-20 varieties dominate over 90% of commercial production.

What happened to the other 15,980? They're extinct. Not "rare." Not "endangered." Extinct.

And it's not just the apples. It's the knowledge of which varieties grew best in which microclimates, how to graft them, what pests they resisted, what diseases they were vulnerable to, their flavor profiles.

That knowledge died with the farmers who held it.

Why does this matter?

Because monoculture is efficient but fragile. When 90% of production concentrates in 15 varieties, a single disease can devastate everything.

This already happened. The Gros Michel banana, the variety that dominated the global banana trade until the 1950s, was commercially wiped out by Panama disease. We switched to Cavendish bananas (what you eat now). A new strain of Panama disease, Tropical Race 4, is now killing those too.

We can't go back to Gros Michel. The plantations are gone. The expertise is gone. The genetic diversity is gone.

Now map this to cognitive work.

Radiologists being trained today learn with AI assistance from day one. They're not developing the pattern recognition their predecessors spent 10,000 hours building. They're learning to operate the AI and spot its errors.

Some will still push for independent mastery. A few programs will preserve it. But the center of gravity shifts toward the tool.

What happens in 2035 when the AI encounters something novel—something outside its training distribution? When there's no human left who developed independent diagnostic intuition? When the senior radiologists who could have caught it are all retired or dead?

Programmers learning to code with GitHub Copilot aren't learning algorithmic thinking. They're learning to prompt and debug.

Exceptions will exist. They won't be the norm.

What happens when a critical infrastructure system fails—power grid, financial platform, air traffic control—and the bug is subtle, outside the AI's training data? When there's nobody left who developed deep architectural understanding because everyone learned with AI assistance?

The system fails. And nobody knows how to fix it.

This is knowledge extinction at scale. And unlike agricultural knowledge—which some people wanted to preserve—nobody's even trying to save cognitive expertise because it looks inefficient. Wasteful.

Why train someone the slow way when the fast way exists?

Because resilient systems require redundancy. Monocultures are efficient until they fail. Then they fail catastrophically.

We're optimizing for speed. We're getting fragility.

Resilience looks wasteful until the day you need it.

The Wealth Transfer

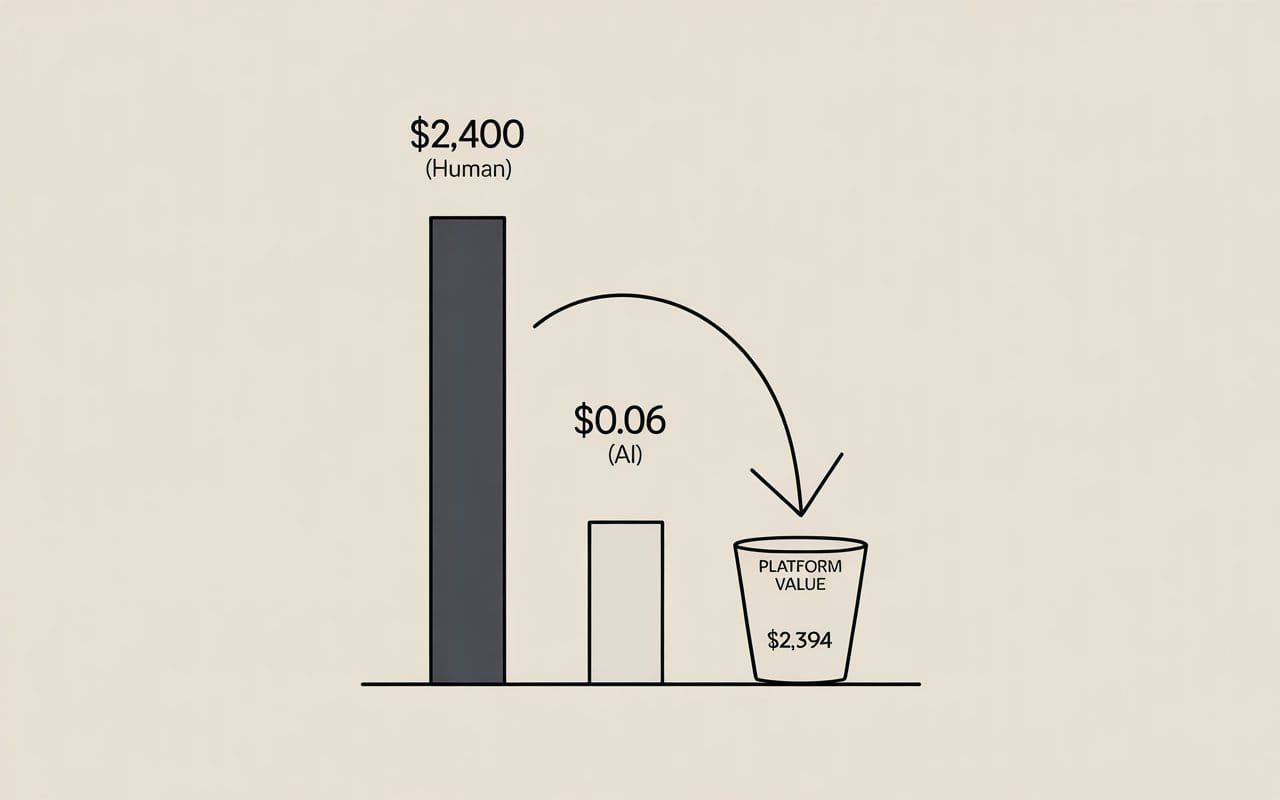

Let me show you the economic pattern with real numbers:

- A legal research task: 6 hours of junior associate time at $400/hour = $2,400

- Same task with ChatGPT: under a minute = $0.06 (based on ChatGPT Plus subscription costs amortized per query)

The difference: $2,399.94

That's not just efficiency. That's value transfer—from labor to capital, from the junior associate to the platform.

The AI is 460 times faster. It costs 1/40,000th as much.

Multiply that across every task, every worker, every industry.
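The legal-research arithmetic can be checked with a few lines of Python. This is a minimal sketch that takes the essay's own inputs (6 hours at $400/hour, $0.06 per query, a 460x speedup) and keeps money in integer cents to avoid floating-point rounding; the function name `legal_task_comparison` is purely illustrative.

```python
# Minimal sketch of the legal-research cost comparison.
# Inputs are the essay's figures; money is kept in integer cents.

HUMAN_HOURS = 6
HOURLY_RATE_CENTS = 400 * 100   # $400/hour for a junior associate
AI_COST_CENTS = 6               # $0.06 per query (amortized ChatGPT Plus estimate)
CLAIMED_SPEEDUP = 460           # "460 times faster"


def legal_task_comparison():
    """Return the cost figures and the AI time implied by the speedup claim."""
    human_cost = HUMAN_HOURS * HOURLY_RATE_CENTS        # 240,000 cents = $2,400
    delta = human_cost - AI_COST_CENTS                  # value no longer paid to labor
    cost_ratio = human_cost // AI_COST_CENTS            # how many times cheaper the AI is
    implied_ai_seconds = HUMAN_HOURS * 3600 / CLAIMED_SPEEDUP
    return {
        "human_cost_dollars": human_cost / 100,
        "delta_dollars": delta / 100,
        "cost_ratio": cost_ratio,
        "implied_ai_seconds": implied_ai_seconds,
    }


for name, value in legal_task_comparison().items():
    print(f"{name}: {value:,.2f}")
```

Dividing 21,600 seconds of associate time by 460 gives an implied AI time of about 47 seconds, which is consistent with "under a minute."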

OpenAI's valuation went from roughly $20 billion in 2021 to $157 billion by late 2024. Where did that $137 billion in value come from in three years?

From the delta between what work costs with humans and what it costs with AI.

Quality, liability, and billing structures complicate this math, but the direction is clear.

That delta used to go to workers (wages) or consumers (lower prices). Now it goes to whoever owns the model.

Here's why this is different from every previous automation wave:

Traditional automation:

- Build factory → High capital cost

- Scale production → Need more machines, more space, more workers, more energy

AI automation:

- Train model → High initial cost

- Scale production → Just run more queries

- Marginal cost approaches zero

Want to double factory output? You need physical resources. Want to double AI output? You just... run it more. No additional labor. Minimal additional cost.

This is unprecedented in economic history.
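The difference between the two cost structures can be made concrete with a simple fixed-plus-marginal cost model. This is a hypothetical sketch: the dollar amounts below are assumptions chosen only to illustrate the shape of the argument, not measured costs for any real factory or model.

```python
# Hypothetical cost model: total cost = fixed cost + marginal cost * units.
# The numbers below are illustrative assumptions, not measured figures.


def total_cost(fixed, marginal, units):
    return fixed + marginal * units


def cost_to_double(fixed, marginal, units):
    """Extra spend needed to go from `units` to `2 * units` of output."""
    return total_cost(fixed, marginal, 2 * units) - total_cost(fixed, marginal, units)


def average_cost(fixed, marginal, units):
    """Cost per unit; it falls toward `marginal` as output grows."""
    return total_cost(fixed, marginal, units) / units


FACTORY = {"fixed": 10_000_000, "marginal": 50.0}   # hypothetical: machines, space, labor, energy per unit
MODEL = {"fixed": 100_000_000, "marginal": 0.01}    # hypothetical: training run, compute per query

for label, params in (("factory", FACTORY), ("model", MODEL)):
    extra = cost_to_double(**params, units=1_000_000)
    print(f"{label}: doubling from 1M units costs an extra ${extra:,.0f}")
```

Under these assumed numbers, doubling output from one million units costs the factory an extra $50,000,000 and the model an extra $10,000, and the model's average cost per unit keeps falling toward its marginal cost as volume grows.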

And here's the feedback loop that makes it permanent:

Companies with AI make more money → More money means more data they can collect → More data means better models → Better models mean more competitive advantage → More competitive advantage means more money

The loop is self-reinforcing. OpenAI doesn't just have a good model. It has user data from millions of interactions, partnership data from enterprise deployments, capital to buy compute and talent, network effects where everyone uses it so everyone keeps using it.

How do you compete with that? You don't.

The wealth gap doesn't just grow. It explodes.

Once the flywheel spins, it doesn't stop.

The Speed Problem

Systems can't adapt at speeds faster than their feedback loops.

Agricultural mechanization took about 40-50 years of serious disruption (1930s-1970s). That's roughly two generations. Society struggled to adapt even with that much time. Rural communities still haven't recovered.

AI is compressing that timeline to 5-10 years. Maybe less.

Education systems have 4-6 year feedback loops (degree programs). Can't adapt.

Political systems have 2-8 year feedback loops (election cycles, legislative process). Can't adapt.

Social safety nets have 5-10 year feedback loops (policy design, implementation). Can't adapt.

Individual careers have 5-15 year feedback loops (skill acquisition, experience). Can't adapt.

AI deployment has 6-12 month feedback loops.

The system disrupting everything operates 10x faster than any system designed to absorb disruption.

That's not a challenge. That's a structural impossibility.
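As a rough check on the 10x figure, the feedback-loop ranges listed above can be compared directly. This sketch assumes that the midpoint of each range is representative; taking the extremes instead would give anything from about 2x to 30x. The dictionary keys are illustrative labels.

```python
# Feedback-loop ranges from the list above, in years (AI deployment in months).
LOOPS_YEARS = {
    "education": (4, 6),
    "political": (2, 8),
    "safety_nets": (5, 10),
    "careers": (5, 15),
}
AI_LOOP_MONTHS = (6, 12)


def midpoint(low, high):
    return (low + high) / 2


def speed_ratios():
    """How many AI deployment cycles fit inside one cycle of each absorbing system (midpoints)."""
    ai_mid = midpoint(*AI_LOOP_MONTHS)   # 9 months
    return {
        name: midpoint(*years) * 12 / ai_mid
        for name, years in LOOPS_YEARS.items()
    }


for name, ratio in speed_ratios().items():
    print(f"{name}: AI loop is ~{ratio:.1f}x faster")
```

With midpoints, the AI loop comes out roughly 7x to 13x faster than each absorbing system, which is an order of magnitude.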

And here's what makes it worse: AI improves AI development.

In agriculture, tractors didn't design better tractors. Human engineers were needed for each improvement. Linear improvement curve.

In AI, AI assists AI research. AI generates training data. AI optimizes architectures. Exponential improvement curve.
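The difference between the two curves can be sketched with a toy model. It assumes, for illustration only, that tractor-style improvement adds a fixed amount per generation while self-improving AI gains a fixed fraction of its current capability per generation; the starting value, step size, and rate are hypothetical.

```python
# Toy comparison of a linear and a compounding improvement curve.
# Starting capability, step size, and rate are hypothetical illustration values.


def linear_curve(start, steps, gain_per_step):
    """Each generation adds a fixed improvement (humans engineer every step)."""
    return [start + gain_per_step * n for n in range(steps + 1)]


def compounding_curve(start, steps, rate):
    """Each generation improves in proportion to current capability."""
    values = [start]
    for _ in range(steps):
        values.append(values[-1] * (1 + rate))
    return values


linear = linear_curve(start=1.0, steps=10, gain_per_step=0.5)
compounding = compounding_curve(start=1.0, steps=10, rate=0.5)
for gen, (lin, comp) in enumerate(zip(linear, compounding)):
    print(f"gen {gen:2d}: linear {lin:6.1f}   compounding {comp:8.1f}")
```

Both curves match after the first generation (1.5), but after ten generations the linear curve reaches 6.0 while the compounding curve reaches about 57.7.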

GPT-3 to GPT-4: nearly three years. GPT-4 to GPT-5: likely 1-2 years. Future iterations: potentially months.

The pace is accelerating, not stabilizing.

When the disruptor accelerates its own acceleration, everything else lags by definition.

Timeline compression means a single generation will face multiple obsolescence cycles. The same people who trained for a career will see it automated during their working lifetime. There's no generational buffer.

By the time we implement solutions to today's problems, the technology will have evolved three generations ahead.

The Purpose Problem

I think a lot. And this makes me think a lot more...

Jobs can be replaced. Income can theoretically be redistributed. Social safety nets can theoretically be built.

Purpose can't.

Humans need to feel useful. We need our skills to matter. We need our expertise to have value. We need to feel like we're contributing something that couldn't exist without us.

For 10,000 years, most humans got that from work. From being useful. From having skills other people needed.

What happens when nobody needs your skills anymore?

When the thing you spent 20 years mastering can be done better by an algorithm that costs $0.06?

When your expertise—the thing you built your identity around—becomes worthless?

I heard stories about farmers who couldn't cope with being obsolete. Depression. Alcoholism. Suicide. Men who couldn't adapt to being useless.

"They just kind of... stopped," one person told me. "Like they couldn't figure out what they were for anymore."

That's coming for the professional class.

For lawyers, programmers, analysts, writers, designers—everyone whose identity is built around cognitive expertise.

We're not just facing unemployment. We're facing obsolescence. And humans don't handle obsolescence well. You can't UBI your way out of existential purposelessness. Money can buffer need. It can't manufacture meaning.

The End

If current trends continue, here's the class structure emerging:

- The AI Aristocracy (~0.1%): Own AI infrastructure. Possess preserved expertise (taught human-to-human at private institutions). Control wealth and political power. Effectively permanent ruling class.

- The Professional Class (~10%): Integrate AI into specialized services. Moderate wealth. Dependent on aristocracy's platforms. Status maintained through access and connections.

- The Operator Class (~40%): Use AI tools for low-skill work. Subsistence wages. No job security. Economically precarious.

- The Displaced Class (~50%): Skills obsoleted. Dependent on social welfare if it exists. No economic mobility. Generational poverty.

These are estimates, not certainties. The shape is the point.

This isn't dystopian fiction. This is the logical endpoint of current trends. Agricultural mechanization created a similar restructuring.

It took 200 years. The communities it destroyed still haven't recovered.

We're doing it in 5-10 years.

Why We’re Going to Lose

I'm a systems architect. I solve coordination problems for a living.

And I'm telling you: this problem has no solution within the current system.

We can't stop individually. If I refuse to use AI, I'm less productive than my competitors. I lose my job. Individual resistance is economic suicide.

We can't organize collectively. You can't strike against an algorithm. Labor power comes from withholding labor. AI doesn't care if you withhold labor.

We can't regulate it. By the time regulations pass, three more model generations deploy. Political timelines are years. AI deployment is months. And even if the US regulates, China won't. National security overrides worker protection every time.

We can't build alternatives fast enough. New industries take decades to mature. AI can automate new industries from birth. There's no "safe" sector.

The system is a trap. And the trap is structural, not accidental.

We're in a coordination game where individual optimization produces collective catastrophe, and there's no mechanism for coordination that operates faster than the catastrophe unfolds.

In systems architecture, we call that a guaranteed failure mode.

Yet the impulse to survive wins every local decision.

The Pattern Completes

I see the pattern. I can map the system. I can predict the outcome. And I can't stop it.

Because I used AI to help structure this essay. I'm using AI to edit it. Right now. Not because I'm weak or stupid, but because I have rent due and I'm competing with everyone else trying to harness AI to get everything done.

I know what I'm doing. I understand the system. I see around corners. I am fully aware that I’m training my replacement anyway.

Because the alternative is being replaced first.

In 200 years, someone will write about us the way I write about the farmers. About the educated professional class that had every advantage, every tool, every opportunity to resist.

And chose efficiency over purpose. Speed over meaning. Individual survival over collective wellbeing.

They'll wonder why we couldn't see it coming.

We could. We did.

We just couldn't stop ourselves.

The farmers lost because they didn't know what was coming.

We're going to lose knowing exactly what's coming.

That's worse.



About the Author

Khayyam Wakil is an inventor and systems architect who grew up in Saskatchewan. He designs infrastructure and systems of intelligence, maps failure modes, and builds things that don't break under pressure. Khayyam learned pattern recognition from watching wheat prices, weather, and the slow death of rural communities, among other things =)

Khayyam has spent two decades in tech, building platforms that scale, optimizing processes that seemed unoptimizable, and solving coordination problems that seemed unsolvable. Khayyam understands how systems work, how they fail, and what happens when the feedback loops run faster than the humans inside them can adapt.

He writes about the intersection of technology and society, usually from the skeptical perspective of someone who's seen how quickly efficiency gains can become human losses. This essay represents 20.56% AI assistance and 79.44% human insight, a ratio he's tracking because it matters, and because he suspects it won't stay that way for long.

For speaking engagements or media inquiries: sendtoknowware@protonmail.com

Subscribe to "Token Wisdom" for weekly deep dives and round-ups into the future of intelligence, both artificial and natural: https://tokenwisdom.ghost.io

#AIautomation #futureofwork #systemsthinking #jobdisplacement #techcriticism #wealthinequality #knowledgework #purposecrisis #progresstrap #whitecollar #economicconcentration #skillsatrophy #longread | 🧮⚡ | #tokenwisdom #thelessyouknow 🌈✨
