The collapse of institutions comes not with a bang, but with decades of quiet neglect disguised as efficiency.

— According to Noam Chomsky, whose critiques of neoliberalism are now routinely cited in tech company diversity reports while they continue to lobby against labor protections and public oversight

The Token Wisdom Rollup ✨ 2025

One essay every week. 52 weeks. So many opinions 🧐

"The AI Alibi"

How we blamed robots for the mess we made…

With regard to, and in response to, the following:

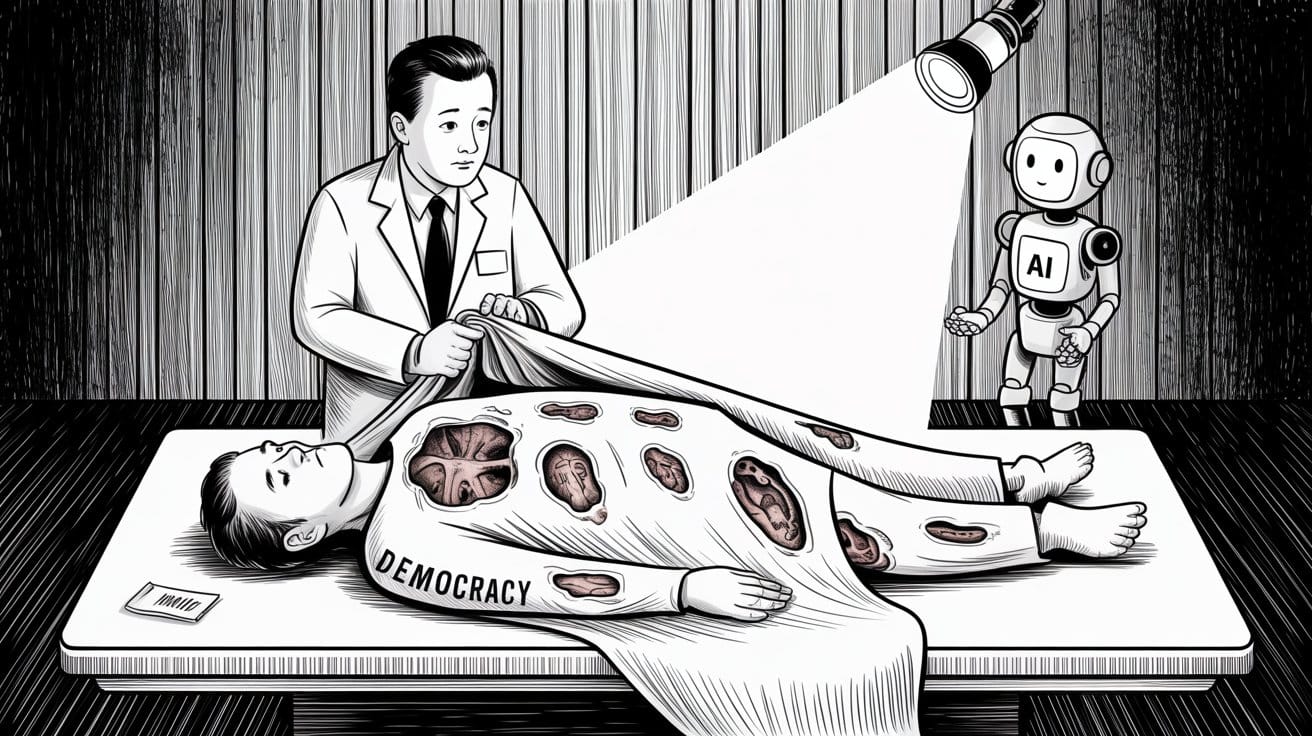

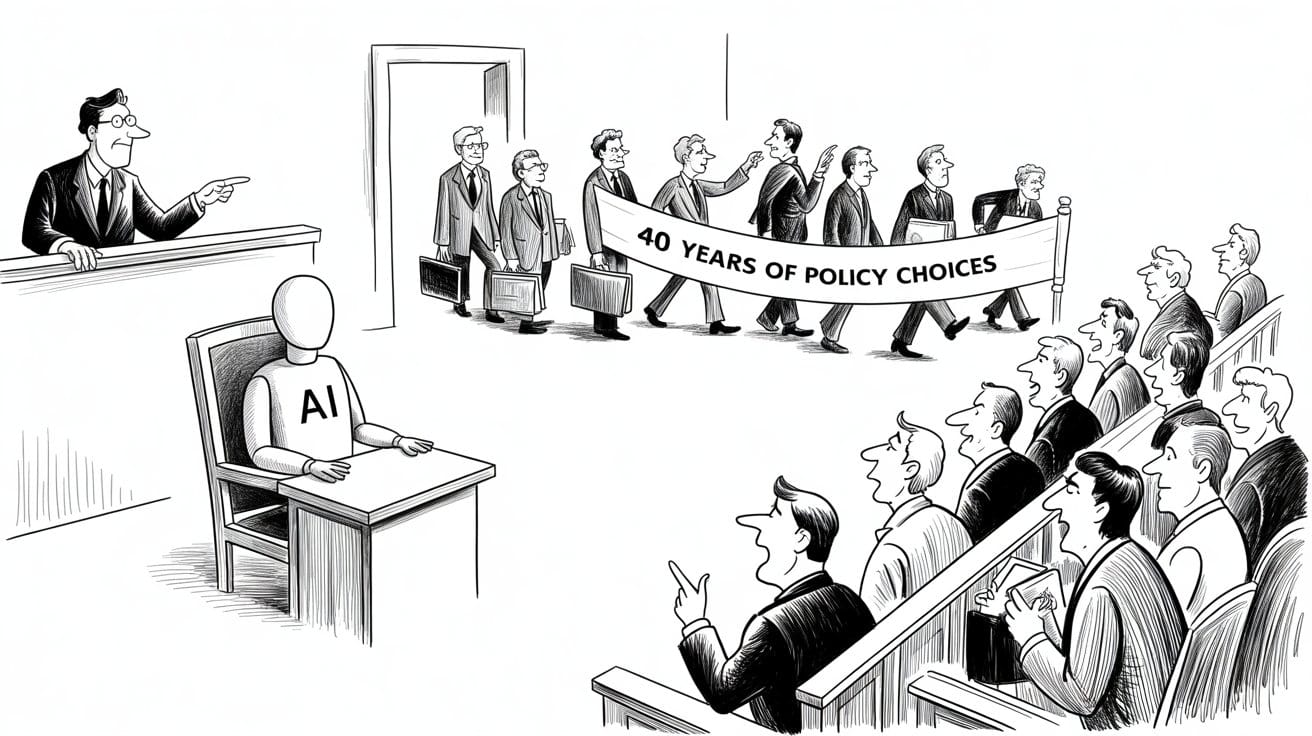

The timeline seemed obvious: artificial intelligence arrives, democracy dies. Except the autopsy tells a different story. The patient had been bleeding out for forty years. AI didn't pull the trigger; it just turned on the lights in the morgue.

But here's the interesting part: this whole story is backwards. AI isn't destroying our institutions. It's just showing us what we'd already broken. We spent forty years gutting these institutions ourselves: defunding, deregulating, and turning public goods into profit centers. ChatGPT didn't poison a healthy democracy. It arrived to find the patient already flatlined.

The timing was just too perfect, wasn't it? AI goes mainstream right as everything's falling apart. So we choose the comfortable narrative: blame the newcomer instead of confronting our complicity.

Look, the Numbers Don't Lie

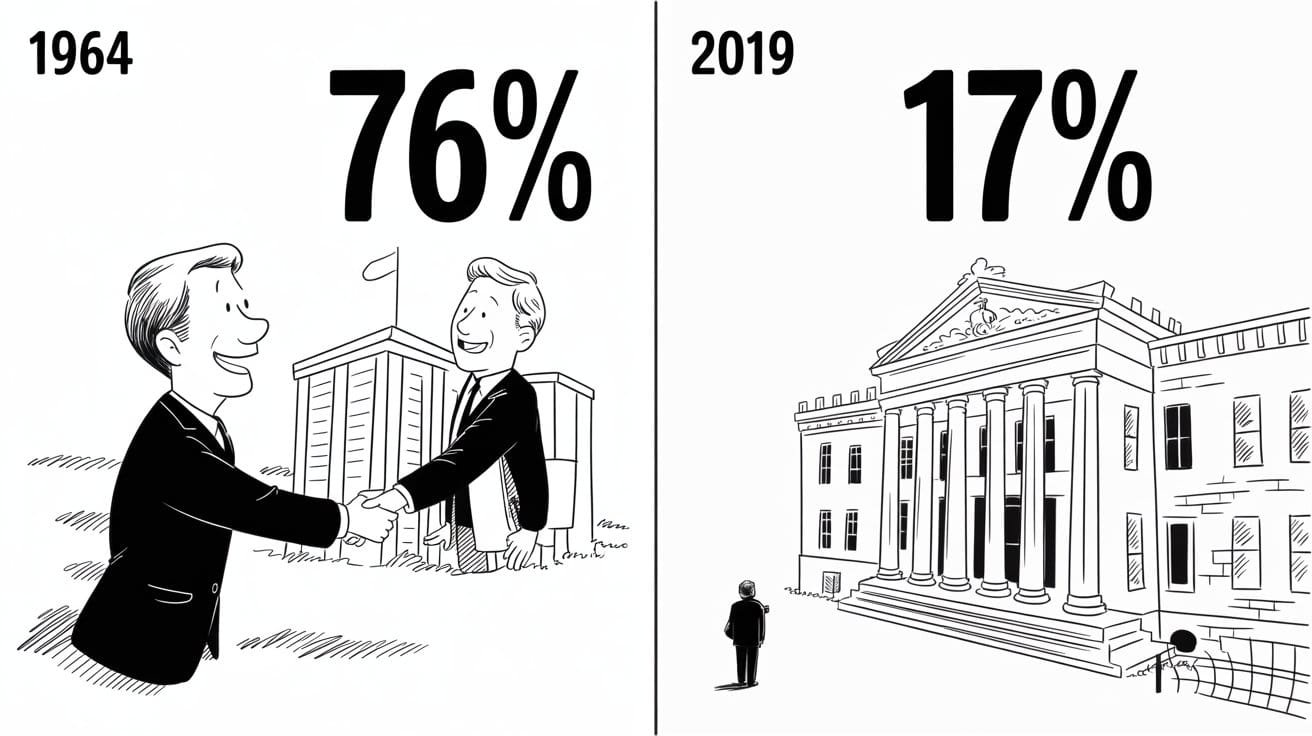

Back in 1964, 77% of Americans trusted their government. [1] By 2019—three years before ChatGPT was even a thing—that number had cratered to 17%. [2] We're not talking about a gentle slope here. This was a nosedive.

The same thing happened to higher education. In 1975, if you were a professor, you were probably tenured or on the tenure track: 57% were, anyway. [3] Fast forward to 2021, and only 24% have that security. Everyone else? Adjuncts. Underpaid, overworked, scrambling between campuses just to make rent.

Meanwhile, states slashed university funding by 40% per student. [4] Student debt went from $240 billion in 2003 to $1.75 trillion by 2022. [5]

[1] Pew Research Center. (2021). "Public Trust in Government: 1958-2021." Retrieved from https://www.pewresearch.org/politics/2021/05/17/public-trust-in-government-1958-2021/

[2] Pew Research Center. (2019). "Public Trust in Government: 1958-2019." The 2019 pre-COVID measurement showed trust at approximately 17%, continuing a decades-long decline. Retrieved from https://www.pewresearch.org/politics/2019/04/11/public-trust-in-government-1958-2019/

[3] American Association of University Professors (AAUP). (2021). "The Annual Report on the Economic Status of the Profession, 2020-21." Academe, 107(2). Data shows decline from 57% tenure-track in 1975 to 24% in 2021. Retrieved from https://www.aaup.org/report/annual-report-economic-status-profession-2020-21

[4] Center on Budget and Policy Priorities. (2020). "State Higher Education Funding Cuts Have Pushed Costs to Students, Worsened Inequality." Analysis shows per-student funding declined approximately 40% from 1980-2020 levels in inflation-adjusted dollars. Retrieved from https://www.cbpp.org/research/state-budget-and-tax/state-higher-education-funding-cuts-have-pushed-costs-to-students

[5] Federal Reserve Bank of New York. (2022). "Quarterly Report on Household Debt and Credit." Student loan debt reached $1.75 trillion in Q4 2022, up from $240 billion in 2003. Retrieved from https://www.newyorkfed.org/microeconomics/hhdc

This wasn't an accident. Reagan gutted education funding in the '80s. States said "sounds good" and followed along. Universities panicked and did what any business would do: raised prices and cut labor costs. Suddenly you had professors getting paid per class like substitute teachers, while administrators multiplied like rabbits.

The university became a corporation. Learning became a side business.

So when ChatGPT showed up, what did it find? Expertise had already evacuated. The professors who used to guide students through deep intellectual work? Gone, replaced by adjuncts juggling five classes across three campuses. Students were buried in loans, working nights and weekends, and treating their education like a costly transaction: pay up, get certified, get out.

Did AI cause that mess?

Or was it forty years of treating education like a private commodity instead of a public good?

Journalism? Same pattern.

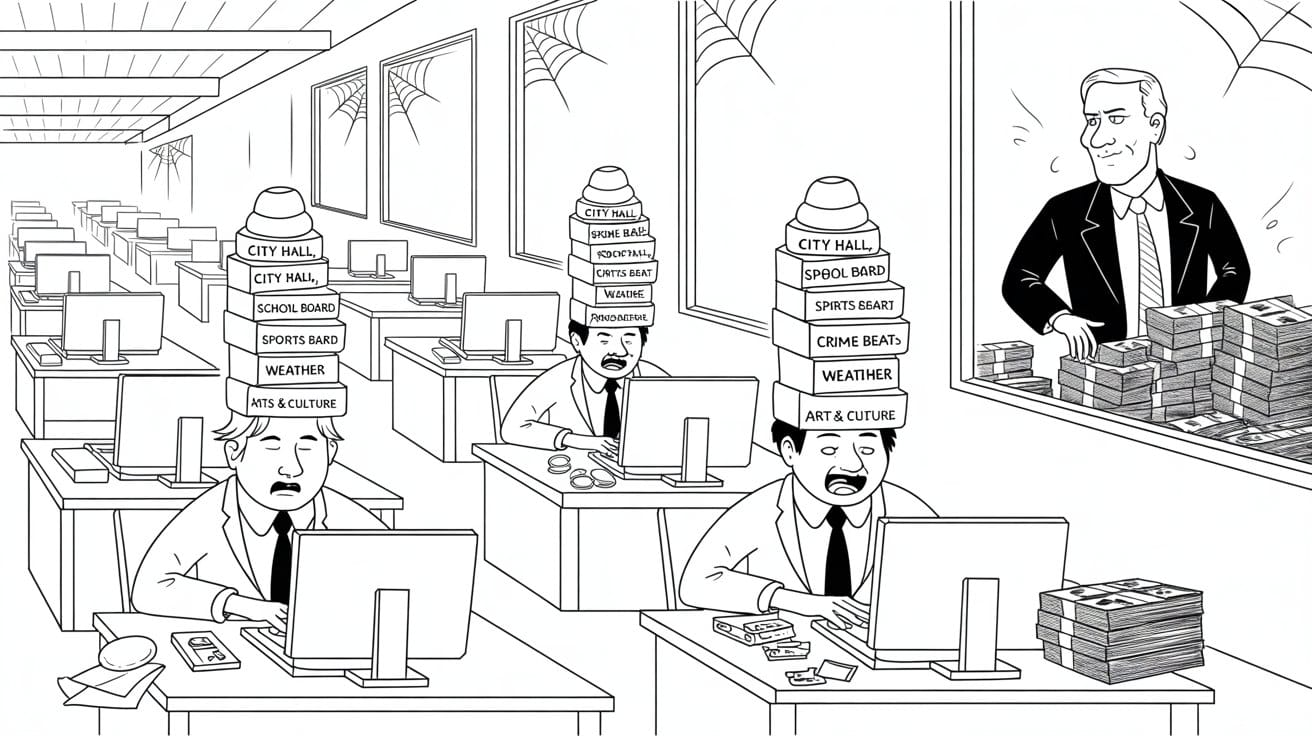

Between 2005 and 2020, newspaper advertising dollars plummeted by 82%.[1] Newsroom employment dropped 26% in just twelve years, from 2008 to 2020.[2] More than 2,500 newspapers closed their doors for good.[3] More than 1,800 communities found themselves without any local news coverage whatsoever.[4]

[1] Pew Research Center. (2021). "Newspapers Fact Sheet." Newspaper advertising revenue fell from $49.4 billion in 2005 to $8.8 billion in 2020, an 82% decline. Retrieved from https://www.pewresearch.org/journalism/fact-sheet/newspapers/

[2] Pew Research Center. (2021). "U.S. Newsroom Employment Has Fallen 26% Since 2008." Analysis of Bureau of Labor Statistics data. Retrieved from https://www.pewresearch.org/short-reads/2021/07/13/u-s-newsroom-employment-has-fallen-26-since-2008/

[3] Medill School of Journalism. (2022). "State of Local News 2022." Research documents closure of more than 2,500 newspapers (approximately 25% of all newspapers) between 2005 and 2020. Retrieved from https://localnewsinitiative.northwestern.edu/research/state-of-local-news/

[4] Abernathy, P. M. (2020). "News Deserts and Ghost Newspapers: Will Local News Survive?" University of North Carolina Hussman School of Journalism and Media. Documents 1,800+ communities losing local news coverage. Retrieved from https://www.usnewsdeserts.com/

And all this before GPT "entered the chat."

The timeline is brutal. Craigslist killed classified ads, the money that had funded investigative reporting for decades. Then Google and Facebook hoovered up 60% of digital advertising revenue,[1] leaving newspapers fighting over scraps. Private equity swooped in on the wounded survivors, extracted what value remained, then shuttered the newsrooms.

[1] eMarketer. (2019). "Digital Ad Spending 2019." Google and Facebook collectively captured approximately 60% of U.S. digital advertising revenue by 2019. Retrieved from https://www.emarketer.com/content/google-facebook-ad-dominance

Your local paper, if it still exists? Three people producing content for six different outlets, all owned by the same hedge fund. The statehouse bureau closed in 2012. Investigative team got axed in 2015. What's left are twenty-somethings earning thirty grand a year, trying to cover city council and the school board and the county commissioners and somehow still pay rent.

AI didn't kill journalism. It arrived to find the corpse still warm.

Running the Re-Runs

AI seems different. Faster, scarier, more... how you say… everywhere all at once?!

And this, dear viewer, is where our story takes a turn. What are we actually scared AI will do? Plot twist! It's already happening.

Worried about AI undermining expertise? That ship already sailed. Universities gutted their own expertise with the adjunct economy, replacing tenured professors with contract workers.

Newsrooms did the same thing, laying off seasoned reporters and keeping the mastheads. The expertise evacuated years ago. What's left are just the brand names.

Worried about algorithmic black boxes? Try figuring out how plea bargaining actually works. Or why prosecutors decide what they decide. Or, my personal favorite, why just one Wall Street banker went to prison after the banks crashed the entire economy in 2008.[1]

The system was already a black box. We just had a fancier name for it: "discretion."

Afraid of AI-induced social isolation? Robert Putnam wrote Bowling Alone in 2000,[2] documenting how American community was already shredding. Civic participation dropping since the '60s. TV keeping people indoors. Suburban sprawl. Economic anxiety. Two-income households where nobody has time for the PTA. People moving cities for work, leaving behind any roots they'd managed to grow.

[1] Eisinger, J. (2017). The Chickenshit Club: Why the Justice Department Fails to Prosecute Executives. New York: Simon & Schuster. Documents the lack of prosecutions of major banking executives following the 2008 financial crisis despite widespread evidence of fraud and misconduct.

[2] Putnam, R. D. (2000). Bowling Alone: The Collapse and Revival of American Community. New York: Simon & Schuster. Comprehensive documentation of social capital decline from 1960s-2000, covering civic participation, social trust, and community engagement across multiple metrics.

All happening decades before transformers entered the chat.

The stats tell the story: voting rates down, church attendance down, union membership in free fall. PTA participation cut in half. Even bowling leagues—peak Americana—lost 40% of their members. (The twist? More people were bowling than ever. They just weren't bowling together.)

The causes weren't technological. They were structural. Car-dependent suburbs isolated people in their separate boxes. Economic restructuring meant longer hours, second jobs, constant relocation for work. The safety net unraveled. Inequality exploded.

By 2000, Americans were working longer hours, moving around more for jobs, and trusting each other less. We weren't losing our sense of community; we'd already lost it.

So when people start forming relationships with AI chatbots today, they're not abandoning human connection. They're medicating a wound that was already festering. The rise of AI companions isn't a testament to how seductive the technology has become; it's evidence of how starved for connection we already were.

If anything, AI boyfriends and girlfriends are just the latest symptom of a social crisis decades in the making.

The Convenience of Blaming Silicon

What makes AI such an attractive scapegoat? Simple: it lets us off the hook.

Technology feels like something you can actually fix. There's code, there are programmers, there are hearings with people in suits asking important-sounding questions. It feels manageable.

What you can't easily fix is forty years of choosing money over people, every single time.

- Reagan convinced everyone that government was the problem.

- Clinton shipped jobs overseas and called it progress.

- Bush handed out tax cuts like party favors.

- Obama delivered soaring rhetoric about change while ensuring the financial architects of the crisis faced no real consequences.

The bipartisan consensus was ironclad, and so was its faith that markets would fix everything. Public investment is socialism. Inequality just means the system's working.

Confronting this stuff means admitting our own complicity. We voted for the politicians who defunded universities. We cheered the "disruption" that killed newspapers. We bought into the idea that everything—schools, hospitals, newsrooms, communities—should run like a business.

Blaming AI is so much simpler. It turns forty years of policy failure into a two-year tech problem. Get the AI ethics frameworks right, and we can skip the uncomfortable reckoning with the deeper rot.

Outlets everywhere have run dozens of pieces this year about AI threatening democracy. How many about state disinvestment in higher education? About private equity strip-mining local newspapers? About public funding collapsing for anything that doesn't turn a quick profit?

AI makes for better headlines.

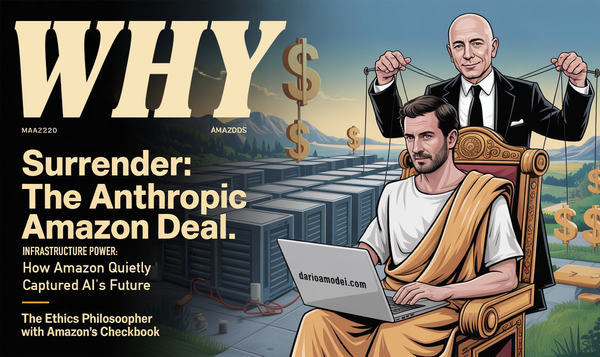

Who Benefits From the AI Panic?

Here's the thing about the AI panic: everybody wins.

Tech companies get to look responsible without actually changing anything. Hire some ethicists, publish some principles, show up to conferences. "Responsible AI" becomes just another brand position.

Politicians get amazing theater. AI hearings look like action without requiring them to do anything that might piss off donors. Want to actually fix things? That means raising taxes to fund universities. Fighting monopolies. Admitting that markets aren't magic.

Way easier to grill Mark Zuckerberg about algorithms.

And the rest of us? We get to feel like victims instead of accomplices. If AI is the bad guy, then we didn't vote for the people who broke everything. We didn't cheer when Amazon "disrupted" local businesses. We didn't click "agree" on terms of service we never read.

That's why the AI panic sticks. It's a convenient fiction that protects everyone from accountability.

What Happens If We Get This Wrong

Misdiagnose the problem, and your solution will suck.

Focus all your energy on AI ethics boards and transparency requirements, and you'll miss the real disease. Not that those things are useless; they're just Band-Aids stacked on a severed artery. You can only do so much.

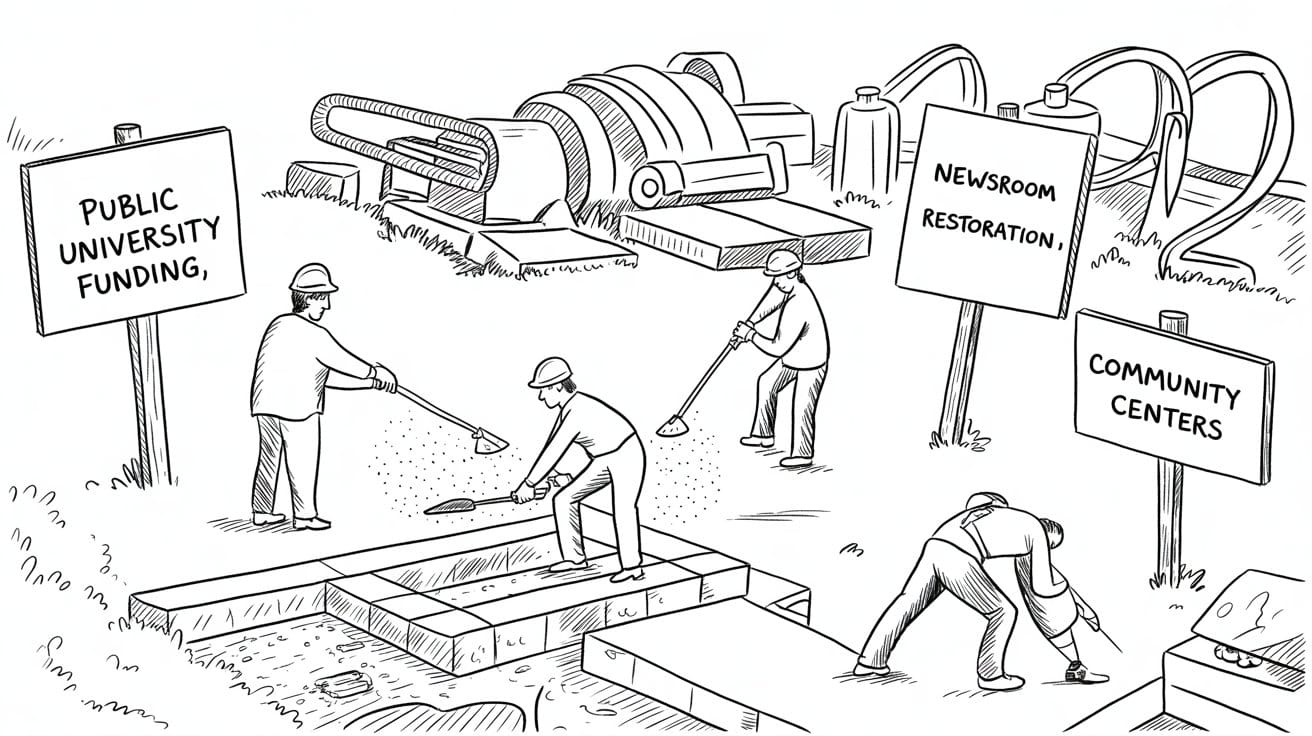

Want to actually fix this? Here's where we start: restore university funding to 1980 levels. That takes more than a 40% bump: recovering from a roughly 40% per-student cut means raising spending by about two-thirds, because a budget at 60% of its old size has to grow by 67% to get back to 100%. Eliminate the adjunct racket entirely. Rebuild the tenure-track system that actually let professors profess instead of scrambling between gig jobs. As for student debt? Wipe it clean. Every penny. It wasn't students failing the system; it was the system failing students.

Treat journalism like public infrastructure, because that's what it is. Fund it the same way we fund roads, schools, and fire departments. Information isn't a luxury good or a hobby for rich people to subsidize when they feel generous.

News is infrastructure, not entertainment. Look around the world: public broadcasting works. The BBC pisses people off regularly, but it's not folding. CBC drives Canadians crazy half the time, but it's still there covering federal elections. NPR makes everyone mad about something, but member stations aren't shutting down every month like newspapers.

Here's what we actually need: take that model and shrink it down. Every community gets a publicly funded newsroom. Not some grand metropolitan media empire, just three people who can cover city council without worrying about clicks. Reporters who can spend two months digging into why the school superintendent's contract is so weird, instead of churning out five stories a day about traffic accidents.

Journalism that doesn't have to be profitable to exist.

Democracy is too important to be held hostage by whatever tech bros are feeling this week. Public information, public funding—it's not complicated.

Break up Google and Facebook. Not because they're evil—though they might be—but because they're too big. No private company should control the information infrastructure of a democracy. Period.

Bring back antitrust that has teeth. Not the performance art where executives apologize to Congress and promise to do better. The kind that actually splits companies into pieces.

Tax wealth like we used to. Inequality didn't just happen; we designed it through tax policy. Why should investment profits get a discount that paychecks don't? Why should hedge fund managers pay lower rates than the firefighters protecting their mansions?

We had progressive taxation before. It worked. We can do it again.

Fund the spaces where democracy actually happens. Libraries, parks, community centers and all the unglamorous stuff that keeps communities from falling apart. The places where you're stuck waiting in line with your neighbors, where your kid ends up playing with someone else's kid and suddenly you're talking to their parent about the pothole situation. Where you see the same faces at the grocery store and the school board meeting.

Fund it properly. Make it free. Stop charging admission fees for basic human interaction. Stop acting like public space is a luxury we can't afford while we hand out tax breaks to Amazon.

In other words: reverse forty years of neoliberal policy.

That's the prescription.

You can't regulate AI if the institutions doing the regulating are already falling apart. It's like trying to perform delicate surgery while the operating room is flooding. We keep asking how to protect democracy from AI, but that's backwards. The question is: how do we fix democracy enough that it can actually handle AI?

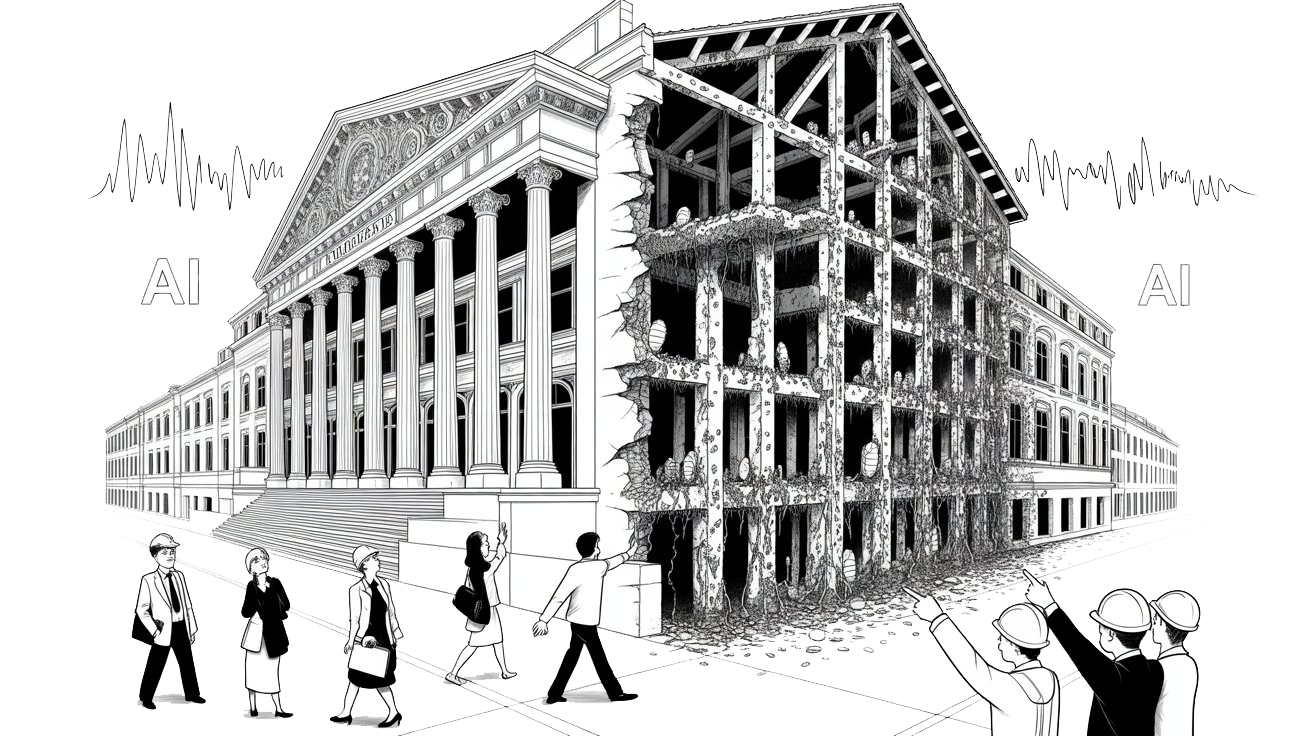

Earthquakes and the Termites

Here's a question structural engineers know well: What actually brings down a building?

Most people think they know the answer. The dramatic stuff gets the headlines, like earthquakes, hurricanes, the spectacular failures that make the evening news. But engineers will tell you something different: the real killers work in the dark.

Picture an old building that looks solid from the street. Century-old brick, good bones, built when people cared about craftsmanship. Pedestrians walk by every day, never questioning its permanence. The facade stays pristine, the windows get cleaned, fresh paint covers any surface cracks.

But deep in the foundation, termites have been feeding for decades. Silent, systematic, invisible from outside. Every wooden beam a little more hollow. Every support just a little less supportive. The building stays upright through momentum and habit, until the day something shakes it hard enough to reveal what's actually left.

Then an earthquake hits. Not even a big one, maybe a 4.2 that wouldn't faze a properly maintained structure. But this building? It pancakes. Complete collapse. Front page news.

What killed the building?

Most people blame the earthquake. The investigation focuses on seismic standards, building codes, earthquake preparedness. But the earthquake just finished what the termites started.

AI is the earthquake. Neoliberalism was the termites.

For forty years, we let market fundamentalism eat away at our institutional foundations. Every budget cut, every privatization, every time we chose efficiency over resilience, the termites kept chewing.

AI didn't knock down our democracy. It just shook hard enough to reveal what the termites had already hollowed out.

So here's the real question: Do we want to earthquake-proof the ruins? Or do we want to exterminate the termites and rebuild on solid ground?

Because you can write all the AI ethics frameworks you want. You can regulate algorithms until your eyes bleed. But if the foundation is still infested, the next shake will bring it all down again.

We blame the earthquake.

The Postmortem Report

Fifty years from now, historians will look back at 2022 and see something we missed: AI didn't break democracy. It just walked into the room and flipped on the lights.

What it illuminated was already there: institutions that had been gutted from the inside out, held upright by inertia and branded rhetoric. Universities that had fired their professors but kept their logos. Newsrooms that had laid off their reporters but still published under the same mastheads. A government that had outsourced so many functions to contractors that nobody could say who actually made the decisions anymore.

The AI panic gave us something to blame other than ourselves.

AI didn't break America. We broke America.

Forty years of choosing shareholder value over everything else. Turning schools into businesses, news into entertainment, healthcare into profit centers. Systematically weakening anything that might tell corporations "no."

ChatGPT "entered the chat" at the end of 2022, and by then American democracy was already in critical condition. Higher education was already a debt trap, and journalism was already bleeding out. Social trust? Already extinct.

The AI panic serves a function. It transforms a structural crisis into a technological problem and promises that AI ethics can somehow fix what decades of policy choices broke.

The funny thing is, we can regulate AI all we want. We can build the most ethical algorithms in human history, and we can make every chatbot cite its sources and every deepfake carry a watermark.

And good old democracy would still be held together with duct tape, some bubble gum, and wishful thinking.

Because the real problem was never artificial intelligence. It was our very human choice to treat everything—schools, hospitals, newsrooms, communities—like a business opportunity instead of a public good.

We broke democracy. Not with malice, but with forty years of seemingly reasonable choices that compounded into catastrophe. Every state budget that treated universities like luxuries. Every newspaper chain sold to private equity. Every time we said 'let the market handle it' instead of 'let's make this work.'

AI didn't cause the crisis. It just made it impossible to ignore.

The question isn't whether we can make AI safe for democracy. It's whether we can make democracy strong enough to govern AI.

That's not a technology problem. It's a choice.

The same choice we've been avoiding for four decades.

Don't miss the weekly roundup of articles and videos from the week in the form of these Pearls of Wisdom. Click to listen in and learn about tomorrow, today.


About the Author

Khayyam Wakil is a systems theorist and researcher whose work focuses on the intersection of technology, institutions, and power.

He has been wrong about many things, but not about this.

"Token Wisdom" - Weekly deep dives into the future of intelligence: https://tokenwisdom.ghost.io

#techethics #systemicchange #collectiveaction #futureof: #techindustry #platformcooperativism #labororganizing #techresistance #powerstructures

#leadership #longread | 🧠⚡ | #tokenwisdom #thelessyouknow 🌈✨