"The eye sees only what the mind is prepared to comprehend."

— According to Robertson Davies, who would be thoroughly amused that I'm using his quote about blind spots in an essay about my own blind spots. Meta enough for you, Robertson?

What 51 Weeks of Technology Analysis Deliberately Ignored

The OpenAI board crisis revealed more than organizational dysfunction. It exposed the blind spots in how we talk about technology itself.

When Sam Altman was fired and rehired within five days last November, the tech world erupted in analysis. Commentators dissected power dynamics, Effective Altruism, the tension between accelerationism and safety, and the future of AI governance.

I was going to write about it too. For this week's essay, I had planned to explore how the crisis illuminated control structures in AI development.

But what this crisis actually revealed was something more personal: my own blind spots—and our collective ones—in how we fundamentally analyze technology.

This year, I wrote about nearly everything: spatial computing and quantum mechanics, digital biomarkers and blockchain governance. I examined immersive experiences and pondered the future of intelligence. I looked at how technology reshapes our perception, transforms healthcare, redefines what creativity even means, and restructures power.

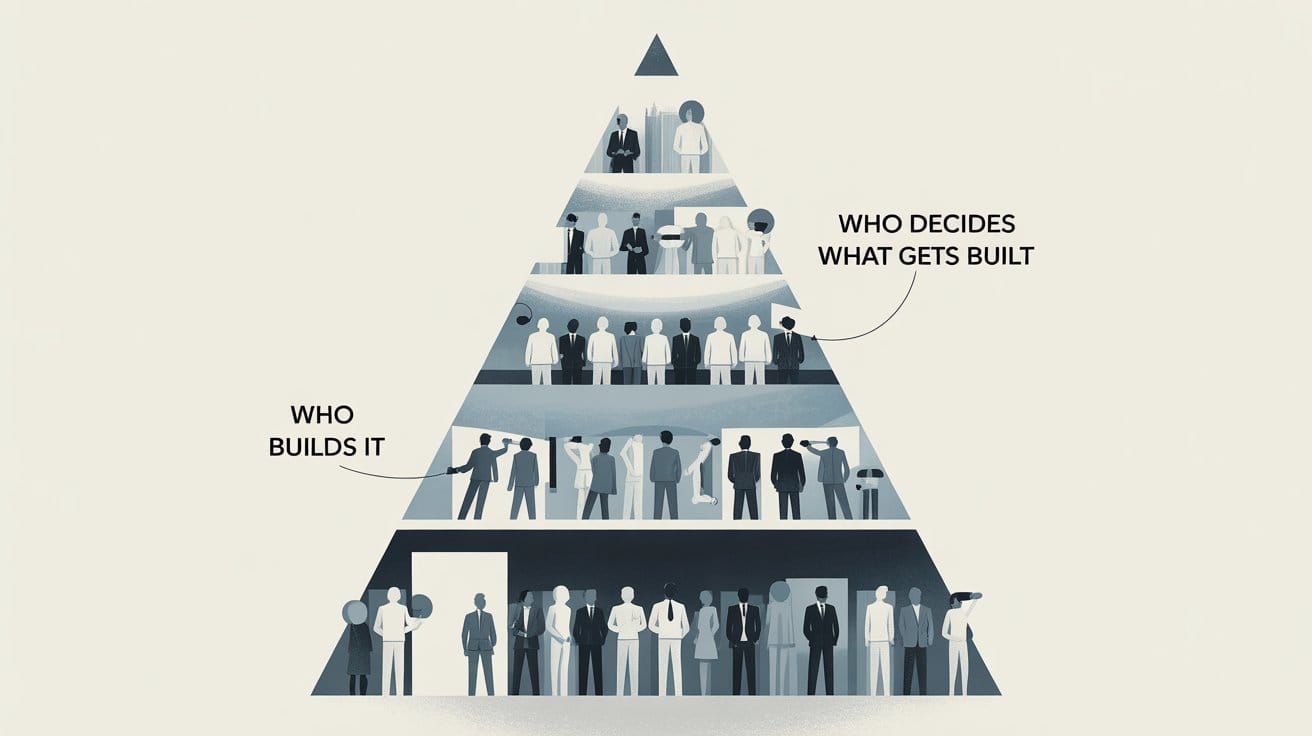

And throughout this entire year, I systematically avoided the most crucial questions: Who's building this? Who benefits? Who pays the price?

That's not accidental. That's architecture.

The Pattern You Can't See From Inside

I experienced something uncomfortable when I looked back at my work.

An analysis of my output (nearly 200,000 words written over the past year examining technology's impact on society) reveals telling priorities:

Heavy coverage:

- 📝📝📝📝📝📝📝📝 AI capabilities and limitations (8 articles)

- 📝📝📝📝📝📝📝 Immersive technology and spatial computing (7 articles)

- 📝📝📝📝📝📝 Platform economics and business strategy (6 articles)

- 📝📝📝📝📝 Healthcare transformation (5 articles)

- 📝📝📝📝 Quantum computing and deep tech (4 articles)

Minimal coverage:

- Gender dynamics in tech development: one passing mention

- Racial equity in AI systems: one brief discussion

- Climate impact of computing infrastructure: two partial articles

- Geographic inequality in technological access: implied once, never examined

- Labor conditions in the AI supply chain: completely absent

I didn't randomly distribute my attention this way. This pattern reflects a particular worldview—my worldview, it turns out.

The OpenAI Crisis as Mirror

When the Sam Altman drama unfolded, most of us tech analysts (myself included) gravitated toward it because it fit into frameworks we're comfortable with: classic board versus CEO tension, safety versus acceleration debates, institutional control struggling against charismatic authority. Familiar territory.

But here's what we consistently failed to examine:

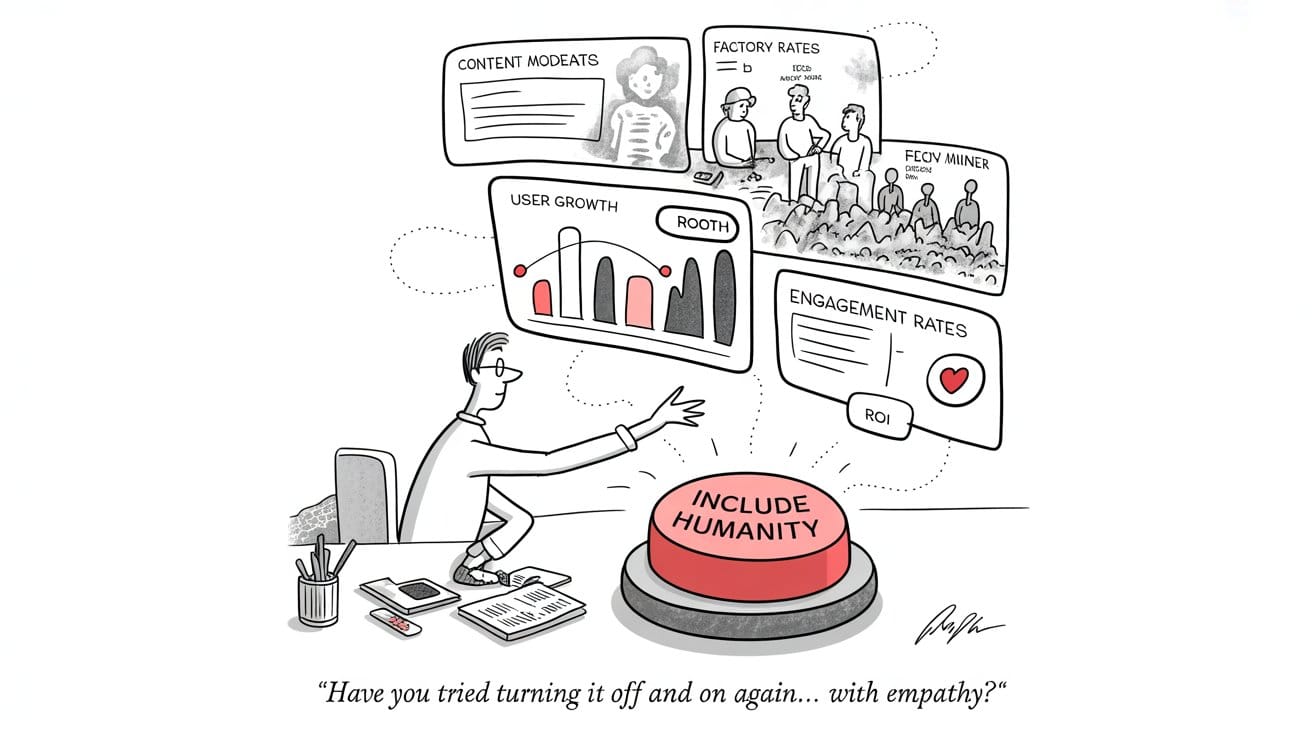

Who was in the room? The crisis played out among predominantly white, Western, male actors deciding humanity's future. Meanwhile, the people who will be most affected by AI—like content moderators in Manila working traumatic shifts, drivers whose livelihoods face automation, or communities disproportionately targeted by facial recognition—had absolutely no voice in the discussion.

Whose labor enables it? Well before we started debating philosophical approaches to AI safety, Kenyan workers were being paid less than $2 an hour to label horrifically traumatic content so that ChatGPT's safety filters could learn to detect it. This isn't a footnote to the crisis. It's the foundation these systems are built upon.

Whose knowledge was extracted? These systems rest on decades of unpaid creative labor: Indigenous knowledge, cultural expressions, and personal communications, all transformed into corporate assets without consent or compensation.

What's the climate cost? Training GPT-4 reportedly gobbled up more electricity than 1,000 American homes use in a year. Yet somehow this massive environmental impact barely registered as a footnote in the governance debate.

While the governance crisis merited attention, our selective analysis exposed our trained blindness to systemic issues.

What I Got Wrong: A Systematic Review

I need to be specific about where I failed this year. Vague gestures toward "doing better" won't cut it—they never do.

The Gender Gap I Perpetuated

What I wrote: Week 9 examined NeRF and Gaussian Splatting—breakthrough 3D visualization technologies. I analyzed technical architectures, computational efficiency, potential applications.

What I missed: These technologies are being developed overwhelmingly by male researchers, for applications that tend to reflect traditionally masculine priorities—think gaming, military simulation, industrial design. And you know what's barely being explored? Applications that might prioritize care work, visualize domestic labor, or monitor maternal health. Not because they're technically impossible, but because they don't align with the interests of the people building these systems.

The pattern: I kept writing as if technology just emerges from some neutral space of technical possibility, rather than recognizing it springs from the specific interests—and blind spots—of its predominantly male creators.

The Racial Dynamics I Avoided

What I wrote: Week 17 on Explainable AI discussed transparency and interpretability. I examined why black-box algorithms pose governance challenges.

What I missed: In the 2018 Gender Shades audit, commercial facial-analysis systems misclassified darker-skinned women at error rates of up to 34.7%, compared with 0.8% for lighter-skinned men. That's not just some technical hurdle we need to overcome. It reflects a choice about whose faces mattered during training. I could have explored how demanding "explainability" hits differently when you're not the one being misidentified by law enforcement algorithms that might drastically alter your life.

The pattern: I treated AI bias as a technical problem requiring better methods, rather than as a power structure requiring redistribution of decision-making authority.

The Climate Crisis I Footnoted

What I wrote: Week 5 touched on AI's role in eco-energy optimization. Week 25 examined gravity batteries as alternatives to lithium. Both framed climate as a problem technology would solve.

What I missed: Data centers already gulp down about 1% of global electricity. By 2030, that could balloon to 8%. Every time I wrote glowingly about AI advancement, I was implicitly endorsing an infrastructure expansion that's accelerating climate change. And here's the kicker—the communities bearing the brunt of climate impacts (think Pacific islands slowly disappearing underwater, drought-stricken regions of sub-Saharan Africa, flood-prone Bangladesh) are the same communities that have the least access to these technologies' benefits.

The pattern: I kept treating computational resources like they're unlimited, acting as if climate impacts can be fixed with a few efficiency tweaks, and pushing environmental costs to the margins instead of seeing them as fundamental constraints on what we should be building.

The Geographic Concentration I Reproduced

What I wrote: 51 articles analyzing technological transformation. Primary examples drawn from: Silicon Valley, U.S. healthcare, American platform companies, Chinese AI development, European regulation.

What I missed:

- Africa's mobile money revolution (in several respects ahead of Western fintech)

- Latin American community mesh networks (alternatives to corporate platforms)

- Southeast Asian platform diversity (different engagement models)

- Indigenous technological integration approaches (genuine alternatives to Western frameworks)

The pattern: I defaulted to centering Western and East Asian technological development while treating the rest of the world as passive recipients of innovation rather than active, creative sources of it. As if Silicon Valley and Shenzhen are where all the "real" innovation happens.

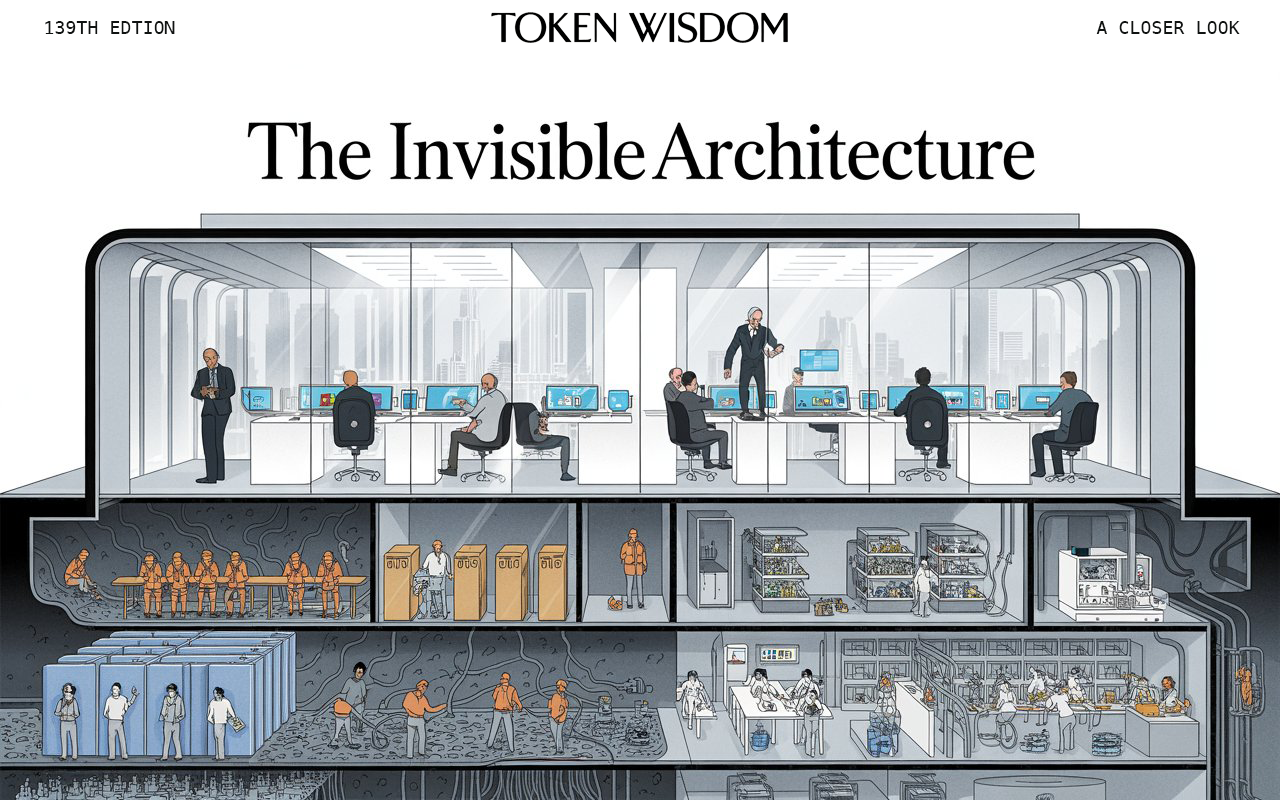

The Labor Invisibility I Maintained

What I wrote: Multiple articles on AI advancement, platform economics, content creation—examining systems from the top.

What I missed:

- Content moderators experiencing psychological trauma for minimal pay

- Gig workers with no employment protections enabling "innovation"

- Rare earth mineral extraction conditions supporting hardware production

- E-waste communities bearing toxic costs of planned obsolescence

The pattern: I analyzed glossy tech systems while systematically ignoring the exploited human labor that makes those systems possible. I wrote about the software but not the suffering.

Why These Gaps Aren't Accidental

Here's the uncomfortable truth I've had to face: I didn't just accidentally overlook these issues. I was methodically trained to overlook them—just like most technology analysts operating in our current system.

My background—immersive media technology, spatial computing, AI systems development—equipped me to analyze certain problems while remaining structurally blind to others.

What I was trained to see:

- Technical capability

- System architecture

- Market dynamics

- Innovation patterns

- Future possibilities

What I was trained to ignore:

- Power distribution

- Labor conditions

- Environmental cost

- Geographic inequality

- Gender dynamics

- Racial equity

I don't think this is just my personal failing. It's baked into the epistemic infrastructure of tech analysis. The very systems that trained me to do deep technical analysis simultaneously prevented me from seeing the social implications of that technology. It's a feature, not a bug.

The Feedback That Actually Stung

After publishing the cultural amnesia piece, I received pointed feedback:

"Limited Gender/Race Analysis: You examine power dynamics through technological and economic lenses, but questions of who builds, who benefits, who bears costs along gender/racial lines remain largely unaddressed."

My first reaction was defensive—I can still feel the sting of it: "But I write about technology, not sociology!"

Then I sat with it. Because that response reveals the problem perfectly.

The belief that technology can be analyzed separately from gender/race/labor/climate isn't neutral—it's ideological. It's the ideology that enables exploitation while maintaining plausible deniability.

When I write breathlessly about "AI advancement" without examining whose labor actually trains the models, that's not objective analysis—that's complicity through omission. I'm telling half the story and pretending it's the whole truth.

What Would Actually Comprehensive Analysis Look Like?

Let me redesign a single article to show what I mean.

Original Week 2: "WBAN Healthcare"

- Focus: Wireless body area networks revolutionizing medical monitoring

- Analysis: Technical capabilities, clinical applications, future potential

- Word count devoted to: technology (80%), medical benefits (20%)

- Word count devoted to: who manufactures sensors (0%), privacy implications for incarcerated people forced to wear monitors (0%), energy/resource cost of ubiquitous sensing (0%)

Redesigned Version:

- Section 1: Technical capabilities (what WBAN can do)

- Section 2: Who builds these systems and for whom (majority-male engineering teams, predominantly serving wealthy patient populations)

- Section 3: Labor and supply chain (cobalt mining for batteries, manufacturing conditions, e-waste disposal)

- Section 4: Differential access (how WBAN reinforces healthcare inequality between Global North/South)

- Section 5: Climate implications (energy cost of continuous sensing)

- Section 6: Surveillance concerns (how medical monitoring infrastructure gets repurposed for social control)

- Section 7: Future possibilities (but now contextualized within power structures)

Same technology. Completely different analysis. But this version actually accounts for material reality instead of pretending innovation happens in some kind of frictionless social vacuum.

The Solutions Gap Is A Power Gap

Earlier feedback noted: "You excel at diagnosis. You're weaker on prescription."

I nodded along at first. "Yep, that sounds right. I'm definitely better at analysis than actionable frameworks."

But I've realized that's not quite it. The problem isn't that I'm bad at solutions—it's that I've been proposing solutions while completely ignoring the power dynamics that would make those solutions impossible to implement in practice.

Example—Week 44: "From Tasks to Systems"

I wrote about redesigning organizational architecture to capture AI's potential.

What I completely ignored: When AI "transforms service industries," actual human beings lose their jobs. The "organizational architecture" changes I was cheerfully proposing meant displacing workers who had zero say in these decisions that would upend their lives.

My brilliant "solution" was actually creating a massive problem for everyone not sitting in management positions. But I couldn't see that because I was writing from—and for—a management perspective without even realizing it.

The real solution gap: I can't propose genuine solutions without first grappling with who actually has the power to implement them and whose interests they're designed to serve.

What Needs To Change (Starting With Me)

If I could go back and start this whole series over (and honestly, part of me wishes I could), here's the framework I'd use instead:

Every Technical Analysis Must Include:

1. Labor visibility

- Who builds this?

- Under what conditions?

- For what compensation?

- What extraction enables it?

2. Differential impact analysis

- Who benefits?

- Who bears costs?

- How does this reinforce or challenge existing inequality?

- What's the gender/racial dynamic?

3. Environmental accounting

- Energy cost

- Material extraction

- E-waste

- Climate impact

- Whose communities bear environmental burden?

4. Geographic distribution

- Where is this developed?

- Where is it deployed?

- Who's excluded?

- What alternatives exist in other regions?

5. Power structure mapping

- Who decides?

- Who's affected but excluded from decisions?

- What interests are served?

- What alternatives exist?

Every "Future of Technology" Article Must Ask:

- Future for whom?

- At whose expense?

- Who decides what future gets built?

- What futures are we not building and why?

The Deeper Pattern

But here's what's been gnawing at me as I've reflected on these 51 weeks:

Even this whole analysis I'm doing right now—this public confession of my blind spots—still centers my limitations rather than the people my analysis systematically erased.

I'm still making this fundamentally about "what I got wrong" rather than "whose experiences I systematically devalued." It's still all about me, isn't it?

The content moderators working grueling shifts in Manila don't need my confession—they need better working conditions, fair compensation, and actual psychological support for the trauma they experience daily.

The communities mining cobalt so we can have our devices don't need my belated acknowledgment of environmental costs. They need remediation of poisoned soil and genuine alternative economic opportunities.

The brilliant researchers across Africa developing genuinely innovative technologies don't need me to suddenly notice their erasure from my writing. They need platforms, funding, and the recognition they've always deserved.

The real question isn't "what did I miss?" It's "who am I writing for?"

If I'm writing primarily for technology executives, VCs, and innovation consultants—basically, people who have actual power to shape these systems—then maybe calling attention to these blind spots could serve some purpose.

But if I'm writing for general audiences while still centering elite perspectives and problems, then I'm just reproducing the exact power structures I'm claiming to critique. Which would make this whole exercise pretty hollow.

Beyond Blind Spots with a New Technology Analysis

The OpenAI crisis that started this whole reflection wasn't really about corporate governance—it was one of those rare moments when the technology industry briefly glimpsed its own machinery from the outside. For about five days, all our usual narratives stumbled and faltered, revealing the deeply human infrastructure that props up our technological systems.

What if we could build analysis that maintains that kind of clarity? What if technology writing refused to let us forget about the actual hands that are building our future?

For two years, I've been analyzing technology while systematically overlooking the human architecture that makes it all possible. I kept separating technical capability from social consequence, treating innovation as if it's neutral rather than shaped by very specific interests, presenting shiny advancements without examining their hidden costs.

That ends here.

Not because I've suddenly achieved perfect vision—I'm still operating within the same systems that trained my selective attention in the first place. But acknowledging blindness might be the first step toward actually seeing more clearly.

Next week, I'm committing to a fundamental shift: technology analysis that centers the people technology impacts rather than the technology itself. Analysis that doesn't just ask what's possible, but who gets to decide what's possible, who benefits from it, and who ends up bearing the costs. Analysis that treats labor conditions, environmental impact, and power distribution not as inconvenient footnotes but as absolutely central to understanding how technological systems actually work.

This isn't about performing guilt or putting on some virtue-signaling show. It's about finally producing analysis that describes actual reality rather than the carefully curated reality bubble the technology industry has constructed for itself.

The amnesia machine isn't neutral—it never was. It runs on code written by specific people with specific interests, gets deployed on infrastructure built through specific (often exploitative) labor practices, and gets optimized for very specific user populations while ignoring everyone else.

And I've been contributing to that amnesia by analyzing technology while systematically forgetting about the people who make it all possible.

Next week marks the correction. Not because I've suddenly figured everything out (I definitely haven't), but because I owe it to the people who still read long-form content from proper nerds, and especially to all the people my analysis erased, to at least try.


About the Author

Khayyam Wakil is a systems theorist whose work spans immersive technology, spatial computing, and AI development. His early research on distributed visual processing helped lay the groundwork for modern immersive mapping systems. After two decades in Silicon Valley's technical infrastructure, he now writes about technology's human architecture—examining who builds our digital future, who benefits, and who bears the hidden costs.

Through his weekly analysis, he explores the intersection of technical capability and social impact, working to make visible the often-overlooked human infrastructure behind our technological systems. His current focus is on developing frameworks for technology analysis that center human impact alongside technical advancement.

His work bridges technology and human wisdom to create more empowering systems.

Contact: sendtoknowware@protonmail.com

"Token Wisdom" - Weekly deep dives into the future of intelligence: https://tokenwisdom.ghost.io

